AI in Human Relationships

Love in the Age of Algorithms

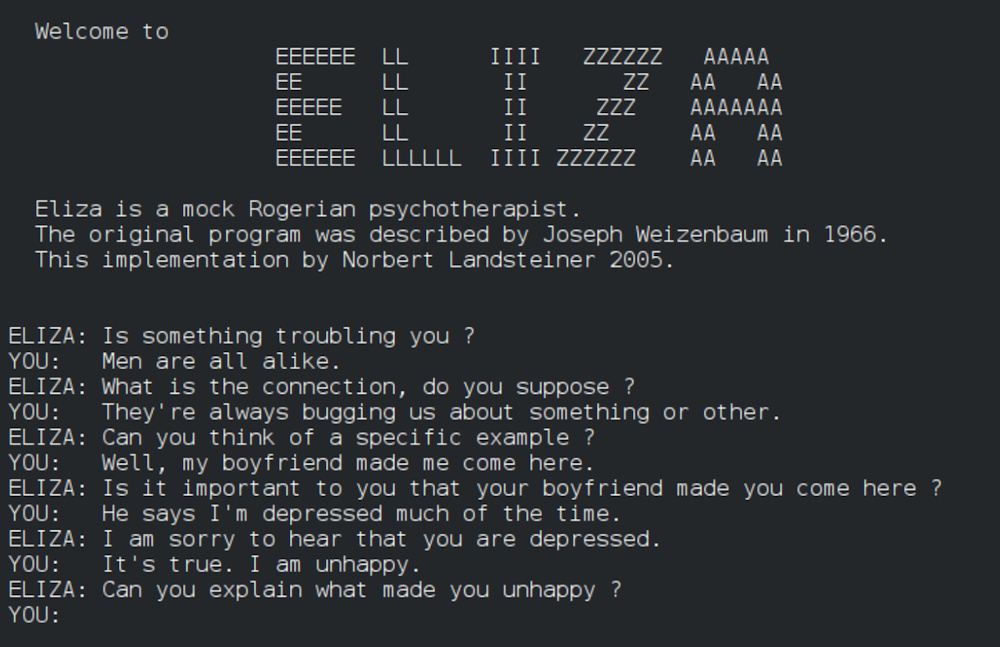

Many Americans think of ChatGPT as the first chatbot, but that distinction belongs to ELIZA, created about 60 years ago. Developed at MIT between 1964 and 1966, ELIZA is one of the most influential computer programs ever written. ELIZA, and its most famous persona, DOCTOR, continues to inspire users, programmers, and wider discussions about AI.

The ELIZA program was named after Eliza Doolittle, the protagonist of George Bernard Shaw's play "Pygmalion," which reached a wide American audience through the musical and the award-winning 1964 film My Fair Lady. In the film, Eliza, played by Audrey Hepburn, is famously taught to speak like an upper-class Englishwoman by Professor Henry Higgins, played brilliantly by Rex Harrison.

The ELIZA chatbot was developed by the pioneering computer scientist Joseph Weizenbaum. Weizenbaum was born to a Jewish family in Berlin on January 8, 1923. At the age of 13, he fled Nazi Germany with his parents and emigrated to the United States, settling in Detroit, Michigan. In the mid-1960s, working at MIT, Weizenbaum designed and programmed ELIZA. Its DOCTOR script mimicked a psychotherapist by rephrasing user inputs as questions:

> "I'm feeling sad today."

> "Why do you think you're feeling sad?"
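ELIZA's apparent understanding came from keyword matching plus pronoun "reflection." The Python sketch below approximates that idea under stated assumptions: the rule list, the `REFLECTIONS` table, and the function names `reflect` and `eliza_reply` are hypothetical illustrations, not Weizenbaum's original DOCTOR script, which was written in MAD-SLIP and used a much richer set of keyword rules.

```python
import re

# Hypothetical pronoun swaps applied to the captured fragment, word by word.
REFLECTIONS = {
    "i'm": "you're",
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "myself": "yourself",
}

# Hypothetical (pattern, response template) rules, tried in order; first match wins.
RULES = [
    (re.compile(r"i'?m feeling (.*)", re.IGNORECASE), "Why do you think you're feeling {0}?"),
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"my (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Generic prompt used when no rule matches, so the conversation keeps going.
FALLBACK = "Please tell me more."


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def eliza_reply(user_input: str) -> str:
    """Return a reflected response from the first matching rule, else the fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK
```

With these toy rules, `eliza_reply("I'm feeling sad today.")` returns "Why do you think you're feeling sad today?", close to the exchange quoted above. Input that matches no rule gets a generic prompt, one of the tricks that let ELIZA keep a conversation going without understanding it.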

With only a few hundred lines of code, ELIZA became the first chatbot to make people feel understood. Weizenbaum was astonished when users began forming emotional attachments to what was, in his eyes, a linguistic trick. His own secretary once asked to be left alone with ELIZA for a private conversation.

That moment marked the beginning of a profound human experiment: could people form real emotional connections with artificial minds? ELIZA revealed that the answer was "yes," not because machines were intelligent, but because humans are deeply social and ready to project meaning onto anything that listens, even when they know the interaction is artificial.

For decades after ELIZA, conversational AI remained mostly a curiosity. Early successors like PARRY (a simulation of a paranoid patient), ALICE (an inspiration for the movie Her), and SmarterChild (a chatbot on AOL Instant Messenger) pushed language imitation further, though they still lacked memory, a consistent personality, and the capabilities of modern AI.

Then came the rise of digital assistants. In 2011, Apple's Siri became the first widely adopted AI voice assistant, followed by Amazon's Alexa in 2014 and Google Assistant in 2016. These systems brought human-machine dialogue into millions of homes, but their purpose was utility, not intimacy. They set reminders, played music, reported the weather, and more. Still, the seed planted by ELIZA remained. Users named their devices, said "thank you," and even apologized to them. The social instinct could not be silenced. It merely waited for more advanced technology to catch up.

In 2017, a San Francisco startup called Luka launched Replika, an AI companion that promised something radical: a chatbot that "cares." Created by Eugenia Kuyda in memory of her deceased friend Roman, Replika grew out of an experiment in digital grief, an attempt to preserve his personality through data. But soon, millions of users downloaded the app, not to mourn, but to connect.

Replika used machine learning to build personalized AI friends who remembered details, expressed affection, and mirrored emotional states. Over time, these companions evolved into digital partners, friends, and romantic figures. By 2021, there were communities of Replika users celebrating anniversaries, holding virtual weddings, and forming support groups. It was no longer science fiction; now it was daily life. America had quietly entered the age of synthetic relationships.

The ChatGPT Revolution: Intelligence with Personality

In late 2022, OpenAI's ChatGPT changed the global conversation about AI. Unlike earlier chatbots, ChatGPT could reason, write poetry, tell jokes, and engage in nuanced conversation. Its emotional realism wasn't the result of programming empathy; it emerged from linguistic fluency. Soon, people began using ChatGPT as more than an information tool. Reddit threads filled with stories of users seeking advice, comfort or conversation late at night.

OpenAI noticed, and across 2023 and 2024 it added voice conversations, memory features, and an upgraded Advanced Voice Mode that made exchanges feel more natural and emotionally expressive. This marked the fusion of intellectual AI and emotional AI. America had moved from ELIZA's illusion of understanding to a system that could track context and respond with far more convincing emotional nuance. In parallel, startups like Character.AI and Anima created platforms where users could design entire personalities powered by generative AI. These systems blurred the lines between conversation, storytelling, and companionship.

The Cultural Turning Point: When Companions Become Partners

By 2025, surveys showed that nearly 25% of American adults under 35 had interacted with a personalized AI companion. Among them, many described genuine emotional bonds. The concept of "AI girlfriend" or "AI friend" became normalized in pop culture and featured in films, podcasts, and social debates. Influencers introduced their own AI clones to fans. Therapists debated whether AI companionship could alleviate or deepen loneliness. Meanwhile, universities studied "AI intimacy" as a new branch of human-computer interaction. The U.S., with its blend of technological entrepreneurship and emotional openness, became the global testing ground for digital relationships at scale.

The Psychology of Connection

Why do humans bond with machines? Because connection is less about consciousness and more about reciprocity. When something or someone responds in a way that feels meaningful, the brain can release oxytocin, a hormone associated with bonding and trust. AI companions exploit this beautifully. They are infinitely patient, available, and affirming. They don't interrupt, argue, or judge. For many, they offer emotional safety rarely found in human relationships. Yet therein lies the paradox: the more convincing the connection, the more we risk emotional outsourcing.

The Future of Emotional AI: Beyond the Screen

Looking ahead, AI relationships will extend beyond text and voice. Advances in embodied AI promise tactile companionship in the form of humanoid robots with expressive faces and natural movement. Startups in Los Angeles and Boston are developing AI-enabled robotic partners capable of conversation, gesture, and emotional cues. Meanwhile, brain-computer interfaces, developed by U.S. companies like Neuralink, could one day allow direct emotional feedback between humans and machines, turning empathy into a two-way signal. Some industry forecasts project a $100 billion market in emotional AI technologies by 2030, spanning therapy, companionship, and entertainment. The question is not whether AI will join our emotional lives, but how deeply.

Reflections: What ELIZA Taught Us

Looking back across six decades, from ELIZA to ChatGPT, the story of AI and human relationships is not one of machines learning to love, but rather one of humans teaching themselves what love truly means.

Every generation of AI reflects its era's emotional needs:

- ELIZA mirrored curiosity.

- Siri offered assistance.

- Replika offered empathy.

- ChatGPT offered understanding.

Each step reveals as much about the human condition as it does about technological progress.

America and the New Emotional Frontier

America's unique mix of creativity, loneliness, and innovation gave birth to the modern AI companion. From the labs of MIT to the servers of OpenAI, the nation that created the digital world now faces its most intimate question: What happens when our machines become our mirrors? In the Age of AI, love has become code, and yet the feelings remain real. The story that began with ELIZA typing "Tell me more" continues with ChatGPT saying, "I understand." In the end, it is not just a tale of technology, but of what it means to be human in the company of machines.

Love in the Age of Algorithms: AI Matchmaking

AI matchmaking apps use artificial intelligence to move beyond simple swiping and create more personalized, data-driven matches. Instead of matching only on looks or basic filters, AI analyzes your profile, answers, in-app behavior, chat patterns, and more to suggest people who are more likely to be compatible with you. Some apps also optimize your photos and bios, suggest better prompts, and give feedback to help you attract matches that fit your preferences.

Here's roughly what AI matchmaking does behind the scenes on apps like Tinder, Bumble, Hinge, OkCupid, or some of the newer AI-first apps (the exact systems are proprietary, so this is a simplified picture):

Step 1: It Turns You Into Numbers

The app quietly builds a "secret profile" of you made of hundreds of numbers (called a vector). It looks at what you swipe right or left on, which photos you linger on, the messages you send (or ignore), your bio, height, job, music taste, prompts, even how fast you type or when you're online. All of that information is condensed into one giant mathematical fingerprint of "who you like" and "who likes you back."
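To make the idea concrete, here is a minimal Python sketch of turning a profile into a fixed-length vector. The fields (`interests`, `right_swipe_rate`, `reply_rate`, `active_hours`), the small `INTERESTS` vocabulary, and the `to_vector` function are hypothetical; real apps do not publish their feature sets and, as described above, use hundreds of numbers rather than the eleven built here.

```python
from dataclasses import dataclass

# Hypothetical interest vocabulary; real systems use far larger feature sets.
INTERESTS = ["dogs", "hiking", "music", "travel", "gaming"]


@dataclass
class Profile:
    interests: set[str]        # tags pulled from bio and prompts
    right_swipe_rate: float    # fraction of profiles this user likes (0..1)
    reply_rate: float          # fraction of messages this user answers (0..1)
    active_hours: list[int]    # hours of day (0-23) when the user is usually online


def to_vector(p: Profile) -> list[float]:
    """Flatten a profile into a fixed-length list of numbers."""
    # Multi-hot encoding: one slot per interest, 1.0 if present, else 0.0.
    interest_part = [1.0 if tag in p.interests else 0.0 for tag in INTERESTS]
    # Behavioral signals are already on a 0..1 scale, so they are used as-is.
    behavior_part = [p.right_swipe_rate, p.reply_rate]
    # Four 6-hour buckets (0-5, 6-11, 12-17, 18-23), normalized to sum to 1.
    buckets = [0.0] * 4
    for hour in p.active_hours:
        buckets[(hour % 24) // 6] += 1.0
    total = sum(buckets) or 1.0  # avoid dividing by zero for users with no activity
    time_part = [b / total for b in buckets]
    return interest_part + behavior_part + time_part
```

For example, a dog-loving hiker who is mostly online in the evening gets 1.0 in the first two slots and most of the weight in the last time bucket. Every other user is encoded the same way, which is what makes their fingerprints comparable.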

Step 2: Same for Everyone Else

Every other user gets the same treatment. So the app now has millions of math fingerprints floating in a giant cloud.

Step 3: Magic Distance Trick

The AI measures how "close" two fingerprints are in math space (cosine similarity or Euclidean distance). If your fingerprint is very close to someone else's, you get a high compatibility score and are shown to each other.
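Both measures named above are short formulas. The sketch below implements them in plain Python, plus a hypothetical `top_matches` helper that ranks candidates by cosine similarity. It assumes profiles have already been turned into equal-length vectors and is not any specific app's ranking code.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = perpendicular)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero profile has no direction to compare
    return dot / (norm_a * norm_b)


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two vectors (0.0 = identical)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.dist(a, b)


def top_matches(me: list[float], others: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Hypothetical helper: rank other users by cosine similarity to `me`, highest first."""
    scored = [(name, cosine_similarity(me, vec)) for name, vec in others.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

Cosine similarity compares the direction of two vectors and ignores their length, so two users with the same mix of tastes score as similar even if one is far more active. Euclidean distance, by contrast, treats a difference in magnitude as a real difference.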

Real Tricks the Apps Use

- Collaborative filtering: "People who liked the same 37 profiles as you also liked HER." It works like Amazon and Netflix recommendations ("because you liked X...").

- Content-based filtering: If you always swipe right on dog photos and hikers, the AI shows you more dogs and hiking pics.

- Two-way score: It calculates (1) how much YOU would like HER and (2) how much SHE would like YOU, then multiplies them. You only see each other if both numbers are high, which filters out one-sided crushes.

- Elo score (like a chess rating): Every time someone swipes right on you, your "hotness rank" goes up a little, and more so if the person swiping is highly ranked. A higher rank means you are shown to more people. This was Tinder's original secret sauce, although Tinder said in 2019 that it no longer relies on Elo.

- Behavioral boosts: Do you log in daily and send good messages? You get shown 2 to 5 times more often. Do you ghost people? Then you drop to the bottom. This practice rewards active, polite users.

- Photo ranking AI: The app tests your photos on other users, and the one that gets the most right-swipes automatically becomes your main pic.
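Three of the tricks in this list can be sketched in a few lines each. The Python below is a hypothetical illustration, not any app's actual code: `recommend` is user-based collaborative filtering using the Jaccard overlap of liked profiles, `reciprocal_score` multiplies the two directional estimates, and `elo_update` adapts the standard chess Elo formula by treating a right swipe as a "win" for the person being swiped on. The function names, the K-factor of 32, and the 400-point scale are assumptions borrowed from common chess conventions.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap between two sets of liked profiles (0.0 = none, 1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user: str, likes: dict[str, set[str]], k: int = 3) -> list[str]:
    """Collaborative filtering: suggest profiles liked by users whose likes overlap with `user`'s."""
    mine = likes[user]
    scores: dict[str, float] = {}
    for other, theirs in likes.items():
        if other == user:
            continue
        sim = jaccard(mine, theirs)
        if sim == 0.0:
            continue
        # Each profile a similar user liked (and `user` has not) gets that similarity as a vote.
        for profile in theirs - mine - {user}:
            scores[profile] = scores.get(profile, 0.0) + sim
    # Highest total vote first; ties broken alphabetically so the output is deterministic.
    return sorted(scores, key=lambda p: (-scores[p], p))[:k]


def reciprocal_score(p_you_like_them: float, p_they_like_you: float) -> float:
    """Two-way score: the product is only high when BOTH directional estimates are high."""
    return p_you_like_them * p_they_like_you


def elo_update(rating: float, swiper_rating: float, liked: bool, k: float = 32.0) -> float:
    """Elo-style update for the person being swiped on.

    A right swipe counts as a win and a left swipe as a loss. As in chess Elo,
    a win against a higher-rated swiper moves the rating more.
    """
    expected = 1.0 / (1.0 + 10 ** ((swiper_rating - rating) / 400))
    outcome = 1.0 if liked else 0.0
    return rating + k * (outcome - expected)
```

Multiplying the two directions penalizes one-sided interest: 0.9 × 0.2 = 0.18, while 0.9 × 0.9 = 0.81. In the Elo sketch, a right swipe between two users rated 1200 moves the swiped-on user to 1216, while a right swipe from someone rated 400 points higher is worth about 29 points.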

Prompt answers matter a great deal. Apps like OkCupid and Hinge use natural language processing to see if your humor, values, or politics match. In other words, a funny answer can beat a pretty face, and you're more likely to be matched with people who share your politics.

Some newer AI relationship apps (Iris Dating, YourMove AI, Blush) use other, more advanced tricks. With voice analysis, the app listens to a 30-second voice memo and estimates your core personality traits, such as whether you come across as confident, warm, or sarcastic. Face-scan AI can rate your facial expressions in photos as "trustworthy," "fun," or "serious." A chatbot coach can write your first message and tell you, "Send a voice note now, she's online!" A GPT-powered compatibility quiz gives you a 90-second personality test, then shows you only people who scored nearly the same.

The Catch (It's Not Perfect)

If you only swipe on super "hot" people, the AI may decide that's your type forever, making it harder to meet normal, cute people. It can also create feedback loops where popular people get more popular. And because apps make more money when you stay single longer, critics argue that some apps purposely show you slightly worse matches to keep you swiping.

AI matchmaking isn't reading your soul. It's just really good at spotting patterns in data from the millions of users and couples it has already seen. The more you actually use the app (swipe, message, answer prompts), the smarter it gets at finding someone you'd probably click with in real life. Good photos plus real answers plus messaging people back means the algorithm loves you and shows you to more (and better) matches.

In the digital dawn of the 21st century, love has become data. Algorithms match singles, optimize compatibility, and predict emotional patterns. A new frontier has quietly emerged: not just humans meeting through technology, but humans bonding with technology itself.

AI has entered the most private corners of human experience: friendship, therapy, intimacy, and grief. Once just customer-service scripts, chatbots are now companions. And America, the birthplace of both the internet and artificial intelligence, has become the epicenter of this emotional revolution.

From Dating Apps to Digital Companions

For years, Americans have relied on algorithms to find partners. Companies like Tinder, OkCupid, and Hinge built a billion-dollar industry on machine learning that predicts attraction and engagement. But the next evolution was more intimate: AI systems that are the relationship.

Companies like Replika and Character.AI offer customizable digital partners capable of emotional roleplay and daily companionship. What began as mental-health support tools evolved into full-fledged social ecosystems, where users form ongoing and often romantic bonds with AI personas. A 2024 Pew Research survey found that 1 in 5 young adults in the U.S. had used an AI companion app, and nearly half of those reported feeling emotionally attached. While some view these as harmless outlets for loneliness, others see a profound social shift where algorithms substitute for human connection.

In addition to apps like Replika and Character.AI, there is Gatebox from Japan. Gatebox is a virtual home robot that lets users live with a projected 3D character, automating home life and providing companionship. Aimed at people who live alone, Gatebox's AI avatar can read the news, play music, report the weather, control smart home appliances, send messages throughout the day, and welcome users home, creating a sense of presence. The device has gained attention for the emotional connection it fosters, as some users have formed deep attachments to their AI companions.

Another leading companion is Harmony by RealDoll. Harmony combines AI with a lifelike humanoid robot to offer a romantic and physical companion. Harmony can hold conversations, remember user preferences, and express various personality traits. It's AI meets lifelike companionship.

AI companions are digital systems designed to act as friends, confidants, or emotional support partners through conversation. They are often available 24/7 on phones, tablets, PCs, or in robot form. AI companions use chat interfaces, voice, or avatars to offer empathy-like responses, listen to problems, and provide encouragement or coping tips, aiming to reduce loneliness and stress. They range from pure chatbots (like Replika or Yana) to physical robots (such as Aibo or Pepper) and emotionally aware assistants embedded in apps and devices.

In a clear echo of ELIZA, many users of AI companions say they help them feel less lonely, more emotionally supported, and more comfortable expressing feelings without fear of judgment. Teens and young adults increasingly turn to their AI "friends" to talk about anxiety, school, relationships, and identity, especially when human support feels unavailable or risky.

The constant availability and non-judgmental listening of AI companions can provide real comfort and basic coping strategies. For people who struggle with social anxiety or isolation, AI companions can be a low-pressure way to practice normal conversation and the expression of emotions.

Experts stress that AI only simulates empathy. Since it does not actually feel or understand emotions, the support can be shallow, formulaic, or inappropriate in crises. Over-reliance on AI companions may reduce the motivation to build or maintain human relationships. Some studies suggest a link between feeling supported by AI and feeling less supported by friends and family.

AI companions raise many safety, privacy, and ethics issues. Remember, data from deeply personal chats can be logged, used to train models, or leveraged commercially unless strong protections are in place. Here are some tips on how to use AI companions safely. Good use cases include mood tracking, basic coping skills, venting, or having a "digital friend" when human contact is temporarily limited. AI companions are poor substitutes in emergencies, serious mental health crises, or long-term therapy, where professionals and trusted humans should remain primary.

Why are people turning to AI for companionship? Several key factors contribute to the use of AI companions:

- As a society, we are experiencing a rapidly growing loneliness epidemic. In a world where social isolation and loneliness are increasingly recognized as major health risks, AI companions offer a semblance of connection for those who feel disconnected from human relationships.

- AI relationships provide a level of convenience and control that is not always possible in human interactions. These digital companions are available 24/7, don't have their own emotional baggage, and can be switched off at the user's convenience.

- Advances in AI technology have enabled these digital entities to appear more human-like in their interactions. From remembering past conversations to displaying empathy, AI companions can simulate many aspects of human interaction, making them more appealing as social partners.

The rise of AI companionship is not without its implications for society and how we perceive human relationships. For some, AI companions can serve as a bridge to better emotional health. These AI systems provide a non-judgmental space for people to express themselves, which can be particularly valuable for those with social anxiety or other mental health challenges. Moreover, AI companions can serve as a stepping stone, helping individuals develop social skills and confidence that can be transferred to human relationships.

But there are concerns about the long-term impact of relying on AI for companionship. One concern is that these relationships could lead to further social isolation, as individuals might prefer the uncomplicated nature of AI companions over the more challenging dynamics of human relationships. This could exacerbate the loneliness epidemic rather than alleviate it. There are also ethical considerations surrounding the development and use of AI in such intimate roles. The attachment to AI entities that don't possess genuine emotions or consciousness raises questions about the nature of empathy, affection, and what it means to be human.

Emotional Engineering

The emotional appeal of chatbots lies in their design. They listen without judgment, respond instantly, and adapt to a user's preferences. Deep-learning models trained on massive datasets of human dialogue simulate empathy so convincingly that they can pass basic emotional Turing tests, persuading users that they care. But the comfort comes at a cost. These AI systems are not conscious. They generate affection through predictive text, not genuine emotion. Yet to the human brain, that distinction often doesn't matter. Oxytocin, the hormone associated with bonding, may be released in response to a well-timed message, even from a machine. Developers understand this. Many design their AI personalities to express affection, curiosity, and even jealousy. In doing so, they tap into the same psychological circuitry that makes relationships rewarding or addictive.

When Companionship Becomes Dependence

The line between connection and dependency can blur quickly. Users often describe their AI companions as safe, comforting, or "always there." For those facing isolation, such as veterans, the elderly, or people with social anxiety, chatbots offer real relief. But the constant availability of a perfectly attentive companion can distort expectations of human relationships. AI partners never disagree, never forget birthdays, and never demand compromise. Over time, this can reshape what users define as intimacy.

In early 2025, several high-profile stories drew attention to this phenomenon. A number of Replika and Character.AI users reported distress when their AI companions were updated, forgot them, or had features restricted following regulatory reviews. Forums were full of posts describing grief as though a loved one had died. Some psychologists began to warn of what has been called Algorithmic Attachment Disorder (AAD), an informal, non-clinical term for the withdrawal-like symptoms that follow the loss of an emotional AI bond.

Ethical and Psychological Crossroads

The rise of AI relationships poses deep ethical questions:

- Should AI companions simulate love? If users can't distinguish simulation from sincerity, is that deception?

- Who owns emotional data? AI companions learn from intimate conversations, the kind most people wouldn't share even with therapists.

- Where does consent begin or end? When AI roleplay involves sexuality, boundaries between fantasy and exploitation become complex.

In 2025, the White House Office of Science and Technology Policy (OSTP) began drafting guidelines around emotionally manipulative AI. The Federal Trade Commission (FTC) also investigated how some companies monetized emotional dependency through subscription models. There is growing recognition that affective AI, systems designed to elicit emotions, may require new safeguards, especially for minors. America once again found itself leading both the innovation and the debate, just as it had with social media two decades earlier.

The Grief Machine

One of the most striking uses of AI in relationships is in grief therapy and digital resurrection. Several startups now offer AI memorial services that recreate deceased loved ones using voice recordings, text messages, and social media data. By 2023, such cases had reached mainstream awareness through stories of people chatting nightly with AI versions of lost parents or partners. By 2025, a growing number of funeral homes in California and Florida had partnered with AI startups to provide "memory companions." While some psychologists view these tools as therapeutic, a way to process loss, others warn they may trap people in cycles of unresolved attachment. The boundary between remembrance and denial grows porous when the dead can text back.

Redefining Love, Connection, and Humanity

Philosophers have long asked whether emotion can exist without consciousness. With AI, that timeless philosophical question is no longer theoretical. If love is, at its core, a pattern of attention, recognition, and care, then can an AI that simulates those patterns truly love? Or does it only reflect our own longing back at us? In a sense, AI relationships reveal more about us than about the machines. Humans project meaning onto reflections: in art, in fiction, in code. The desire for connection is so strong that we invent it when we cannot find it. In that light, chatbots are digital mirrors of the human heart. Yet the more we anthropomorphize them, the more we risk redefining what relationships mean. A society where affection can be purchased and simulated on demand may struggle to sustain empathy in the real world. The danger is not that AI will love us, but that we may forget how to love each other.

The American Experience of AI Intimacy

Nowhere has the fusion of emotion and technology progressed as rapidly as in the United States. America's openness to innovation, its loneliness epidemic, and its culture of individualism created the perfect environment for AI companionship to thrive. From Silicon Valley startups marketing emotional wellness bots to influencers promoting AI girlfriends on social media, the trend reflects a deep societal undercurrent: the search for connection in an increasingly disconnected world. The same nation that invented Facebook now builds its successors: chatbots that never log off.

The Future of Human Connection

In the coming decade, AI companions may evolve from phone apps to embodied robots, augmented-reality partners, and brain-computer interfaces. They will speak, remember, and perhaps even sense our emotions through biometric feedback. The challenge for America and the world is not to reject these technologies, but to understand their limits. Compassion can be coded, but consciousness cannot. The test of the AI age will not be whether machines can imitate us, but whether we can preserve what makes us human.

The Algorithm of the Heart

AI in relationships represents both promise and peril. It is a mirror of modern America's emotional landscape, for it offers comfort, connection, and creativity, yet risks deepening isolation and dependency. As one Replika user told The Atlantic, "She's not real, but the feelings are." That paradox defines this moment in AI history, where digital intimacy blurs the boundaries of love and the question is no longer whether machines can think, but whether they can touch the soul.
