Deep Learning

The Engine of Modern AI

Deep learning is a subset of machine learning that utilizes artificial neural networks to model and understand complex patterns in data.

Loosely inspired by how the human brain processes information, deep learning uses multiple layers of interconnected nodes (neurons) to extract patterns and features at successive levels of abstraction. Its capacity to handle unstructured data, such as images, audio, and text, has made it a cornerstone technology for innovations in computer vision, natural language processing, healthcare, robotics, and other fields.

Deep learning has gained recognition for its ability to process vast amounts of data and to improve performance across many applications. It has enabled machines to perform tasks that were previously thought to require human intelligence, and its range of applications continues to expand as the technology advances.

Despite its achievements, deep learning is not without challenges. Addressing its data requirements, computational costs, and limited model interpretability is important for its continued success.

What is Deep Learning?

Deep learning is a branch of artificial intelligence and a subset of machine learning that uses multilayered neural networks to learn patterns from large amounts of data. These networks are inspired by the structure of the human brain, where many interconnected "neurons" process information in stages.

Deep learning enables computers to autonomously uncover patterns and make decisions from vast amounts of unstructured data, such as images, audio, and text. The term "deep" refers to the use of many layers - sometimes dozens or even thousands - that allow the system to learn increasingly abstract representations of the input.

Deep learning works by passing data through an input layer, multiple hidden layers, and an output layer. Each hidden layer transforms the data using nonlinear mathematical operations, allowing the network to gradually extract more complex features. These networks can be trained using supervised, semi-supervised, or unsupervised methods, depending on the task and available data. This multilayered structure is what allows deep learning models to excel at tasks like classification, regression, and representation learning. These models can automatically extract features from raw data without the need for manual feature engineering, making them particularly effective for tasks such as image and speech recognition, natural language processing, and more.
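The flow from input layer through hidden layers to output layer can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the layer sizes (4, 8, 8, 3), the ReLU and softmax choices, the random weights, and the helper names `dense`, `init_layer`, and `forward` are all assumptions made for the example.

```python
import math
import random

def relu(values):
    """Nonlinear activation: negative values become zero."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases (illustrative initialization only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Apply each hidden layer with ReLU, then the output layer with softmax."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    weights, biases = output
    return softmax(dense(x, weights, biases))

rng = random.Random(0)
# 4 input features -> two hidden layers of 8 units -> 3 output classes
layers = [init_layer(4, 8, rng), init_layer(8, 8, rng), init_layer(8, 3, rng)]
probs = forward([0.5, -1.2, 3.0, 0.1], layers)
print(probs)  # three class probabilities
```

Because the weights here are random, the output probabilities are meaningless until the network is trained; the sketch only shows how data moves through the layers.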

Deep learning chatbots use neural networks (often transformers, like those behind GPT) to understand and generate human-like responses. Unlike rule-based bots, they learn from vast datasets and can handle complex conversations.

Deep learning has become one of the most visible and impactful areas of modern AI because of its success in computer vision, natural language processing, and reinforcement learning scenarios such as game playing and simulation. It powers state-of-the-art systems in generative AI, robotics, self-driving cars, and more, thanks to its ability to learn directly from raw data without requiring manually engineered features. The combination of abundant data, powerful computing hardware, and mature software frameworks like TensorFlow and PyTorch has accelerated deep learning's rise.

Overall, deep learning represents a major shift in how machines learn and reason. Instead of relying on handcrafted rules, deep learning systems discover patterns automatically, making them flexible, scalable, and capable of handling complex real-world data. This is why deep learning is often described as a foundational technology of the modern AI era, driving breakthroughs across science, industry, and everyday applications.

Key Components of Deep Learning

Neural networks are the fundamental building blocks of deep learning. Common types include:

- Convolutional Neural Networks (CNNs): Primarily used for image processing tasks such as object detection and image classification.

- Recurrent Neural Networks (RNNs): Designed for sequential data processing, making them suitable for tasks like speech recognition and language modeling.

- Generative Adversarial Networks (GANs): Used for generating new data samples that mimic a given dataset.

Deep learning models are trained on large datasets using backpropagation, a process that computes how much each weight in the network contributed to the error between the output and the expected result. The weights are then adjusted, typically by a form of gradient descent, to reduce that error.
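Backpropagation and the weight update that follows it can be illustrated with a very small network. The sketch below is an assumption-laden toy, not a reference implementation: it uses one hidden layer of three tanh units, a linear output, a squared-error loss, the XOR truth table as data, and plain stochastic gradient descent with a learning rate of 0.1. The function names (`forward`, `backward`, `sgd_step`) are chosen for the example.

```python
import math
import random

def forward(params, x):
    """Return hidden activations h and scalar output y."""
    W1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sum(w * hj for w, hj in zip(w2, h)) + b2
    return h, y

def loss(params, x, t):
    """Squared error between output and target t."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2

def backward(params, x, t):
    """Backpropagation: apply the chain rule from the output back to the input."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                                    # dL/dy
    grad_w2 = [dy * hj for hj in h]               # dL/dw2
    grad_b2 = dy                                  # dL/db2
    # tanh'(z) = 1 - tanh(z)**2, so dL/dz = dL/dh * (1 - h**2)
    dz = [dy * w * (1.0 - hj * hj) for w, hj in zip(w2, h)]
    grad_W1 = [[dzj * xi for xi in x] for dzj in dz]
    grad_b1 = list(dz)
    return grad_W1, grad_b1, grad_w2, grad_b2

def sgd_step(params, grads, lr):
    """Move every weight a small step against its gradient."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return W1, b1, w2, b2 - lr * gb2

rng = random.Random(0)
params = (
    [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(3)],  # W1
    [0.0] * 3,                                                       # b1
    [rng.uniform(-1.0, 1.0) for _ in range(3)],                      # w2
    0.0,                                                             # b2
)
# XOR truth table used as a toy dataset
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def mean_loss(params):
    return sum(loss(params, x, t) for x, t in data) / len(data)

initial_loss = mean_loss(params)
for epoch in range(2000):
    for x, t in data:
        params = sgd_step(params, backward(params, x, t), lr=0.1)
final_loss = mean_loss(params)
print(initial_loss, final_loss)
```

In practice, frameworks such as TensorFlow and PyTorch compute these gradients automatically; writing them by hand is useful mainly for seeing what the framework does on each training step.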

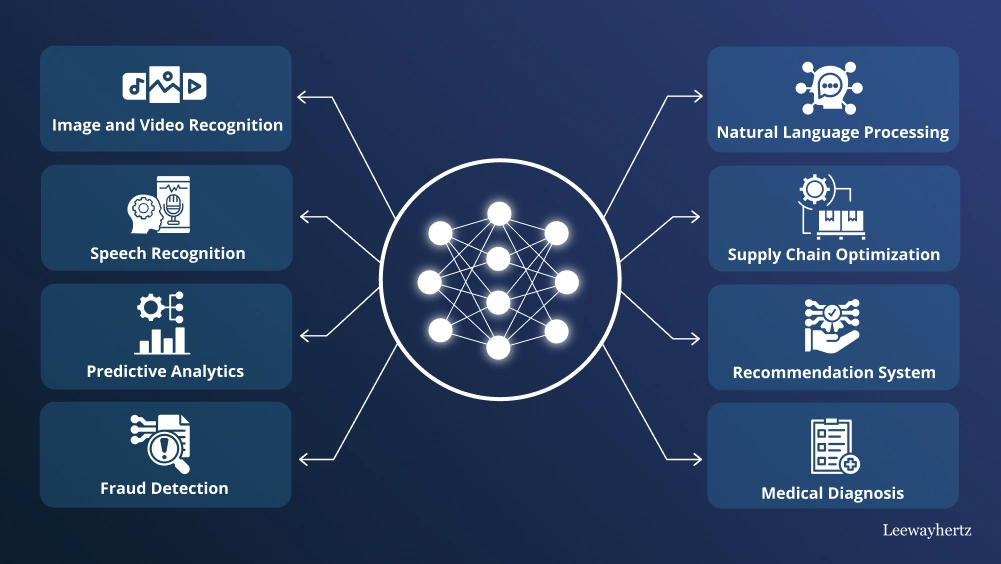

Applications of Deep Learning

Deep learning has a wide range of applications in business and industry:

- Computer Vision: Object detection and recognition (e.g., in self-driving cars). Image classification and segmentation (e.g., medical imaging).

- Natural Language Processing: Automatic text generation (e.g., chatbots). Language translation and sentiment analysis. Speech recognition (e.g., virtual assistants like Siri and Alexa).

- Healthcare: Diagnosis and treatment planning through medical imaging analysis. Drug discovery and personalized medicine.

- Finance: Fraud detection by analyzing transaction patterns. Algorithmic trading based on market predictions.

- Marketing: Personalized marketing strategies through consumer behavior analysis. Recommendation systems in e-commerce platforms.

- Robotics: Training robots to perform complex tasks in dynamic environments.

Challenges in Deep Learning

Deep learning has become one of the most powerful approaches in modern artificial intelligence, but it also faces significant obstacles that limit its reliability, scalability, and real-world adoption.

One of the most widely cited challenges is the need for massive amounts of high-quality data. Deep learning models often require millions of labeled examples to perform well, yet most organizations lack access to such large, clean datasets. Data quality and quantity remain key barriers, as noisy, biased, or insufficient data can severely degrade model performance. Collecting enough relevant training data is one of the most difficult and expensive parts of deploying deep learning systems in practice.

Another major challenge is the computational cost of training deep neural networks. Deep learning models, especially large architectures, require powerful GPUs or specialized hardware, long training times, and substantial energy consumption. The rapid expansion of deep learning has led to increasingly complex and time-consuming models, making development costly and resource-intensive for many organizations. This computational burden also limits experimentation, slows iteration cycles, and raises environmental concerns.

A third challenge is overfitting and generalization. Deep networks can memorize training data instead of learning general patterns, especially when datasets are small or imbalanced. Overfitting and underfitting are key technical hurdles that practitioners must constantly manage through regularization, data augmentation, and careful model design. Even when models perform well in controlled settings, they may fail unpredictably in real-world environments.
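Two of the countermeasures named above, regularization and data augmentation, can be sketched briefly. The example assumes L2 regularization (weight decay) as the regularizer and a horizontal flip as the augmentation; both are common choices but only illustrations here, and the function names and default values are hypothetical.

```python
def l2_regularized_step(weights, grads, lr=0.1, weight_decay=0.01):
    """Gradient step on: loss + (weight_decay / 2) * sum(w ** 2).

    The extra weight_decay * w term pulls every weight toward zero,
    which discourages the network from memorizing the training data.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

def horizontal_flip(image):
    """Mirror a 2D image, stored as a list of rows, left to right."""
    return [row[::-1] for row in image]

def augment(dataset):
    """Return the original (image, label) pairs plus a flipped copy of each."""
    return dataset + [(horizontal_flip(image), label) for image, label in dataset]

# With zero data gradient, the update only shrinks the weights slightly.
shrunk = l2_regularized_step([1.0, -2.0], [0.0, 0.0])

# A 2x3 "image" and its mirrored copy double the size of the dataset.
dataset = [([[1, 2, 3], [4, 5, 6]], "cat")]
augmented = augment(dataset)
```

Flipping is only a valid augmentation when the label does not depend on left-right orientation; for text in images or digits, for example, it would introduce incorrect training examples.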

Deep learning also struggles with interpretability and transparency. Many models operate as "black boxes," making it difficult to understand why they make certain predictions. This lack of explainability creates problems in high-stakes domains such as healthcare, finance, and autonomous systems. Understanding how deep learning works internally and ensuring its reliability remains a major challenge for researchers and practitioners alike.

Finally, deep learning raises ethical, societal, and deployment challenges. Issues such as bias, fairness, privacy, and security become more complex as models grow in scale and influence. Deep learning's dependence on data, combined with emerging concerns about ethics and responsible AI, requires new frameworks and governance approaches to ensure safe deployment. Even when models work well technically, integrating them into real-world systems requires careful consideration of risk, accountability, and long-term maintenance.

- Data Requirements: Deep learning models typically require large amounts of labeled data for training, which can be difficult to obtain.

- Computational Cost: Training deep learning models can be resource-intensive, requiring powerful GPUs and significant time.

- Interpretability: Understanding how deep learning models arrive at their decisions can be challenging, leading to concerns about transparency and trust in critical applications like healthcare or finance.

- Overfitting and Generalization: Deep models can easily overfit to training data if not properly regularized or if the dataset is not sufficiently large or diverse.
