Algorithms

AI algorithms are computer programs that enable machines to perform tasks that typically require human intelligence.

AI algorithms are vital components of artificial intelligence systems that enable machines to learn from data and perform tasks autonomously. By understanding different types of AI algorithms and how they function, we can better appreciate their impact across various fields and their potential to transform industries.

What are AI Algorithms?

AI algorithms are sets of rules or mathematical procedures that allow machines to learn from data, make decisions, and solve problems. They provide the mathematical and logical frameworks behind systems that recognize images, translate languages, recommend movies, detect fraud, and generate creative content.

These algorithms form the backbone of artificially intelligent systems, enabling machines to perform tasks that normally require human cognitive abilities such as learning, reasoning, perception, and decision-making. In other words, an AI algorithm tells a computer how to analyze information and how to improve its performance over time.

AI algorithms are the engines behind everyday technologies like facial recognition, autocorrect, search engines, and social media feeds. Different algorithms are built for different goals. Some algorithms classify data, some detect patterns, and others learn by trial and error. This diversity is why AI can power everything from medical diagnostics to self-driving cars.

Machine learning (ML) algorithms, a major category of AI algorithms, learn patterns from training data and then apply those patterns to make predictions on new data. They range from simple models like linear regression to deep-learning systems with millions or even billions of parameters. With exposure to more data, they typically refine their internal parameters and become more accurate.

Machine learning algorithms are commonly grouped into four major types according to how they learn:

- Supervised learning (learning from labeled examples)

- Unsupervised learning (finding hidden patterns)

- Semi-supervised learning (a mix of labeled and unlabeled data)

- Reinforcement learning (learning through rewards and penalties)

History of Algorithms

Algorithms have a much older and richer history than most people realize. While today we associate them with computers and AI, the concept of an algorithm as a step-by-step procedure for solving a problem dates back thousands of years. Algorithms are rigorous, finite sequences of instructions used for calculations and data processing. Long before computers existed, civilizations created algorithmic methods to perform arithmetic, geometry, and logic.

The earliest known algorithms come from the ancient world. Roughly 4,000 years ago, the Babylonians and Egyptians used arithmetic algorithms for multiplication, division, and solving equations. One of the most famous early examples is Euclid's algorithm, recorded in Euclid's Elements around 300 BCE, which provides a systematic method for finding the greatest common divisor of two numbers. This algorithm is still taught today and remains foundational in number theory.
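
Euclid's method is short enough to show in full. The following is a minimal Python sketch of the remainder-based form usually taught today; the function name `gcd` and the sample inputs are chosen here purely for illustration.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor using Euclid's algorithm (remainder form)."""
    a, b = abs(a), abs(b)
    while b != 0:
        # Replacing (a, b) with (b, a mod b) leaves the gcd unchanged
        # and strictly shrinks b, so the loop always terminates.
        a, b = b, a % b
    return a


print(gcd(1071, 462))  # 21
```

Each pass through the loop replaces the larger number with a remainder, which is why the procedure finishes after only a handful of steps even for large inputs.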

The word algorithm itself comes from the name of the 9th-century Persian mathematician Muhammad ibn Musa al-Khwarizmi, whose works introduced systematic methods for arithmetic and algebra. When his writings were translated into Latin in the 12th century, his name was rendered as "Algorithmi," giving rise to the modern term. His contributions helped formalize algorithmic thinking in mathematics.

As computing emerged in the 20th century, algorithms took on a new role as the specifications for calculation and automated reasoning in early computer science. Alan Turing's 1936 work on computability gave a precise model of mechanical computation and showed that some problems cannot be solved by any algorithm. By the 1950s, algorithms were central to programming, data processing, and the design of early computers.

The history of algorithms also includes the evolution of data structures. Key structures like stacks were described as early as 1946 by Alan Turing and formalized in the 1950s. These structures enabled more sophisticated algorithmic techniques and laid the groundwork for modern software engineering.

Today, algorithms power everything from search engines and social media to cryptography, robotics, and artificial intelligence. Algorithms have become essential to the operation of the modern digital world, shaping how we compute, communicate, and even make everyday decisions.

Types of AI Algorithms

AI algorithms can be categorized based on their functionality and learning approaches. Here are some key types:

Supervised Learning Algorithms

These algorithms learn from labeled data, where the input data is associated with the correct output. Common examples include:

- Linear Regression: Used for predicting continuous values.

- Decision Trees: Used for classification and regression tasks.

- Neural Networks: Used for various applications including image and speech recognition.
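
To make "learning from labeled data" concrete, here is a rough sketch of the simplest possible decision tree: a single split (a "decision stump") on one feature. The data (hours studied versus pass/fail) and the names `fit_stump` and `predict` are hypothetical, and the sketch only considers rules of the form "predict 1 when x is above the threshold".

```python
def fit_stump(xs, labels):
    """Pick the threshold t that minimizes training errors for the
    rule 'predict 1 if x > t, else 0'."""
    values = sorted(set(xs))
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_t, best_errors = None, len(xs) + 1
    for t in candidates:
        errors = sum((x > t) != bool(y) for x, y in zip(xs, labels))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t, best_errors


def predict(threshold, x):
    return 1 if x > threshold else 0


# Hypothetical labeled examples: hours studied -> passed (1) or failed (0).
hours = [1, 2, 3, 6, 7, 8]
passed = [0, 0, 0, 1, 1, 1]

threshold, training_errors = fit_stump(hours, passed)
print(threshold, training_errors)
print(predict(threshold, 5))
```

A full decision tree repeats this kind of split recursively on each resulting subset, usually scoring splits with a measure such as Gini impurity rather than raw error count.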

Unsupervised Learning Algorithms

These algorithms work with unlabeled data to find patterns or groupings within the data. Examples include:

- K-means Clustering: Groups data into clusters based on similarity.

- Principal Component Analysis (PCA): Reduces dimensionality while preserving as much of the variance in the data as possible.
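
As an illustration of clustering without labels, the sketch below runs k-means on one-dimensional points. The data and the starting centroids are made up for the example, and a fixed iteration count stands in for a proper convergence check.

```python
def kmeans_1d(points, initial_centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to its cluster mean
        # (an empty cluster keeps its previous centroid).
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical unlabeled data with two obvious groups.
data = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centers, groups = kmeans_1d(data, initial_centroids=[0.0, 5.0])
print(centers)
print(groups)
```

Practical implementations work on multi-dimensional vectors, choose starting centroids more carefully, and stop once assignments no longer change.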

Reinforcement Learning Algorithms

In this approach, an agent learns by interacting with its environment and receiving feedback in the form of rewards or penalties. This type is commonly used in robotics and game playing.
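
The reward-driven loop can be sketched with tabular Q-learning, one common reinforcement learning method. Everything about the environment here is an assumption made for illustration: a five-cell corridor where the agent starts at the left end and receives a reward of 1 for reaching the right end. For simplicity the agent explores with uniformly random actions; Q-learning can still learn the best action for each state from that experience.

```python
import random

N_STATES = 5            # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)      # index 0 = move left, index 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Hypothetical environment: move along the corridor, reward 1 at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            a = rng.randrange(2)  # explore with a random action
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward
            # reward + discounted value of the best next action.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
            if state == N_STATES - 1:
                break
    return q


q_table = train()
# Greedy policy for the non-goal states: index of the higher-valued action.
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should prefer moving right in every non-goal state, because that action leads to the reward in the fewest steps.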

Search and Optimization Algorithms

These algorithms help in exploring vast search spaces to find optimal solutions. Examples include:

- A* Search Algorithm: Used for pathfinding and graph traversal.

- Genetic Algorithms: Mimic natural selection to optimize solutions.
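
As a sketch of how A* explores a search space, the example below finds a shortest path on a small grid where 0 is an open cell and 1 is a wall. The grid, the function name `a_star`, and the choice of Manhattan distance as the heuristic are assumptions made for this illustration.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest path (list of cells) on a 4-connected grid, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates the remaining steps here.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    best_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc))
                    )
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = a_star(grid, start=(0, 0), goal=(2, 0))
print(path)
```

The heuristic is what distinguishes A* from plain breadth-first or uniform-cost search: cells that look closer to the goal are expanded first, which usually reduces the number of cells visited.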

Natural Language Processing (NLP) Algorithms

These algorithms enable machines to understand and generate human language. Examples include:

- Sentiment Analysis: Determines the emotional tone behind a series of words.

- Named Entity Recognition (NER): Identifies and classifies named entities in text, such as people, organizations, and locations.
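
A toy version of sentiment analysis can be written with nothing more than word lists. The sketch below is deliberately simplistic: the `POSITIVE` and `NEGATIVE` sets are hypothetical, and production systems generally rely on trained models rather than hand-written lexicons.

```python
# Hypothetical, hand-picked word lists for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "sad"}


def sentiment(text):
    """Classify text by counting positive and negative words."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great!"))
print(sentiment("Terrible battery and poor support."))
print(sentiment("The package arrived on Tuesday."))
```

A counting approach like this misses negation ("not good") and sarcasm, which is one reason learned models are preferred in practice.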

Computer Vision Algorithms

These algorithms enable machines to interpret and understand visual information from the world. Examples include Convolutional Neural Networks (CNNs), which are specialized for processing grid-like data such as images.
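
The core operation inside a CNN layer is sliding a small filter over the image grid. The sketch below shows that operation on a tiny hypothetical image with a hand-written edge filter; in a real CNN the filter values are learned during training, and, as in most deep-learning libraries, the "convolution" here is computed without flipping the kernel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the grid of weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 3x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Filter that responds where brightness increases from left to right.
kernel = [[-1, 1]]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The output is nonzero only in the column where the dark region meets the bright one, which is how a filter ends up acting as a detector for a particular local pattern.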

How AI Algorithms Work

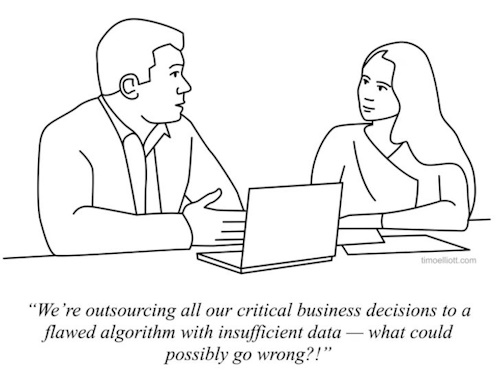

AI algorithms operate by processing large amounts of data to identify patterns and make predictions or decisions based on that data. The process typically involves:

- Data Collection: Gathering relevant data for training the algorithm.

- Training: Feeding the data into the algorithm so it can learn from it. This involves adjusting parameters to minimize errors in predictions.

- Validation: Testing the algorithm on held-out data it did not see during training to evaluate its performance.

- Deployment: Implementing the trained model in real-world applications where it can make predictions or decisions.
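
The four steps above can be sketched end to end with a very small model. This is only an illustration under stated assumptions: the dataset is synthetic (generated from y = 3x + 1), the model is a straight line fitted by gradient descent, and "deployment" is reduced to a `predict` function.

```python
def train(data, learning_rate=0.02, epochs=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Adjust parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(model, data):
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# 1. Data collection: synthetic points on the line y = 3x + 1.
dataset = [(float(x), 3.0 * x + 1.0) for x in range(10)]

# Hold out half of the data so validation uses unseen examples.
train_set = dataset[::2]
validation_set = dataset[1::2]

# 2. Training.
model = train(train_set)

# 3. Validation.
validation_error = mean_squared_error(model, validation_set)
print(validation_error)


# 4. Deployment: expose the trained model as a prediction function.
def predict(x):
    w, b = model
    return w * x + b


print(predict(20.0))
```

Keeping the validation set separate from the training set is the important design choice: it shows whether the model has learned the underlying pattern rather than memorized its training examples.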

Applications of AI Algorithms

AI algorithms have a wide range of applications across various industries, including:

- Healthcare: Assisting in diagnosis, drug discovery, and personalized medicine by analyzing medical data.

- Finance: Fraud detection through pattern recognition in transaction data.

- Marketing: Personalizing customer experiences through recommendation systems based on user behavior.
