Generative AI

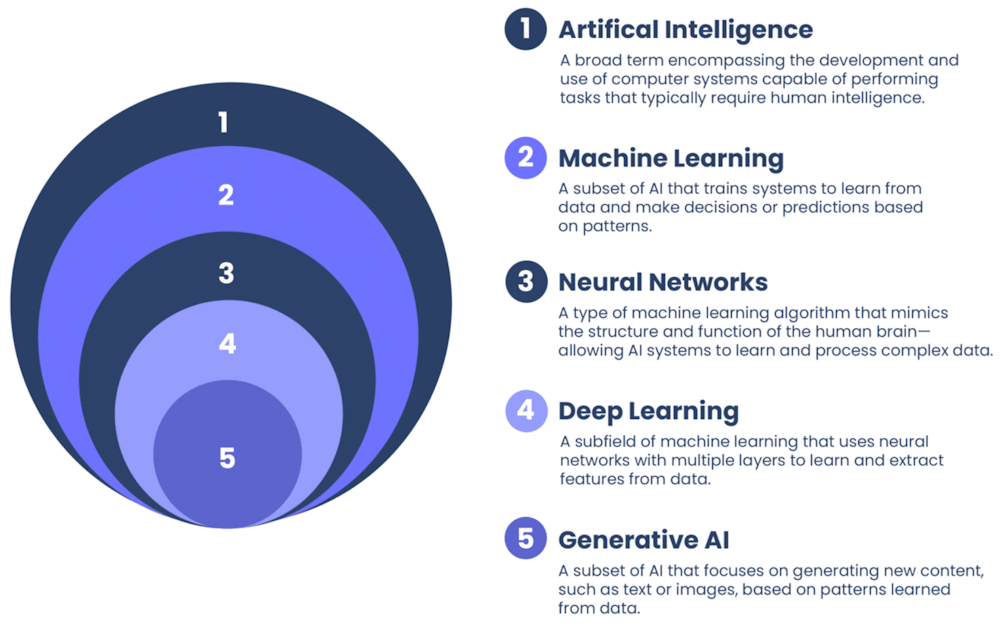

Generative artificial intelligence is the branch of AI concerned with creating new content based on patterns learned from training data. While traditional AI excels at analyzing data and automating tasks, generative AI goes a step further by producing entirely new content.

This technology has gained attention and popularity due to its ability to produce a wide range of outputs, including text, images, audio, and video.

Generative AI is transforming how we create and interact with digital content. By understanding its mechanisms, applications, and ethical implications, users can harness its potential while navigating the challenges it presents. As the technology continues to evolve, it will likely play an increasingly important role in human life.

What is Generative AI?

Generative AI refers to AI systems that can generate original content in response to user inputs.

Generative AI is a branch of artificial intelligence focused on creating new content, such as text, images, audio, video, or even software code, based on patterns learned from large datasets. Instead of simply analyzing or classifying information, these systems *generate* original outputs in response to prompts. Generative AI models learn the underlying structures of their training data and use them to produce new material that resembles human-created content. This capability has expanded rapidly due to advances in deep neural networks, especially large language models built on transformer architectures.

Generative AI models are trained on massive amounts of data, which allows them to identify patterns, relationships, and structures. Once trained, they can produce new content that mirrors the style or logic of what they've learned. These systems can create text, images, video, audio, or code in direct response to a user's prompt. The model doesn't "copy" the data; it synthesizes new outputs by predicting what should come next based on statistical patterns. This is why tools like ChatGPT, DALL-E, and other modern generators feel so fluid and creative.

Generative AI has become one of the most transformative technologies of the 2020s. It accelerates tasks that once required significant human time: drafting documents, designing graphics, writing code, or summarizing complex information. These tools are especially valued for handling time-consuming tasks efficiently and enabling new forms of creativity and productivity. As the technology evolves, it is reshaping industries such as education, entertainment, marketing, software development, and scientific research.

Generative AI is not just a technical breakthrough. It's a shift in how humans interact with information and creativity. These models redefine our relationship with technology by producing content that mirrors human expression and by enabling more natural, conversational interactions with machines. At the same time, researchers are working to address challenges such as accuracy, bias, and responsible use. As generative AI continues to advance, it is poised to become a foundational tool across nearly every domain of modern life.

How Does Generative AI Work?

Generative AI models typically utilize machine learning techniques, particularly deep learning algorithms.

Generative AI works by learning patterns from massive datasets and then using that knowledge to create new content - text, images, audio, video, or code - that resembles human-made material. Generative AI models analyze huge amounts of data and use neural networks to generate outputs that "mirror human expression". Unlike traditional AI, which classifies or predicts, generative AI produces something new each time you prompt it.

Here is how the process works:

1. Training on Large Datasets

Generative AI models are trained on enormous collections of text, images, audio, or other data. During training, the model learns statistical relationships such as how words follow each other, how images are structured, how code patterns work, etc. This training allows the model to create original content in response to user prompts.

2. Neural Networks Learn Patterns

Deep neural networks - especially transformer architectures - identify patterns across billions of examples. How these networks process data is central to how generative AI works. Rather than simply memorizing their training examples, they learn internal representations that help them generalize to new inputs.

3. The Model Predicts What Comes Next

When you give a prompt, the model generates content by predicting a likely next token (a word or word fragment, an image patch, an audio sample, and so on, depending on the model). This prediction happens one step at a time, guided by the patterns learned during training. Systems like ChatGPT generate text by repeatedly selecting a probable next token based on the context so far.
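To make next-token prediction concrete, here is a minimal sketch that uses a toy word-pair (bigram) model instead of a neural network. It counts which words follow which in a tiny made-up corpus, then generates text one token at a time by sampling a follower in proportion to those counts. The corpus, the function names `train_bigram` and `generate`, and the fixed random seed are illustrative assumptions; real systems use learned neural networks over far larger vocabularies.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_tokens=8, seed=0):
    """Generate text one token at a time, sampling each next word in
    proportion to how often it followed the current word in training."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:  # no known continuation, so stop early
            break
        words = list(followers)
        weights = [followers[w] for w in words]
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


# Toy training corpus (an assumption for illustration only).
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)
print(generate(model, "the"))
```

Every generated word pair is one the model saw during training, yet the overall sentence may be new, which is the same basic idea at a vastly smaller scale.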

4. Fine-Tuning and Alignment

After initial training, models are refined using techniques like:

- Supervised fine-tuning (learning from human-written examples)

- Reinforcement learning from human feedback (RLHF)

- Safety and alignment training to reduce harmful or incorrect outputs

This process helps models "learn, improve, and process natural language" more effectively.

5. Generation at Inference Time

When you type a prompt, the model:

- Converts your text into numerical vectors

- Processes them through its neural network layers

- Predicts and outputs new content

Each prediction step typically takes only milliseconds, giving the impression of fluid conversation or creativity.
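The three inference steps above can be sketched in a few lines of plain Python. This is only an illustration under strong assumptions: the four-word vocabulary, the hand-picked `EMBEDDINGS` and `OUTPUT_WEIGHTS` values, and the averaging step that stands in for the neural network layers are all hypothetical. A real model learns its parameters during training and uses many stacked layers.

```python
import math

VOCAB = ["hello", "world", "good", "morning"]
TOKEN_IDS = {word: i for i, word in enumerate(VOCAB)}

# Hypothetical, hand-picked parameters; a real model learns these in training.
EMBEDDINGS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
OUTPUT_WEIGHTS = [[0.2, 1.5], [1.0, -0.3], [0.1, 0.4], [0.9, 2.0]]  # one row per vocabulary word


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_distribution(prompt):
    # Step 1: convert the text into numerical vectors.
    vectors = [EMBEDDINGS[TOKEN_IDS[word]] for word in prompt.split()]
    # Step 2: process the vectors. Averaging is a stand-in for real network layers.
    dim = len(vectors[0])
    context = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    # Step 3: score every vocabulary word and normalize into probabilities.
    scores = [sum(w * c for w, c in zip(row, context)) for row in OUTPUT_WEIGHTS]
    return dict(zip(VOCAB, softmax(scores)))


probs = next_token_distribution("good")
prediction = max(probs, key=probs.get)
print(prediction)  # prints "morning" with these hand-picked weights
```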

🎨 Different Models Generate in Different Ways

Generative AI includes multiple model families, each with its own mechanism:

- Transformers: Predict next tokens in text or multimodal sequences

- Diffusion Models: Start with noise and gradually refine into an image

- GANs: Two networks compete to create realistic outputs

- VAEs: Encode data into a latent space and reconstruct variations

🌍 Why It Feels So Human

Generative AI feels natural because:

- It has been trained on human-created data

- It uses probability to choose contextually appropriate outputs

- It can integrate reasoning, memory, and pattern recognition

- It adapts to your prompt style and intent

These systems redefine our relationship to technology by producing content that mirrors human expression.
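The point above about using probability to choose outputs can be illustrated with temperature sampling, a common way to trade predictability against variety. The sketch below is a simplified assumption of how this works: the candidate words and their scores are invented, and `softmax_with_temperature` and `sample` are hypothetical helper names, not part of any particular library.

```python
import math
import random


def softmax_with_temperature(scores, temperature=1.0):
    """Convert scores to probabilities. Lower temperatures sharpen the
    distribution; higher temperatures flatten it."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(candidates, scores, temperature=1.0, seed=None):
    """Pick one candidate at random, weighted by its probability."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical candidates for the next word after "The cat sat on the".
candidates = ["mat", "sofa", "moon"]
scores = [2.0, 1.0, -1.0]

cold = softmax_with_temperature(scores, temperature=0.5)
warm = softmax_with_temperature(scores, temperature=2.0)
print([round(p, 3) for p in cold])
print([round(p, 3) for p in warm])
print(sample(candidates, scores, temperature=1.0, seed=0))
```

At the lower temperature the top-scoring word takes most of the probability, so output is more predictable; at the higher temperature the less likely words are chosen more often.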

Types of Generative Models

Generative models come in several families, each with different strengths, architectures, and ideal use cases. Below is a breakdown of the most important ones.

✨ Transformer Models

Transformers are the dominant architecture for text generation, multimodal reasoning, and general-purpose AI. They excel at producing human-like text and code. Examples include GPT-4, Gemini, and other large language models. Transformers are the best choice when you want text that "looks like a human could write it".

Best for: Text, code, reasoning, multimodal tasks.

🎨 Diffusion Models

Diffusion models generate images by gradually removing noise from random patterns until a coherent picture emerges. They power modern image generators like Stable Diffusion and DALL-E 3. Diffusion models are ideal for creating original images from prompts.

Best for: Images, art, photorealism, creative visual tasks.
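The noising and denoising arithmetic behind diffusion can be sketched on a short list of numbers standing in for pixels. This is a simplified illustration, not a working image generator: the linear noise schedule and its default values are common choices but are assumptions here, and the sketch removes the noise in a single step using the true noise. A real diffusion model trains a neural network to estimate that noise and removes it gradually over many steps.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule.
    Values near 1 mean little noise; values near 0 mean almost pure noise."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, noise):
    """Forward process: blend clean data with Gaussian noise."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
            for x, n in zip(x0, noise)]


def remove_noise(xt, alpha_bar, predicted_noise):
    """Invert the blend, given an estimate of the noise that was added."""
    return [(x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
clean = [0.5, -1.0, 2.0]  # stand-in for pixel values
alpha_bars = make_alpha_bars(1000)
noise = [rng.gauss(0.0, 1.0) for _ in clean]

noisy = add_noise(clean, alpha_bars[-1], noise)      # almost pure noise
recovered = remove_noise(noisy, alpha_bars[-1], noise)  # uses the true noise as an oracle
print([round(v, 6) for v in recovered])
```

Because the exact noise is known here, the clean values are recovered almost perfectly; the hard part in practice is learning a network that predicts the noise well enough.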

🔁 GANs (Generative Adversarial Networks)

GANs use two neural networks - a generator and a discriminator - that compete to produce increasingly realistic outputs. They were the dominant approach to image synthesis before diffusion models. GANs are still widely used for deepfakes, style transfer, and synthetic data.

Best for: Realistic images, video synthesis, style transfer.
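The competition between the two networks is usually expressed through a pair of loss functions. The sketch below shows the standard binary cross-entropy form for a single real and a single fake sample, using the commonly used "non-saturating" generator loss. The networks themselves are omitted; `d_real` and `d_fake` are assumed to be the discriminator's probability outputs (strictly between 0 and 1) for a real sample and a generated sample.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real samples near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -math.log(d_fake)


# A discriminator that separates real from fake well has a low loss...
print(discriminator_loss(d_real=0.9, d_fake=0.1))
# ...while the generator's loss is high, pushing it to improve.
print(generator_loss(d_fake=0.1))
```

Training alternates between lowering each loss, so an improvement on one side raises the pressure on the other.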

📉 VAEs (Variational Autoencoders)

VAEs compress data into a latent space and then reconstruct it, allowing them to generate new variations. They produce smoother, less sharp images than GANs but are more stable to train. VAEs are useful for representation learning and controlled generation.

Best for: Latent-space exploration, anomaly detection, structured generation.
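Two small pieces of arithmetic are central to how a VAE handles its latent space: drawing a latent vector from the encoder's predicted mean and variance (the reparameterization trick), and a penalty that keeps those predictions close to a standard normal distribution. The sketch below shows both under the usual assumption of a diagonal Gaussian latent; the encoder and decoder networks are omitted, and the `mu` and `log_var` values are invented for illustration.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [1.0, 0.0], [0.0, 0.0]  # hypothetical encoder outputs

z = sample_latent(mu, log_var, rng)   # a point in latent space to decode
penalty = kl_to_standard_normal(mu, log_var)
print(z, penalty)
```

Sampling different `z` values near the same `mu` and decoding them is what yields variations of an input.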

📊 Autoregressive Models

These models generate data one step at a time, predicting the next token or pixel based on previous ones. Most transformer-based language models are themselves autoregressive, but earlier examples include PixelRNN and WaveNet. These models are strong for sequential data.

Best for: Text, audio, pixel-by-pixel image generation.

🔄 Flow-Based Models

Flow models learn reversible transformations between simple and complex distributions. They allow exact likelihood estimation and fast sampling. Examples include RealNVP and Glow.

Best for: Scientific modeling, density estimation, interpretable latent spaces.
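The simplest possible flow is a single reversible scale-and-shift applied to a standard normal variable. The sketch below is a one-dimensional illustration, not how RealNVP or Glow are implemented; the class name `AffineFlow` and its parameter values are assumptions. It shows the two properties mentioned above: the transformation can be inverted exactly, and the likelihood of any data point can be computed exactly via the change-of-variables formula.

```python
import math


class AffineFlow:
    """A one-dimensional flow: x = scale * z + shift, with z drawn from N(0, 1)."""

    def __init__(self, scale, shift):
        if scale == 0:
            raise ValueError("scale must be non-zero for the flow to be reversible")
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        """Map a simple latent value to a data value (used for sampling)."""
        return self.scale * z + self.shift

    def inverse(self, x):
        """Map a data value back to its latent value."""
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        """Exact log-likelihood: base log-density minus log |scale|."""
        z = self.inverse(x)
        base = -0.5 * (z * z + math.log(2.0 * math.pi))
        return base - math.log(abs(self.scale))


flow = AffineFlow(scale=2.0, shift=1.0)
x = flow.forward(0.3)
print(x, flow.inverse(x), flow.log_prob(1.0))
```

Practical flow models stack many such invertible layers with learned, input-dependent parameters, but each layer keeps the same invertibility and exact-likelihood structure.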

🧩 Hybrid & Multimodal Models

Modern frontier models combine multiple architectures. Multimodal transformers (e.g., GPT-4, Gemini) integrate text, images, audio, and more.

Best for: Unified AI systems that understand and generate across modalities.

Applications of Generative AI

Generative AI is affecting nearly every industry by creating new content, accelerating workflows, and enabling forms of automation that were impossible just a few years ago. Generative AI is already used in health care, advertising, manufacturing, software development, financial services, entertainment, cybersecurity, education, gaming, virtual assistants, and content creation.

Here are some of the most important application areas.

🏥 Healthcare: drug discovery and molecular design; medical image synthesis for training; personalized treatment recommendations; synthetic patient data for research.

💼 Business & Productivity: automated report writing and summarization; email drafting and customer communication; workflow automation and knowledge management; personalized marketing content.

🎨 Creative Industries: image generation (art, design, branding); video and animation creation; music composition and sound design; scriptwriting and story generation.

🧪 Research & Manufacturing: generating 3D models and simulations; optimizing product designs; synthetic data for testing and training; accelerating R&D cycles.

🧾 Software Development: code generation and debugging; automated documentation; test case creation; refactoring and optimization.

💰 Finance: fraud detection using synthetic data; automated financial reports; personalized investment insights; scenario modeling.

🎮 Gaming: procedural world generation; character dialogue and storylines; realistic textures and assets.

🔐 Cybersecurity: generating synthetic attack data for training; automated threat simulation; AI-driven defense modeling.

🧑‍🏫 Education: personalized tutoring; automated quiz and lesson generation; content simplification and translation.

🗣️ Virtual Assistants & Customer Service: conversational agents; automated support responses; voice synthesis for interactive systems.

🎬 Entertainment & Media: film pre-visualization; deepfake creation (with ethical concerns); automated editing and post-production.

Ethical Considerations and Challenges

While generative AI offers exciting possibilities, it also raises ethical concerns.

Generative AI is powerful, creative, and transformative, but it also introduces serious ethical risks. As eWeek notes, generative AI ethics has become "an increasingly urgent issue" as the technology grows more mainstream and influential. Researchers, policymakers, and industry leaders are now grappling with how to ensure these systems are fair, safe, transparent, and accountable.

🧩 Bias and Fairness

Generative AI models learn from massive datasets that often contain historical biases. If the training data is unrepresentative, the model may reinforce stereotypes or discriminatory patterns. Biased data can perpetuate harmful outcomes unless developers improve data practices and oversight.

Why it matters: Biased outputs can affect hiring tools, educational content, legal assessments, and more.

📰 Misinformation and Hallucinations

Generative AI can produce content that is factually incorrect, misleading, or entirely fabricated. Misinformation is one of the most significant ethical risks, especially when AI-generated content appears authoritative. Hallucinations - confident but false outputs - remain a major challenge.

Why it matters: AI-generated misinformation can influence public opinion, distort scientific understanding, or spread harmful narratives.

🧠 Intellectual Property and Copyright

Generative AI models are often trained on vast amounts of copyrighted material. How AI systems use copyrighted works without explicit permission, and whether their outputs count as original, are core ethical challenges.

Why it matters: Creators worry about unauthorized use of their work, and companies face legal uncertainty around training data and generated outputs.

🔒 Privacy and Data Protection

Generative AI may inadvertently reveal sensitive information learned during training. Privacy is a major ethical concern, especially when models are trained on personal or proprietary data.

Why it matters: Users need assurance that their data won't be leaked, misused, or embedded in model outputs.

🛡️ Safety and Harmful Content

Generative AI can produce harmful, offensive, or dangerous content if not properly aligned. Safety, harmful content, and societal impacts are central ethical issues.

Why it matters: Unrestricted models could generate hate speech, self-harm instructions, or extremist propaganda.

🌍 Environmental Impact

Training large models requires enormous computational resources. Environmental concerns are part of the broader ethical landscape.

Why it matters: Energy consumption and carbon emissions raise sustainability questions as models grow larger.

🏛️ Power Concentration and Inequality

Monopolization of AI capabilities by a few large labs can create global inequities. Developing countries may lack access to advanced models. A small number of companies may control critical infrastructure.

Why it matters: Unequal access to AI could widen economic and technological divides.

🧾 Transparency and Accountability

Since generative AI systems often operate as "black boxes," there is a need for transparency and clear accountability frameworks.

Why it matters: Users and regulators need to understand how decisions are made and who is responsible when things go wrong.

Links

aws.amazon.com/what-is/generative-ai/

cloudskillsboost.google/paths/118

en.wikipedia.org/wiki/Generative_artificial_intelligence

techtarget.com/searchenterpriseai/definition/generative-AI

youtube.com/watch?v=rwF-X5STYks

coursera.org/articles/what-is-generative-ai

news.mit.edu/2023/explained-generative-ai-1109

govops.ca.gov/wp-content/uploads/sites/11/2023/11/GenAI-EO-1-Report_FINAL.pdf