Large Language Models

AI systems designed to understand and generate human language.

Large Language Models (LLMs) represent a major advance in AI's ability to understand and generate human language. Their applications span numerous fields, making them valuable tools for businesses and developers alike. They are built using deep learning techniques and trained on vast datasets, often comprising terabytes of text from books, articles, and websites. This extensive training enables them to recognize patterns in language and generate coherent text based on given prompts.

What are LLMs?

Large Language Models are advanced AI systems designed to understand and generate human language at scale. An LLM is a language model trained with self‑supervised machine learning on vast amounts of text, enabling it to perform natural‑language tasks such as answering questions, summarizing information, or generating content.

LLMs are a category of deep learning models trained on immense datasets, giving them the ability to produce natural language and other types of content across a wide range of tasks. Because they are trained on billions of words, they learn patterns, grammar, context, and relationships between ideas, allowing them to generate fluent, coherent responses.

These models are typically built on the Transformer architecture, which revolutionized AI by introducing self-attention, a mechanism that lets the model focus on the most relevant parts of the input when generating output. LLMs use Transformers to process longer text sequences and perform complex tasks like summarization, translation, and question answering. Transformers help LLMs learn long-range dependencies and contextual meaning, which is why they can maintain coherence across long passages of text.

The "large" in large language models refers to both the scale of the training data and the number of parameters, often in the billions, that the model uses to represent linguistic knowledge. This scale gives LLMs their versatility: they can write essays, translate languages, generate code, analyze documents, and engage in conversation without being explicitly programmed for each task. This broad capability comes from the combination of deep learning, massive datasets, and extensive training.

Modern examples of LLMs include ChatGPT, Google Gemini, and Anthropic Claude, all of which rely on Transformer-based architectures and large-scale training to deliver human‑like language abilities. As these models continue to grow in size and sophistication, they are reshaping how people interact with technology, enabling new forms of creativity, productivity, and problem‑solving.

How do they Work?

TL;DR: LLMs are built primarily on the Transformer architecture, which allows them to process input data effectively. They use mechanisms like self-attention to evaluate the relationships between words in a sentence, enabling them to maintain context and produce relevant responses. The training process typically involves self-supervised learning on unstructured text, followed by fine-tuning for specific tasks.

Large language models work by learning statistical patterns in massive amounts of text so they can predict and generate language. At their core, LLMs are trained using self-supervised learning, where the model repeatedly tries to predict missing or next words in sentences drawn from huge text corpora. An LLM is a language model trained on vast datasets using this method, allowing it to internalize grammar, facts, relationships, and patterns of human communication. Over billions of training steps, the model adjusts its internal parameters so that its predictions become increasingly accurate.
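The self-supervised setup described above needs no hand-written labels, because the text itself supplies the answers. A minimal sketch, assuming whitespace splitting stands in for a real sub-word tokenizer and using a hypothetical helper named `next_token_pairs`:

```python
def next_token_pairs(tokens):
    """Turn a token sequence into (context, target) training pairs."""
    # Each prefix of the sequence becomes a context; the token that
    # follows it becomes the target the model is trained to predict.
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Assumption: splitting on spaces is only a stand-in for real tokenization.
tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens)

for context, target in pairs:
    print(" ".join(context), "->", target)
```

A six-token sentence yields five training examples. Real training applies the same idea to very large corpora and adjusts the model's parameters to make each target more probable given its context.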

The underlying architecture that makes modern LLMs so powerful is the Transformer, introduced in 2017 and now the foundation of nearly all state-of-the-art language models. Transformers are systems that use self-attention, a mechanism that lets the model weigh which words in a sentence are most relevant to each other when generating meaning. This allows LLMs to handle long-range dependencies, such as connecting ideas across paragraphs, far better than earlier neural network designs. Because Transformers can process text in parallel rather than sequentially, they scale efficiently to billions of parameters.
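The self-attention step can be sketched in a few lines of plain Python. This is a simplified illustration rather than a production implementation: the same toy vectors are used directly as queries, keys, and values, whereas a real Transformer first passes the inputs through learned projection matrices and runs many attention heads in parallel. The function names and the toy data are assumptions made for this example.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional "embeddings" for three tokens (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every other token. Because every row can be computed independently, the work parallelizes well, which is the property the paragraph above refers to.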

During training, the model learns to estimate the probability of each possible next token (a token is usually a word or sub-word). LLMs generate text by using these learned probabilities to choose a likely continuation of a prompt, either the single most probable token or one sampled from the distribution. This prediction process is repeated token by token, which is why LLMs can produce long, coherent passages of text. Importantly, the model does not retrieve information from a database; instead, it synthesizes responses based on patterns encoded in its parameters.
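One prediction step can be illustrated as follows. The vocabulary and the raw scores (logits) below are invented for the example; in a real model the scores come from the network and the vocabulary holds tens of thousands of tokens. The sketch shows two common ways of picking the next token: taking the most probable one, or sampling in proportion to the probabilities.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over tokens."""
    scaled = [value / temperature for value in logits]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for the token after "the cat sat on the".
vocab = ["mat", "sofa", "moon", "."]
logits = [3.0, 2.0, 0.5, 0.1]

probs = softmax(logits)

# Greedy decoding: always take the most probable token.
greedy_token = vocab[probs.index(max(probs))]

# Sampling: draw a token in proportion to its probability.
rng = random.Random(0)  # fixed seed so the run is repeatable
sampled_token = rng.choices(vocab, weights=probs, k=1)[0]

print(greedy_token, sampled_token)
```

Generating a full passage repeats this step: the chosen token is appended to the prompt and the model is asked for the next distribution.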

Once trained, an LLM can perform a wide range of tasks - translation, summarization, question answering, reasoning, and creative writing - without being explicitly programmed for each one. Although people often summarize LLMs as predicting the next word, the scale of their training and the complexity of the Transformer architecture allow them to generate surprisingly rich, context-aware responses. This versatility is why LLMs have become central to modern AI applications.

Key Components

Neural Networks: LLMs are based on neural networks, which are loosely inspired by the way human brains process information. They consist of multiple layers, including embedding layers, feedforward layers, and attention layers; earlier language models also relied on recurrent layers, which Transformer-based LLMs generally do not use. Each layer plays a role in transforming input data into meaningful output.

Parameters: The size of an LLM is usually described by the number of parameters it contains. These parameters are the weights the model adjusts during training, and can be thought of as the model's "memories" or learned knowledge from the training data. More parameters generally give a model more capacity for understanding and generation, although the quantity and quality of training data also matter.
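To give a feel for where the billions of parameters come from, the sketch below uses a common rule of thumb: each Transformer layer holds roughly 12 × d_model² weights, about 4 × d_model² in the attention projections and 8 × d_model² in a feedforward block with a 4x hidden width. The estimate ignores embeddings, biases, and normalization weights. The layer count and width used here are the published configuration of the largest GPT-3 model; they are brought in as an assumption for illustration and do not come from this article.

```python
def approx_transformer_params(n_layers, d_model):
    """Rough non-embedding parameter count for a Transformer stack."""
    attention = 4 * d_model * d_model    # query, key, value, output projections
    feedforward = 8 * d_model * d_model  # two matrices, assuming a 4x hidden width
    return n_layers * (attention + feedforward)


# Assumed GPT-3-like configuration: 96 layers, model width 12288.
gpt3_like = approx_transformer_params(n_layers=96, d_model=12288)
print(f"{gpt3_like / 1e9:.0f}B parameters")
```

The result lands close to the 175 billion parameters usually quoted for GPT-3, which suggests that most of a large model's parameters sit in its attention and feedforward layers.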

Applications of LLMs

Large language models have become important tools in AI because they can understand, generate, and transform text at scale. LLMs are now used to automate complex workflows such as document processing, financial analysis, and contract review, dramatically reducing the time required for tasks that once took days or weeks. This makes them especially valuable in fields like finance, law, and enterprise operations, where large volumes of text must be analyzed quickly and accurately.

LLMs are also transforming content creation, enabling teams to generate articles, marketing copy, reports, and even computer code with far greater speed. LLMs streamline creative workflows by producing high-quality written material and assisting developers with coding tasks. Beyond writing, they support translation and localization, offering context-aware, culturally sensitive adaptations of content for global audiences. This makes them powerful tools for international communication and multilingual product development.

Another major application is in search and virtual assistants. LLMs power next-generation search engines and conversational agents capable of understanding complex user intent and responding in natural, human-like language. This includes everything from customer support chatbots to voice-activated assistants that can handle nuanced queries. In enterprise settings, LLMs are increasingly embedded into AI agents that automate multi-step tasks, coordinate workflows, and interact with software on behalf of users.

Real-world deployments span dozens of industries. There are hundreds of production use cases, including applications in healthcare, education, e-commerce, cybersecurity, and entertainment. These range from medical note summarization and tutoring systems to fraud detection, personalized recommendations, and automated moderation. As LLMs continue to improve, they are becoming core infrastructure for organizations seeking to enhance productivity, reduce costs, and deliver more intelligent digital experiences.

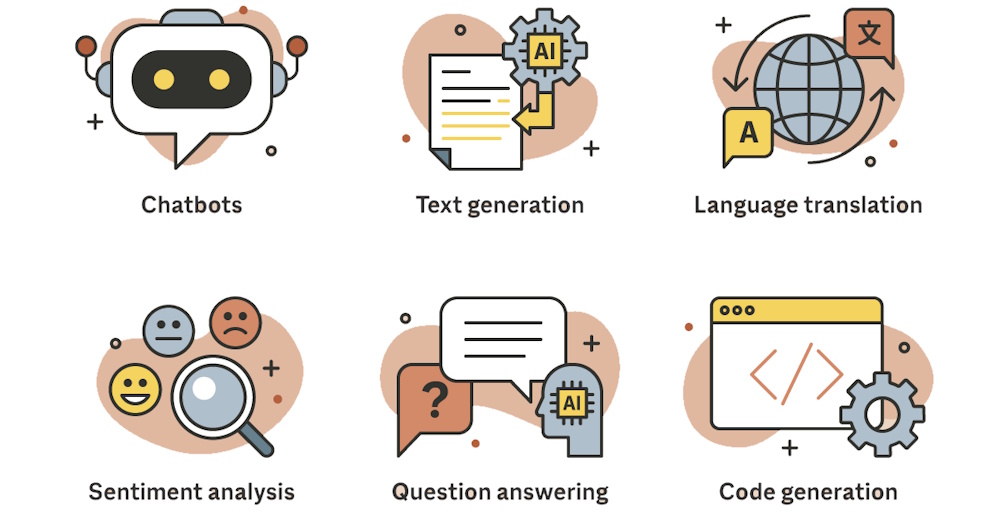

LLMs have a wide range of applications:

- Chatbots: Chatbots like ChatGPT are built on top of LLMs, although they include additional layers to manage context and memory.

- Text Generation: LLMs can create original content, such as articles, stories, and poetry.

- Language Translation: LLMs can translate languages and summarize large texts efficiently.

- Sentiment Analysis: LLMs can detect and analyze the emotional tone behind a body of text.

- Question Answering: LLMs can answer questions posed in natural language, which lets them power chatbots and virtual assistants that engage in human-like conversations.

- Code Generation: Some models are specifically trained to assist in programming tasks by generating code snippets based on user input.

Types of LLMs

Large language models can be grouped by architecture, training objective, and specialization. Here are some of the major categories used across modern AI systems.

Decoder‑Only Models

These are the most common LLMs today. They generate text by predicting the next token in a sequence. They excel at open‑ended generation, conversation, creative writing, and coding. Examples include GPT, Claude, LLaMA, and Mistral.

Encoder‑Only Models

These models are designed for understanding text rather than generating it. A classic example is BERT, which is not built for prompting or generative tasks. They are used for classification, sentiment analysis, search ranking, and embeddings.

Encoder-Decoder (Seq2Seq) Models

These models read input with an encoder and produce output with a decoder. They are strong in translation, summarization, and structured text transformation. Examples include T5 and BART.
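One concrete way to see the difference between these three families is the attention mask, which controls which positions each token may look at. The sketch below is illustrative only, and the helper names are made up for the example. A decoder uses a causal mask, so each token sees only itself and earlier tokens; an encoder uses a bidirectional mask, so each token sees the whole input.

```python
def causal_mask(n):
    """Decoder-style mask: position i may attend to positions 0..i only."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Encoder-style mask: every position may attend to every position."""
    return [[True] * n for _ in range(n)]


def show(mask):
    """Render a mask with 'x' for allowed and '.' for blocked positions."""
    return "\n".join(
        " ".join("x" if allowed else "." for allowed in row) for row in mask
    )


print("decoder (causal):")
print(show(causal_mask(4)))
print("encoder (bidirectional):")
print(show(bidirectional_mask(4)))
```

An encoder-decoder model combines both: its encoder reads the input with the bidirectional mask, and its decoder generates output under the causal mask while also attending to the encoder's result.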

General‑Purpose Foundation LLMs

These are large, versatile models trained for broad tasks across domains. Examples include GPT, Gemini, Claude, Llama, Mistral, Qwen, Falcon, and others.

Domain‑Specialized LLMs

Some models are fine-tuned for specific industries or tasks such as coding, healthcare, finance, and legal analysis. Many LLMs are optimized for enterprise scenarios and specialized workflows.

Multimodal LLMs

These models process more than text. They support other modes such as images, audio, or video. Examples include Gemini and GPT‑4‑class models.

Open‑Source vs. Proprietary LLMs

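LLMs are also distinguished by how they are released: open-source models such as Llama, Falcon, and Mistral make their weights available for download, while proprietary models such as GPT, Claude, and Gemini are offered through hosted products and APIs.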

Small, Efficient LLMs (Small Language Models or SLMs)

These include compact models like Stable LM and Mistral 7B, designed for edge devices and local deployment.

Challenges and Limitations

Large language models have advanced rapidly, but they still face significant limitations.

Key issues include failures in reasoning, hallucinations, and limited multilingual capability, even as models grow larger and more sophisticated. These weaknesses stem from the fact that LLMs learn statistical patterns rather than true understanding, which means they can produce fluent text that is not always accurate or logically sound.

Another major challenge is the computational cost of training and running LLMs. By one widely cited estimate, training a model like GPT-3 would take about 355 GPU-years on a single GPU and cost several million dollars, putting such systems out of reach for most organizations. Even after training, deploying LLMs at scale demands substantial hardware, energy, and ongoing maintenance. This creates barriers to entry and raises environmental concerns.
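The GPU-year figure above can be roughly reproduced with back-of-the-envelope arithmetic. The sketch relies on several assumptions that do not come from this article: the rule of thumb that training takes about 6 floating-point operations per parameter per training token, a model of 175 billion parameters trained on 300 billion tokens, and a single GPU sustaining 28 trillion operations per second.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def training_gpu_years(n_params, n_tokens, flops_per_second):
    """Estimate single-GPU training time from the 6 * N * D rule of thumb."""
    total_flops = 6 * n_params * n_tokens
    return total_flops / flops_per_second / SECONDS_PER_YEAR


# Assumed GPT-3-scale inputs; all three numbers are illustrative.
years = training_gpu_years(
    n_params=175e9,         # model parameters
    n_tokens=300e9,         # training tokens
    flops_per_second=28e12  # sustained throughput of one GPU
)
print(f"about {years:.0f} GPU-years")
```

Under these assumptions the estimate comes out within a few percent of 355 GPU-years. In practice the work is spread across thousands of GPUs, which shortens the wall-clock time but not the total compute.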

LLMs also struggle with hallucinations, bias, and misinterpretation of prompts. Models can generate incorrect, nonsensical, or biased outputs because they rely on patterns in their training data rather than verified facts. These issues can lead to misinformation, unfair outcomes, or unreliable behavior in high-stakes settings like healthcare, law, or finance.

Evaluation itself is another limitation. Assessing LLM performance is difficult because benchmarks often fail to capture real-world complexity, and models may exploit shortcuts rather than demonstrating genuine reasoning. This makes it challenging to measure progress or ensure reliability across diverse tasks.

Finally, LLMs face generalization and robustness challenges. Even though they perform well on familiar patterns, they can break down when encountering ambiguous, adversarial, or out-of-distribution inputs. One review published by MDPI argues that these limitations call for new methods, safeguards, and hybrid systems to ensure safe and trustworthy deployment.

Links

Chatbots page.

Hallucinations and bias pages.

Prompt Engineering and Prompt Shortcuts.

External links:

- cloudflare.com/learning/ai/what-is-large-language-model/

- techtarget.com/whatis/definition/large-language-model-LLM

- elastic.co/what-is/large-language-models

- sap.com/resources/what-is-large-language-model

- aws.amazon.com/what-is/large-language-model/

- boost.ai/blog/llms-large-language-models/

- en.wikipedia.org/wiki/Large_language_model