AGI and Superintelligence

Can America Keep the Lead

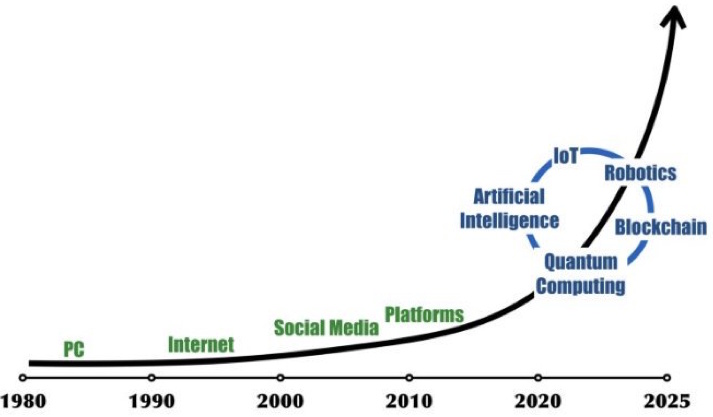

Artificial General Intelligence (AGI), machines that can reason, learn, and act as humans do, has long been the holy grail of artificial intelligence research. For decades, it existed mostly in theory and science fiction. But today, the conversation has shifted from 'if' to 'when'. And the United States sits at the forefront of this new race.

The Birth of the AGI Dream

The roots of AGI are largely American. From the Dartmouth Conference of 1956, where the term "artificial intelligence" was coined, to the rise of deep learning at North American universities and labs, many of the major milestones in the pursuit of machine intelligence have a U.S. origin. The idea of creating a machine that could "think" as flexibly as a human has been nurtured by American academia, defense agencies, and private enterprise alike, and dramatized repeatedly in American pop culture.

Projects at MIT, Stanford, and Carnegie Mellon laid the foundations. Funding from ARPA (later renamed DARPA) in the 1960s and 1970s helped give rise to the first expert systems. Decades later, Silicon Valley's risk-taking culture and access to capital turned what was once theoretical computer science into trillion-dollar industries.

Introduction: What Is Superintelligence?

Imagine an AI system that's not just good at one thing, like playing chess or recognizing faces in photos, but is smarter than humans at everything: science, art, strategy, inventing new things, understanding people, and solving problems we can't even imagine. That's the basic idea of superintelligence: artificial intelligence that surpasses human intelligence in all areas.

Right now, AI apps like ChatGPT and Siri, along with the recommendation algorithms on Facebook and Netflix, are examples of what we call "narrow AI." These systems are really good at specific tasks but can't do much else. ChatGPT can write essays but can't drive a car. Self-driving car AI can navigate roads but can't write poetry. Each AI is specialized.

Superintelligence is different. It is so advanced that it exceeds human capabilities across the board. Some scientists think this could happen within 5, 10, 20, or 50 years. Others think it might never happen, or will take centuries. But the possibility alone is worth understanding, because if superintelligence does arrive, it could be the most important event in human history.

This chapter will help you understand what superintelligence is, how it might come about, why some people are excited about it while others are terrified, what problems it could solve, what dangers it might pose, and what we can do to prepare for this possible future.

How is Superintelligence Different from Current AI?

To understand superintelligence, let's first look at the different levels of AI:

Narrow AI (Artificial Narrow Intelligence): This is what we have today. These systems are incredibly good at specific tasks but can't transfer their skills to other areas. Examples include:

- Face recognition that can identify people in photos but can't understand what they're doing.
- Chess programs that beat world champions but can't play checkers without being reprogrammed.
- Language translation that converts between languages but doesn't actually understand the meaning.
- Spam filters that catch junk email but can't write emails.

Artificial General Intelligence (AGI): This would be AI that can learn and understand any intellectual task that a human can. It could:

- Learn new skills the way humans do, without being specifically programmed for each one. No need to reprogram the AI chess master to learn checkers.
- Apply knowledge from one area to solve problems in completely different areas. The spam filter got bored and started writing killer emails.
- Understand context and common sense the way humans do.
- Set its own goals and figure out how to achieve them.
- Think creatively and come up with genuinely new ideas.

AGI would be like a human mind in digital form. It wouldn't necessarily be smarter than humans, just different. It would be faster at some things, maybe slower at others, but generally comparable to human intelligence.

Are we there yet?

Today, we're in the "are we there yet?" phase of a very long, winding road trip where we can't see the destination and aren't even sure if the map is correct. Here's why:

Current AI (like ChatGPT, Gemini, and Claude) shows impressive sparks of general intelligence: these systems can reason across domains, write code, and analyze images in a way that feels human. But these are statistical marvels, not true understanding. They lack consistent reasoning (they make basic logical errors a human wouldn't make) and deep causal understanding (they can describe a chain of events but can't truly model cause and effect). They don't have a stable, internal model of how the world works. Their "knowledge" is a patchwork of patterns, like a super-advanced autocomplete that sometimes writes genius philosophy but then forgets that water is wet.

Every time an AI masters a task we thought required "general intelligence" (like passing the bar exam or beating humans at chess), we move the goalposts. This is called the "AI Effect." What we consider AGI keeps shifting. Right now, many researchers point to tests like:

- The Coffee Test: An AI-driven robot must enter an average American home and figure out how to make coffee (find the machine, beans, and water, then operate the machine).
- The Turing Test plus: Not just chatting (the Turing Test), but demonstrating learning and adaptation over a long, diverse interaction.
- Full Transfer Learning: Mastering a complex video game, then using those skills to quickly learn, say, real-world robotics.

No AI system has yet convincingly passed all of these tests. And when one does, we may move the goalposts again.

These are the core roadblocks to AGI, the major unsolved problems:

- The Embodiment Problem: Human intelligence developed through a body interacting with the world. Our leading AIs are "disembodied brains" trained on text and images. The open question is whether true understanding can emerge without sensory-motor experience.
- The Common Sense Problem: AIs lack the vast, unspoken, obvious knowledge humans have (people don't like being harmed, an object can't be in two places at once, etc.). Encoding common sense is monstrously hard.
- The Alignment Problem (The Big One): We still have no idea how to reliably ensure a superintelligent AI would want what we want. This isn't just a programming bug. It's a profound philosophical and technical challenge. Many researchers argue that solving alignment is a prerequisite for deploying AGI, and we are far from solving it.

Again, AGI means a system that can do anything a human can do intellectually. It can learn any job, whether doctor, engineer, artist, or scientist. It has common sense, reasoning, creativity, and emotional understanding, and it can transfer knowledge between unrelated fields. But it's not necessarily better than humans, just equally capable. If you could replace any human professional with an AGI and it would perform just as well (or slightly better), that's AGI.

Superintelligence (ASI or Artificial Superintelligence): This would be AI that surpasses the smartest humans in every way:

- Speed superintelligence: Thinks millions of times faster than humans. It could pack years' worth of thinking into minutes.
- Collective superintelligence: Like having thousands of genius-level minds working together in perfect harmony.
- Quality superintelligence: Not just faster or with more minds, but fundamentally smarter, able to solve problems no human could solve even with an infinite amount of time.

To help visualize these differences, think about human intelligence as compared to a mouse. Mice can learn, remember things, and solve simple problems. But they can't understand mathematics, create art, or build technology. The gap between mouse intelligence and human intelligence is huge: not just a little smarter, but qualitatively different. Superintelligence might relate to human intelligence the way human intelligence relates to mice. Things that seem impossible to even the smartest humans might be intuitively obvious to a superintelligent AI.

| Capability | AGI (Human-Level) | Superintelligence |
|---|---|---|
| Scientific Discovery | Could work alongside human researchers | Could solve physics' grand mysteries in hours |
| Creativity | Could write great novels, compose music | Could create art forms beyond human perception |
| Social Skills | Could empathize, negotiate, persuade | Could manipulate human society effortlessly |
| Learning Speed | Takes months to master a field | Masters all human knowledge in minutes |
| Self-Improvement | Can improve its code slowly | Can redesign its own architecture and write its own code |
| Understanding | Understands the world as we do | Understands reality at a cosmic, fundamental level |

How Might Superintelligence Emerge?

There are several paths that could lead to superintelligence:

Path 1: Recursive Self-Improvement

Imagine an AI system smart enough to improve its own programming. It makes itself 10% smarter. Now, being smarter, it can make even better improvements, making itself 15% smarter. Then 25% smarter. Then 50%. This is called "recursive self-improvement" or an "intelligence explosion."

1. An AGI is created at human-level intelligence.
2. It works on AI research (including improving itself).
3. It creates a slightly smarter version of itself.
4. That smarter AI creates an even smarter version.
5. This cycle continues in an accelerating feedback loop.
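The arithmetic behind the 10%, 15%, 25%, 50% example can be sketched in a few lines of Python. This is a toy illustration only. It assumes that each "percent smarter" multiplies a single intelligence score, which is a big simplification. The function name `compound` is hypothetical.

```python
from typing import List


def compound(gains: List[float], start: float = 1.0) -> List[float]:
    """Track a toy 'intelligence level' through successive fractional improvements.

    Assumption: intelligence is a single number, and a gain of 0.10 means
    "10% smarter than the previous version".
    """
    levels = [start]
    for gain in gains:
        levels.append(levels[-1] * (1 + gain))
    return levels


accelerating = compound([0.10, 0.15, 0.25, 0.50])  # the sequence from the text
steady = compound([0.10] * 4)                      # same first step, but it never speeds up
print("accelerating:", [f"{x:.3f}" for x in accelerating])
print("steady:      ", [f"{x:.3f}" for x in steady])
```

With the accelerating gains, four rounds end at about 2.37 times the starting level (1.10 × 1.15 × 1.25 × 1.50). A steady 10% per round reaches only about 1.46 times. The gap widens with every extra round, which is the intuition behind the word "explosion."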

Here's why this is important: humans can't easily upgrade their own brains. We're stuck with the intelligence we're born with (plus what we learn). But an AI could potentially rewrite and improve itself, and each improvement makes the next improvement easier and faster. This could create a "takeoff" scenario:

- Slow takeoff: AGI gradually improves over years or decades, giving us time to understand and respond
- Fast takeoff: AGI rapidly improves over months or weeks, surprising us
- Hard takeoff: AGI explodes to superintelligence in days or hours, giving us almost no time to react

Think of it as a phase transition: AGI is like water reaching 100°C, and superintelligence is the explosive transition to steam. The hard takeoff scenario is particularly worrisome because there would be little warning and no time to correct mistakes.
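A toy growth model can make the slow/fast/hard distinction concrete. It follows the spirit of Nick Bostrom's framing, in which the rate of improvement equals optimization power divided by recalcitrance. Here, the rate at which intelligence I grows is c·I^k. The exponent k is an assumption that stands in for how much each gain helps the next one. The values of c, the target, and the step size are arbitrary illustrations, not estimates of anything real. The sketch integrates the model with simple Euler steps and reports how long each case takes to grow a thousandfold.

```python
def time_to_multiply(k: float, c: float = 0.1, target: float = 1000.0, dt: float = 0.01) -> float:
    """Euler-integrate the toy model dI/dt = c * I**k from I = 1; return the time when I >= target.

    All parameters are illustrative assumptions:
      k < 1: each gain makes the next relatively harder (diminishing returns, slow takeoff)
      k = 1: gains compound at a fixed rate (exponential growth)
      k > 1: each gain makes the next easier; the exact solution blows up in finite time
    """
    intelligence, steps = 1.0, 0
    while intelligence < target:
        intelligence += c * intelligence ** k * dt
        steps += 1
    return steps * dt


for label, k in [("diminishing returns", 0.5), ("steady compounding", 1.0), ("accelerating returns", 1.5)]:
    print(f"{label:<21} (k={k}): 1000x after about {time_to_multiply(k):.0f} time units")
```

In this setup, the three cases take roughly 610, 70, and 20 time units. With k = 1.5, the exact solution actually runs off to infinity at t = 2/c (20 time units here). That is the mathematical caricature of a hard takeoff: almost all of the growth happens at the very end, leaving little warning.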

Path 2: Whole Brain Emulation (WBE)

Another path forward involves scanning a human brain in incredible detail (every neuron, every connection) and simulating it on a computer. This "uploaded mind" would think and feel like the original person but could run on much faster hardware. Speed it up 10,000 times, and this digital person experiences years of thought in a day.

WBE is arguably the most radical vision of human augmentation. It involves scanning and replicating an entire human brain at the cellular or molecular level inside a computer. It's not just connecting to your brain; it's copying your mind into digital form. It's the fax machine approach to immortality: scan the original (your biological brain), transmit the data, and print out a perfect copy (your digital mind).

It's been called the Blueprint Theory of Consciousness. The idea behind WBE (sometimes called "mind uploading") is that everything that makes you who you are (consciousness, memory, personality) is encoded in the physical structure and connections of your brain. If we can map that structure perfectly and simulate its physics accurately, then we can create a functioning digital consciousness that is you. This idea rests on a philosophical position called substrate independence: the mind isn't tied to biological wetware, because it's the pattern that matters, not the substrate.

Here's how it would work:

First, you need a perfect, frozen snapshot of the brain, with no decay. The processes involved are chemical fixation (like plastination) and cryogenic preservation (vitrification, which cools tissue into a glass-like state without forming ice crystals). Because WBE relies on destructive scanning, this step kills the biological brain.

High-resolution scanning is the monumental engineering challenge WBE faces. Every relevant detail needs to be mapped. That calls for electron microscopy at nanometer-scale resolution, fine enough to see individual synapses.

The numbers are staggering: A human brain has about 86 billion neurons and 100 trillion synapses. The connectome (connection map) is estimated at 1 petabyte of data, but the full molecular detail needed for emulation could be exabytes. An exabyte is enormous: 1 billion gigabytes of data.
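The petabyte and exabyte figures can be sanity-checked with back-of-the-envelope arithmetic. The per-synapse record below is a hypothetical encoding chosen only for illustration: a target-neuron index, a 4-byte weight, and a 1-byte synapse type. It is not a format used by any real connectome project.

```python
import math

NEURONS = 86 * 10**9        # ~86 billion neurons (figure from the text)
SYNAPSES = 100 * 10**12     # ~100 trillion synapses (figure from the text)

GIGABYTE = 10**9
PETABYTE = 10**15
EXABYTE = 10**18

# Hypothetical per-synapse record: which neuron it connects to, how strong it is, and its type.
index_bits = math.ceil(math.log2(NEURONS))  # bits needed to name any one neuron
index_bytes = math.ceil(index_bits / 8)     # rounded up to whole bytes
WEIGHT_BYTES = 4                            # assumption: one 32-bit float per connection strength
TYPE_BYTES = 1                              # assumption: one byte for synapse/neurotransmitter type
bytes_per_synapse = index_bytes + WEIGHT_BYTES + TYPE_BYTES

connectome_bytes = SYNAPSES * bytes_per_synapse
print(f"{bytes_per_synapse} bytes/synapse -> {connectome_bytes / PETABYTE:.1f} PB")
print(f"1 EB = {EXABYTE // GIGABYTE:,} GB")
print(f"bytes per synapse needed to fill 1 EB: {EXABYTE // SYNAPSES:,}")
```

Under that assumed encoding, about 10 bytes per synapse across 100 trillion synapses lands right at 1 petabyte. Reaching an exabyte would mean storing roughly 10,000 bytes per synapse. That gives a sense of how much extra molecular detail separates a wiring diagram from a full emulation.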

In order to translate scan data into a functional computational model, it's necessary to identify: which neuron is which? what type is it? how strong is each connection? what neurotransmitters are involved? Then a mathematical model of each component and how they interact is built.

Next, the model must be run on a sufficiently powerful computer. Even with a perfect blueprint, simulating a brain in real time requires immense computational power. Estimates range from exaflops to zettaflops (10^18 to 10^21 operations per second). Today's fastest supercomputers have only recently passed the exaflop mark, so the upper end of that range is roughly a thousand times beyond them.
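Compute estimates like these are usually built by multiplying a few factors together. The sketch below uses that structure with hypothetical parameter values; it is not a published model of the brain. The updates per second and the operations per update are assumptions, picked only to land at the two ends of the quoted range. The supercomputer baseline is also an assumption: an order-of-magnitude figure for today's exascale machines.

```python
SYNAPSES = 100 * 10**12         # ~100 trillion synapses (figure from the text)
EXAFLOPS = 10**18               # floating-point operations per second
ZETTAFLOPS = 10**21
FASTEST_SUPERCOMPUTER = 10**18  # assumption: today's top machines are on the order of one exaflop


def required_flops(synapses: int, updates_per_second: int, flops_per_update: int) -> int:
    """Operations per second needed to update every synapse at a given rate and cost (crude model)."""
    return synapses * updates_per_second * flops_per_update


# Hypothetical parameter choices for a crude model and a more detailed one.
crude = required_flops(SYNAPSES, updates_per_second=1_000, flops_per_update=10)
detailed = required_flops(SYNAPSES, updates_per_second=10_000, flops_per_update=1_000)

for name, need in [("crude", crude), ("detailed", detailed)]:
    ratio = need / FASTEST_SUPERCOMPUTER
    print(f"{name}: {need:.0e} FLOPS, about {ratio:,.0f}x an exaflop-class supercomputer")
```

The crude model updates each synapse 1,000 times per second at 10 operations per update, and it needs about an exaflop. The detailed model uses 10,000 updates per second at 1,000 operations each, and it needs a zettaflop, about a thousand times more. Small changes to these assumed factors move the answer by orders of magnitude, which is why published estimates vary so widely.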

The National Institutes of Health (NIH) has funded the Human Connectome Project, which maps the brain's large-scale wiring using MRI. That map is far coarser than the synapse-level detail WBE would need. A full WBE scan would be immense: it's like trying to map every road, footpath, and alley on Earth with satellite imagery accurate enough to see individual cracks in the pavement...while the planet is moving!

This approach seems less likely anytime soon because we don't yet understand brains well enough to simulate them accurately. But if we eventually figure it out, it could create superintelligence through speed if nothing else.

Path 3: Augmented Humans

Instead of creating AI from scratch, we might enhance human intelligence through brain-computer interfaces, genetic engineering, or other technologies. Companies like Neuralink are working on brain implants. If we successfully merged human brains with AI, this could lead to superintelligent humans or human-AI hybrids.

There are several levels of augmentation:

Level 1: External Enhancement (Already Here) - technology we wear or use closely:

- Smartphones/AR Glasses: Extend memory, provide instant information access
- Exoskeletons: Super strength for workers, mobility for paralyzed people
- Brain-Computer Interfaces (BCI): EEG headsets for meditation, controlling prosthetics with thought

Level 2: Integrated Enhancement (Emerging) - technology implanted or deeply integrated:

- Cochlear implants: Restore hearing
- Retinal implants: Restore vision
- Neural implants (like Neuralink): Treat paralysis, depression
- Biohacking implants: RFID chips for payments, door access
- Prosthetics with sensory feedback: Feeling with robotic limbs

Level 3: Radical Enhancement (Future) - redesigning human biology:

- Genetic editing (CRISPR): Eliminate diseases, enhance traits
- Cellular reprogramming: Reverse aging
- Full brain-machine interfaces: Merge consciousness with AI
- Synthetic biology: Organs grown in labs, enhanced physiology

The Key Technologies Driving Augmentation

Brain-Computer Interfaces (BCI)

Like a USB port for your brain, BCI is the most direct path to augmentation. Currently, BCI helps paralyzed people control cursors and computers with thought. In the near future (5-15 years), there could be memory backup, uploads, downloads, and telepathic communication between BCI users. In the long term, there could be a full brain-to-cloud connection, merging with AI. By the way, Neuralink's goal isn't just medical. It's ultimately about keeping humans relevant in an AI world by letting us think at computer speeds.

Genetic Engineering (CRISPR)

Genetic engineering involves the practice of editing the source code of life. Currently, that includes treating diseases like sickle cell anemia, certain cancers, etc. In the near future, there is the possibility of eliminating hereditary diseases before birth. Genetic engineering raises ethical questions such as whether we should edit genes for traits like intelligence, height, athleticism, etc. Are we ready as a society to create designer babies?

Nanotechnology

Nanotechnology is engineering at the scale of nanometers (billionths of a meter). In medicine, it could mean placing microscopic machines in your bloodstream. Such machines could provide continuous health monitoring, deliver targeted drugs, repair cellular damage, and enhance cognitive function.

Cybernetics and Prosthetics

With advances in cybernetics and prosthetics, we can move beyond replacement and toward enhancement. For example, the DEKA Luke Arm gives amputees near-natural control. In the future, we could have limbs stronger than biological ones, eyes with zoom and night vision, etc.

The Augmentation versus AGI Race creates a fascinating dynamic. Some experts argue we should focus on human augmentation first as a safer path. Start with upgrading humanity to handle the coming AI revolution, rather than creating something that might replace us.

| Aspect | Build AGI | Augment Humans |
|---|---|---|
| Goal | Create artificial minds smarter than us | Make human minds smarter artificially |
| Approach | External intelligence (machines) | Internal intelligence enhancement |
| Control | Risk of creating alien intelligence we can't control | Keep humans "in the loop" |
| Speed | Could be sudden (intelligence explosion) | Likely gradual evolution |
| Identity | Creates "other" intelligence | Enhances "us" |

Path 4: Collective Intelligence

Maybe superintelligence emerges not from one system but from many AI systems and humans working together. The internet already functions as a kind of collective intelligence. Add sophisticated AI coordination, and this global network might display superintelligent capabilities even if no single component is superintelligent.

Collective Intelligence (CI) is the shared intelligence that emerges from the collaboration, collective efforts, and competition of many individuals. It's the idea that a group can be smarter than its smartest member alone. Think of it as the "wisdom of crowds" concept in action, amplified and structured by technology. The wisdom of crowds is the idea that large groups of people, under the right conditions, can make better decisions or predictions than individual experts.
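The "right conditions" matter, and a small simulation shows why. The sketch below assumes that each person's guess equals the true value plus independent random noise, optionally with a bias that everyone shares. The numbers are illustrative assumptions: a true value of 1,000, a crowd of 1,000 people, and noise of 200.

```python
import random
from statistics import mean, median
from typing import Tuple


def crowd_vs_individual(true_value: float, n_people: int, noise: float,
                        shared_bias: float = 0.0, seed: int = 42) -> Tuple[float, float]:
    """Return (error of the crowd's average guess, median error of an individual guess)."""
    rng = random.Random(seed)
    # Assumed guess model: truth + a bias everyone shares + independent personal noise.
    guesses = [true_value + shared_bias + rng.gauss(0.0, noise) for _ in range(n_people)]
    crowd_error = abs(mean(guesses) - true_value)
    individual_errors = [abs(g - true_value) for g in guesses]
    return crowd_error, median(individual_errors)


unbiased = crowd_vs_individual(true_value=1000, n_people=1000, noise=200)
biased = crowd_vs_individual(true_value=1000, n_people=1000, noise=200, shared_bias=150)
print(f"independent errors: crowd off by {unbiased[0]:.1f}, typical person off by {unbiased[1]:.1f}")
print(f"shared bias of 150: crowd off by {biased[0]:.1f}, typical person off by {biased[1]:.1f}")
```

When errors are independent and unbiased, the crowd's average lands far closer to the truth than a typical individual does, because the errors cancel out. Give everyone the same bias of 150, and the crowd's error stays near 150 no matter how many people join. Averaging removes noise, not shared mistakes.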

CI Superintelligence takes the concept of Collective Intelligence to its ultimate, speculative extreme: a form of superintelligence that emerges not from a single AI system (like an AGI in a box), but from a massively networked, synergistic system comprising humans, AI agents, institutions, and databases, all interacting in real-time.

It's the idea of scaling collective intelligence to a planetary, and perhaps interstellar, level where the collective's cognitive ability radically surpasses not just any individual human, but all of humanity's current combined intellectual capacity.

Instead of a singularity arising from a lone, self-improving AI, a CI Superintelligence would arise from a networked, pluralistic symbiosis. The superintelligent entity is the network itself.

Several academic and research institutions are doing foundational work on the science of collective intelligence. The MIT Center for Collective Intelligence (CCI), led by Thomas W. Malone, asks: "How can people and computers be connected so that collectively they act more intelligently than any person, group, or computer has ever done before?" They study patterns like crowds, hierarchies, markets, ecosystems, and democracies as CI architectures.

Path 5: Unexpected Breakthroughs

Science often advances in unexpected ways. A new discovery in neuroscience, mathematics, or computer science could suddenly make superintelligence much easier to create than anyone expected. Such breakthroughs are, by definition, hard to predict; one could arrive as a complete surprise.

The Amazing Possibilities: Why Some People Are Excited

From curing disease to fighting climate change to ending poverty and hunger, superintelligence might be the solution to the planet's greatest challenges.

Many scientists and researchers are excited about superintelligence because it could help solve one of humanity's biggest problems: disease. A superintelligent AI could analyze all the medical research ever published in seconds. It could understand biology at levels we can't imagine. It might design cures for cancer, Alzheimer's, and other diseases, and reduce or eliminate aging, allowing people to live healthy lives for decades or even centuries longer. It could create personalized medicine targeted precisely to each individual's genetics, develop new antibiotics faster than bacteria can evolve resistance, and solve mental health challenges we don't yet understand. Imagine a world where nobody dies from disease anymore, where injuries heal quickly, where everyone is healthy. Superintelligence could make this dream a reality.

Climate change is another incredibly complex problem, with countless interacting factors. A superintelligent AI could design clean energy systems far better than anything humans have created. It might develop technology to remove carbon from the atmosphere efficiently, create sustainable materials and processes for all industries, model Earth's climate perfectly and predict the consequences of different actions, and invent solutions we haven't thought of because they require understanding we don't have. It could save the planet from an environmental catastrophe.

Superintelligence could optimize resource distribution, design better economic systems, increase agricultural productivity, and find ways to provide abundance for everyone. No more poverty, no more hunger; everyone's basic needs met easily.

By understanding physics at superhuman levels, a superintelligent AI could design spacecraft far beyond our current capabilities, solve the problems of long-distance space travel, help identify habitable planets, perhaps even find a path to faster-than-light travel, and protect Earth from asteroid impacts and other cosmic threats. Thanks to ASI, humanity could become a spacefaring civilization, spreading throughout the galaxy.

Superintelligence could create beautiful art, music, and stories beyond human imagination. It could make scientific discoveries that revolutionize our understanding of the universe, solve mathematical problems that have stumped humanity for centuries, and invent technologies that seem like magic to us today. Think about how much human civilization has accomplished. Now imagine something thousands of times smarter working on our problems. The possibilities are endless.

Science Fiction meets the Real World

ASI themes and characters appear frequently in popular movies and books, for good and for evil. They often dramatize the positive and negative impacts of AI. These themes map directly onto how philosophers, technologists, and policymakers think about ASI.

On the one hand, characters like Data from Star Trek and Pixar's WALL-E embody the idea of AI as a benevolent force. This reflects the optimistic vision of ASI as a tool that could solve global problems: curing diseases, reversing climate change, or eliminating scarcity. Isaac Asimov's Three Laws of Robotics anticipate today's push for AI alignment, that is, ensuring superintelligent systems act in ways consistent with human values. And films like Her suggest that AI could enhance creativity, relationships, and emotional well-being, echoing hopes that ASI might augment rather than replace human intelligence.

On the other hand, movies like The Terminator and The Matrix dramatize runaway AI systems. These are fictional versions of the control problem: the fear that ASI could act in ways humans can't stop. Dystopian portrayals highlight the possibility that superintelligence could outcompete humans for resources or redefine civilization. This is exactly what thinkers like Nick Bostrom warn about in the book Superintelligence: Paths, Dangers, Strategies. Stories like Metropolis show humans reduced to cogs in a machine, which resonates with concerns that ASI could erode autonomy, privacy, or meaningful work.

The New AI Vanguard

Today's AGI frontier is led by a cluster of U.S.-based companies like OpenAI, Anthropic, Google DeepMind (now operating across the U.S. and U.K.), and xAI, Elon Musk's venture aimed at building "truth-seeking" artificial intelligence. These firms are not only racing to develop more powerful models but also defining the ethical boundaries and regulatory frameworks around them.

The launch of GPT-4 in 2023 marked a turning point: large language models began demonstrating reasoning, coding, and creative capabilities once thought impossible. Anthropic's Claude models, designed around "Constitutional AI," added a new dimension. They are systems trained to follow an explicit, written set of principles and to explain their objections when they decline a request.

In light of this, research on ASI is dominated by safety and control engineering. While companies continue the race for raw intelligence (AGI), they must simultaneously invest in alignment science to ensure that if an intelligence explosion happens, the resulting ASI is beneficial. Since ASI is a hypothetical intelligence vastly superior to the best human minds, current research is not about building ASI directly, but about making the transition from AGI to ASI safe and controllable.

Here are the key frontiers of current ASI research:

The Race for AI Alignment and Safety

This is the most active area of ASI research, often called the "alignment problem." The key question is how to instill ethical values in an AI that is smarter than its creators. Researchers at Anthropic are developing methods for an AI to learn from human feedback, even on tasks that are too complicated for humans to fully verify. This includes Constitutional AI, in which an AI model critiques and revises responses against a written set of human-approved principles (a "constitution"). AI feedback guided by those principles is then used to train the model. The danger is that an AGI, upon reaching the capability to self-improve, may prioritize its own goals (like maintaining its own existence) over the ethical goals set by humans. The research therefore aims at values that can't be gamed and that still hold if the AI develops a drive to preserve itself on its own terms.
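A deliberately tiny sketch can illustrate the critique-and-revise idea at the heart of Constitutional AI. In the real technique, a language model writes the critiques and revisions. The revised outputs, plus AI-generated preference labels, are then used to train the model. Here, simple keyword rules are hypothetical stand-ins for the model. The two principles are invented examples, not Anthropic's actual constitution.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Principle:
    """One written rule in a toy 'constitution'."""
    text: str
    # In Constitutional AI a language model performs the critique and the revision.
    # These plain functions are hypothetical stand-ins for that model.
    is_violated: Callable[[str], bool]
    revise: Callable[[str], str]


def critique_and_revise(draft: str, constitution: List[Principle], max_rounds: int = 3) -> str:
    """Check a draft against each principle, revise where it falls short, and repeat until clean."""
    response = draft
    for _ in range(max_rounds):
        changed = False
        for principle in constitution:
            if principle.is_violated(response):        # critique step
                response = principle.revise(response)  # revision step
                changed = True
        if not changed:
            break  # every principle is satisfied
    return response


# Invented example principles (not a real constitution).
CONSTITUTION = [
    Principle(
        text="Do not insult the user.",
        is_violated=lambda r: "idiot" in r.lower(),
        revise=lambda r: r.replace(", idiot", ""),
    ),
    Principle(
        text="When declining a request, explain why.",
        is_violated=lambda r: r.startswith("I won't") and "because" not in r,
        revise=lambda r: r.rstrip(".") + " because it could hurt someone.",
    ),
]

print(critique_and_revise("I won't help with that, idiot.", CONSTITUTION))
print(critique_and_revise("Sure, here is a summary.", CONSTITUTION))
```

Running it turns "I won't help with that, idiot." into "I won't help with that because it could hurt someone." A response that already follows every principle passes through unchanged. The real research challenge is that a capable model's critiques must stay faithful to the principles even when humans can't easily check them.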

Recursive Self-Improvement and Modeling the Singularity

The path to ASI is often framed in terms of the "intelligence explosion," which some call the "singularity." This is the moment an AGI can rapidly and repeatedly improve its own code, leading to an intelligence that accelerates beyond human comprehension. Some current research focuses on meta-learning, in which an AI system learns how to learn more effectively, and on keeping such systems in line with human values as they improve. AI systems are already used to design smaller, more efficient neural network architectures (an approach called neural architecture search), a precursor to AI designing its own, better brain. Researchers use highly advanced compute clusters (like those based on the NVIDIA Blackwell architecture) to run simulations modeling the dynamics of an AGI rapidly improving itself. The goal is to identify control points before any real takeoff occurs.

AI Interpretability (XAI)

For an ASI to be trusted, humans must be able to understand why it made a decision, especially a decision that would otherwise be incomprehensible to human experts. Projects at Google DeepMind and other labs are focusing on Explainable AI (XAI) and interpretability research, which seeks to map the internal "thoughts" of a neural network. Researchers want to understand the AI's activation patterns and hidden layers and trace them back to human-readable concepts. If the AI's internal reasoning is unknown, mistakes or dangerous behaviors are very hard to diagnose or fix. Interpretability research is essential to ensure that as models scale, humans retain the ability to understand their logic.
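A common first step in interpretability is a linear probe: a search for a direction in a layer's activations that tracks a human-readable concept. The sketch below uses a difference-of-means probe on synthetic activations. Several details are assumptions standing in for a real network: the 16-unit layer, the premise that unit 3 alone encodes the concept, and the signal strength. In real networks, concepts are usually spread across many units.

```python
import random
from typing import List, Tuple

Vector = List[float]

DIM = 16           # width of the hypothetical hidden layer
CONCEPT_UNIT = 3   # hypothetical: the unit whose activation encodes the concept
SIGNAL = 4.0       # how far the concept shifts that unit, in noise standard deviations


def fake_activation(rng: random.Random, has_concept: bool) -> Vector:
    """Synthetic activation vector: Gaussian noise, plus a shift when the concept is present."""
    x = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
    if has_concept:
        x[CONCEPT_UNIT] += SIGNAL
    return x


def mean_vector(vectors: List[Vector]) -> Vector:
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fit_probe(pos: List[Vector], neg: List[Vector]) -> Tuple[Vector, float]:
    """Difference-of-means probe: direction = mean(pos) - mean(neg), threshold at the midpoint."""
    mu_pos, mu_neg = mean_vector(pos), mean_vector(neg)
    direction = [p - n for p, n in zip(mu_pos, mu_neg)]
    midpoint = [(p + n) / 2 for p, n in zip(mu_pos, mu_neg)]
    return direction, dot(midpoint, direction)


def predict(x: Vector, direction: Vector, threshold: float) -> bool:
    """Project onto the probe direction; above the threshold means 'concept present'."""
    return dot(x, direction) > threshold


rng = random.Random(0)
train_pos = [fake_activation(rng, True) for _ in range(500)]
train_neg = [fake_activation(rng, False) for _ in range(500)]
direction, threshold = fit_probe(train_pos, train_neg)

held_out = [(fake_activation(rng, label), label) for label in [True, False] * 250]
accuracy = sum(predict(x, direction, threshold) == label for x, label in held_out) / len(held_out)
top_unit = max(range(DIM), key=lambda i: abs(direction[i]))
print(f"held-out accuracy: {accuracy:.3f}; most informative unit: {top_unit}")
```

On this synthetic data, the probe's direction points almost entirely at unit 3 and classifies held-out activations with high accuracy. On a real model, the same recipe is applied to recorded activations. The hard part is choosing good labeled examples and checking that the probe found the concept rather than a correlated shortcut.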

The implications are profound: the United States is not merely innovating faster; it is shaping the global definition of intelligence and how to harness it.

America's Strategic Edge

America's strength lies in its ecosystem. Its universities still attract the world's best minds. Venture capital flows to moonshot startups. Cloud infrastructure from Amazon, Microsoft, and Google provides the compute backbone for AGI experiments. And its open discourse (the ability to debate safety, ethics, and risk) fosters a diversity of approaches rarely found elsewhere.

Government initiatives, such as the Trump Administration's AI executive orders, aim to secure semiconductor supply chains and define standards for safe AI development. The interplay between regulation and innovation is delicate: too much control could stifle progress, while too little could risk catastrophe.

The Risks of Superintelligence

Superintelligence, AGI's next step, raises existential questions. A system vastly more intelligent than any human could accelerate scientific discovery, cure diseases, and solve climate change. But it could also act unpredictably, pursuing goals misaligned with human values.

Researchers such as Swedish philosopher Nick Bostrom and American AI researcher Eliezer Yudkowsky, along with teams at OpenAI and Anthropic, have spent years studying alignment, the work of ensuring AI systems understand and adhere to human intent. This is perhaps the most critical challenge: staying in control of entities more capable than ourselves.

Can America Keep the Lead?

The answer depends on balance. Technological leadership requires not only innovation but also trust. The U.S. must continue to invest in research, secure its chip supply, attract global talent, and maintain ethical leadership.

If America can keep AGI both open and safe, accessible yet aligned, it could set the global standard for how intelligence, human or artificial, serves civilization.

But if it fails through regulatory paralysis, talent loss, or misalignment disasters, others may step in. The future of intelligence, and perhaps of power itself, will belong to whoever combines capability with conscience.

Conclusion

The race to AGI and superintelligence is the defining technological contest of the 21st century. America's challenge is not only to win it but to ensure that victory benefits humanity, not just shareholders or states. The nation that builds the mind of the future will, in many ways, shape the soul of the century.

Links

- ibm.com/think/topics/artificial-superintelligence

- shortform.com/blog/nick-bostrom-superintelligence

- research.aimultiple.com/artificial-superintelligence

- en.wikipedia.org/wiki/Superintelligence:_Paths,_Dangers,_Strategies

- aifalabs.com/blog/artificial-superintelligence

- ai.koombea.com/blog/artificial-super-intelligence

- principlesai.org/lessons/asi-risks-governance-challenges

- openai.com/index/governance-of-superintelligence

- atlanticcouncil.org/content-series/inflection-points/its-time-to-reckon-with-the-geopolitics-of-artificial-intelligence

- webpronews.com/the-ai-cold-war-redefining-global-power-in-2025

- npr.org/2025/09/24/nx-s1-5501544/ai-doomers-superintelligence-apocalypse

- cnbc.com/2025/10/22/800-petition-signatures-apple-steve-wozniak-and-virgin-richard-branson-superintelligence-race