Elon Musk

From Prophet to Antagonist

Elon Musk stands before an audience of engineers and students at the MIT Aeronautics and Astronautics symposium. It’s October 2014 and MIT is celebrating the department's centennial. Nine astronauts are in the audience. Musk has been invited to discuss SpaceX, electric vehicles, and the future of transportation. First, he touches on a different subject:

"I think we should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it's probably that... With artificial intelligence, we are summoning the demon."

Those listening in the room shift uncomfortably in their seats. This is MIT. They build AI here. Astronauts are here. Musk continues:

"I'm increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don't do something very foolish."

He's the CEO of Tesla and SpaceX, a man building electric cars and rockets destined for Mars. But he's terrified of artificial intelligence. And he says it at MIT during their centennial celebration.

The tech world largely dismisses him. AI researchers roll their eyes. "He doesn't understand the field," they mutter. "He's fearmongering." But Musk isn't finished. He doubles down, tweets warnings, invests in AI safety research. He compares AI development to "summoning the demon" over and over again. It’s a contradiction. Even as he warns about AI, he's building AI. He cannot resist the revolutionary force he fears.

That tension in Elon Musk's persona, between safety advocate and AI builder, is one of the defining paradoxes of his role in the AI story. It is the pattern that will define Musk's relationship with AI: simultaneous terror and obsession, prophecy and participation, warning and acceleration.

The Awakening and the Warning

(2012-2015)

The Friendship

The story actually begins two years earlier in London, when Musk befriends Demis Hassabis, the neuroscientist and AI researcher who co-founded DeepMind. Over dinner, Hassabis shows Musk what DeepMind is building: AI that learns to play games and improves itself through reinforcement learning.

Musk is both fascinated and disturbed. The technology is more advanced than he realized. The trajectory is clear and most people aren't paying attention.

Musk isn't the only one watching. Two years after the meeting, Google acquires DeepMind for a reported $500 million. Musk is reportedly upset that Google now controls cutting-edge AI research. Larry Page, Google's co-founder, is a friend, but Musk doesn't trust Google's commitment to AI safety. The acquisition crystallizes Musk's fear that AI development is accelerating, concentrated in a few companies, with insufficient attention to the existential risks.

The Public Campaign

Musk goes public with his concerns:

- August 2014: He tweets, "We need to be super careful with AI. Potentially more dangerous than nukes."

- October 2014: The MIT speech. "Summoning the demon."

- January 2015: He donates $10 million to the Future of Life Institute for AI safety research.

- June 2015: He warns that AI could be more dangerous than nuclear weapons and calls for proactive regulation.

The tech establishment pushes back. Andrew Ng (then at Baidu) likens worrying about evil AI to worrying about overpopulation on Mars. Yann LeCun (Facebook) calls the fears overblown and sensationalist. Many AI researchers contend that Musk doesn't understand the field. Current AI, they argue, is narrow, not dangerous, and they have little fear for the future.

The irony is that Musk is warning about a technology whose own engineers think he is overly concerned. They're building the thing he fears, and they are certain of its safety. Musk's position is that they're brilliant at building AI yet naive about its implications: someone needs to ensure that when we build superintelligence, it's aligned with human values. His critics contend that we're nowhere near superintelligence, and that such warnings are premature, distract from real issues, and might restrict beneficial AI research.

Who's right? A decade later, the debate remains unsettled. And perhaps it always will be.

The Dinner Table

In July 2015 Musk hosts a dinner at a private event in Napa Valley. Attendees include some of Silicon Valley's elite. The topic is what to do about AI safety.

Among those present is Sam Altman, then president of Y Combinator. Google co-founder Larry Page is there too, and argues that AI should be open, free, and beneficial to all. Musk argues that we need safeguards, controls, and alignment research.

The disagreement is fundamental. Page's argument, according to Musk, is that AI should be developed openly and shared freely, that regulation would slow progress, and that we should trust more intelligence to yield better outcomes. AI has the potential to benefit humanity in so many ways.

Musk, on the other hand, argues that unconstrained AI development by profit-driven corporations could lead to catastrophe. We need a counterweight. We need an AI research organization committed to safety and broadly beneficial outcomes. From this disagreement, a decision emerges: If you can't trust others to develop AI safely, then go build it yourself.

OpenAI: The Idealistic Beginning

(2015-2018)

The Founding

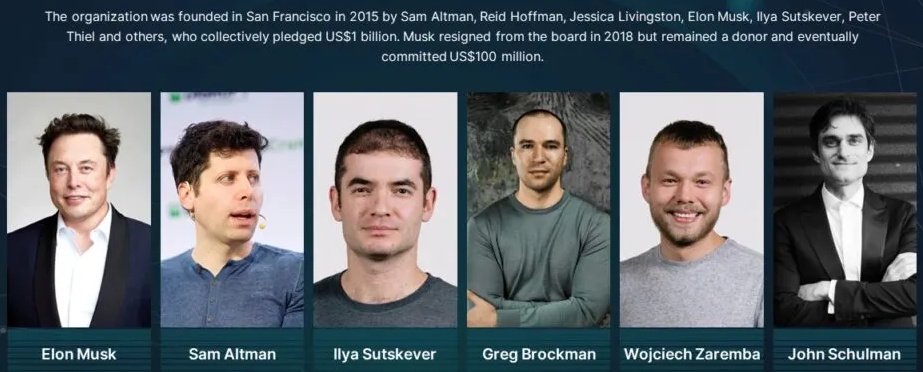

OpenAI is announced to the world on December 11, 2015. The founders are Elon Musk, Sam Altman, Ilya Sutskever, Greg Brockman, Wojciech Zaremba, John Schulman, and others.

It is organized as a non-profit research organization. There are no shareholders and there is no profit motive. The mission is to "Build safe AGI and ensure AGI's benefits are as widely and evenly distributed as possible."

Musk and other backers pledge $1 billion in funding, to be distributed over time.

Their philosophy is simple: do open-source research, publish findings freely, and collaborate with the world. This is the antidote to secretive, corporate AI labs.

Their founding statement reads like a manifesto:

"Since our research is free from financial obligations, we can better focus on a positive human impact... We believe AI should be an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as is possible safely."

This is revolutionary idealism:

- Non-profit structure in a for-profit world

- Openness in an increasingly secretive field

- Safety-first in a race-to-market environment

- Democratic access in a technology that could concentrate power

Musk is a major funder, board member, and active participant. He recruits researchers, provides Tesla resources (GPUs for training), and engages deeply with the technical direction of the organization.

The Early Years: Research and Openness

For the first two years, OpenAI publishes research openly, including reinforcement learning algorithms and generative models. It releases OpenAI Gym, a toolkit of environments for training AI agents, and pursues work on AI safety and alignment.

True to their mission, papers are released publicly, code is open-sourced, and they collaborate with academia.

In 2017, OpenAI's AI beats professional Dota 2 players in 1v1 matches. It's an impressive but narrow victory. The culture at OpenAI is idealistic, research-focused, and safety-conscious. The team genuinely believes they're building a better path forward for AI development.

At this point, Musk is actively involved with OpenAI though he is being pulled in other directions by his additional responsibilities. He's running Tesla (ramping up Model 3 production), SpaceX (developing Falcon Heavy), and the Boring Company, as well as trying to guide OpenAI's direction.

The Growing Tension

Building cutting-edge AI requires enormous computational resources. Training costs are exploding:

- 2015: Modest GPU clusters, thousands of dollars per training run

- 2017: Millions of dollars per frontier model

- Projections: Billions will be needed

OpenAI's $1 billion pledge isn't deployed all at once; it's spread out over time. Meanwhile, DeepMind is proceeding, backed by the enormous resources of Google. Other well-funded corporate labs are spending aggressively, too.

Top AI researchers can command salaries of $500,000. Non-profits struggle to compete with tech giants that can offer millions in compensation.

How can a non-profit, no matter how well-funded, compete with Google, Microsoft, Facebook, and Amazon?

The Divergence

In February 2018 Musk leaves OpenAI's board. The stated reason is to avoid conflicts of interest with Tesla's AI development for autonomous driving. Reported tensions include:

- Control: Musk wanted more direct control over OpenAI's direction. Other board members resisted.

- Speed: Musk thought OpenAI was moving too slowly, falling behind DeepMind.

- Strategy: Disagreement about whether to remain fully open or become more protective of research.

Musk later says he proposed that Tesla acquire OpenAI to provide the resources needed to compete. The proposal was rejected. He was frustrated by what he saw as lack of progress. Musk leaves the board but reportedly remains a donor.

This moment is the fracture between Musk and OpenAI. Musk exits the organization he co-founded, with a mission he championed, because he cannot align it with his vision. The revolutionary project begins its transformation, and Musk begins his journey from insider to critic and eventually to competitor.

OpenAI's Metamorphosis

(2019-2022)

The Capitalist Turn

In March 2019 OpenAI makes a dramatic change by creating "OpenAI LP," a capped-profit company.

The company is still governed by a non-profit board, but it can now raise capital and compensate employees competitively, with investor returns capped at 100 times their investment. At best, OpenAI is now quasi-non-profit.

The rationale is that building AGI requires billions, and a purely non-profit structure makes raising that capital impossible. The hybrid model is meant to preserve the mission while giving the company access to the necessary resources. In practice, OpenAI is becoming a conventional tech company with unusual governance.

Musk is critical of the new direction. This isn't what he signed up for. The mission is being compromised.

The Microsoft Partnership

In July 2019 Microsoft invests $1 billion in OpenAI.

Under the agreement, OpenAI runs its computing on Microsoft Azure, and Microsoft becomes its preferred partner for commercializing the technology.

In January 2023, Microsoft commits a reported $10 billion more. Together with an interim round in 2021, that brings its total investment to a reported $13 billion.

OpenAI, founded as an open alternative to corporate AI labs, becomes deeply entwined with one of the world's largest corporations, itself home to one of the industry's largest R&D labs.

Musk co-founded OpenAI in part to counterbalance Google's AI power. Now OpenAI is Microsoft's weapon against Google.

GPT-2 and the Openness Paradox

OpenAI announces GPT-2 in February 2019. It is a language model that can generate surprisingly coherent text.

They decide not to release the full model immediately because it's "too dangerous" and could be used for malicious purposes like misinformation and spam. The decision is confusing and draws criticism: OpenAI's name means open AI, but they're withholding research. OpenAI counters that staged disclosure is the responsible choice. As AI becomes more powerful, blind openness could be dangerous.

The deeper truth is that the decision to restrict GPT-2 marks the death of the founding vision. OpenAI is becoming a normal AI lab. They are protective of their research and worried about competitive advantage. They feel they must balance safety claims with business interests.

Musk feels OpenAI has abandoned the mission. In effect, they have become what they were created to prevent.

GPT-3 and the Product Turn

In June 2020, GPT-3 is announced, a model with 175 billion parameters. It is shockingly capable.

GPT-3 is released only through a limited-access API. OpenAI is now a product company. The research is proprietary and access is monetized. Three months later, OpenAI licenses GPT-3 exclusively to Microsoft. The transformation is complete:

- Founded as non-profit → Now capped-profit

- Committed to openness → Now mostly closed

- Focused on safety research → Now shipping commercial products

- Independent → Now Microsoft-partnered

Elon Musk becomes even more critical. He says it is a betrayal of the founding principles. OpenAI is "OpenAI in name only."

Musk's xAI Counter-Revolution

(2023)

The Frustration Builds

On November 30, 2022, ChatGPT launches. In two short months, it reportedly reaches 100 million users, making it the fastest-growing consumer application to that point. In March 2023 GPT-4 launches, an even more capable product. Microsoft integrates it into Bing, Office, and much of its product line.

Musk has been watching as OpenAI dominates AI headlines. He is simultaneously vindicated and angry. He feels vindicated because AI is advancing rapidly, just as he had warned, and is becoming powerful and potentially dangerous. He is angry because the organization he co-founded to ensure safe AI development has become a closed, profit-driven entity aligned with Microsoft. And they're winning.

The Public Break

In February 2023, Musk issues a scathing criticism:

"OpenAI was created as an open source (which is why I named it 'Open' AI), non-profit company to serve as a counterweight to Google, but now it has become a closed source, maximum-profit company effectively controlled by Microsoft. Not what I intended at all."

Citing risks, in March 2023 Musk signs an open letter calling for a six-month pause on training AI systems more powerful than GPT-4.

The letter is controversial. Critics argue it is competitive maneuvering: Musk just wants time to catch up. Defenders argue that concerns about AI risk are legitimate, regardless of Musk's motives.

Musk's warnings about AI become entangled with his competitive and political positions. Legitimate concerns become suspect because of the messenger, not the message.

The Birth of xAI

Behind the scenes Musk has been busy with his own venture, and he announces xAI on July 12, 2023.

The stated mission is: "The goal of xAI is to understand the true nature of the universe." It is grandiose and vague, very Musk-like.

The xAI team includes:

- Igor Babuschkin (ex-DeepMind)

- Tony Wu (ex-Google)

- Christian Szegedy (ex-Google)

- Greg Yang (ex-Microsoft)

- And others, mostly from OpenAI, DeepMind, Google

Their strategy is to build a competitor to OpenAI's ChatGPT, but to do it the "right" way. This means more truth-seeking with less "woke" bias, more transparency regarding capabilities and limitations, and more focus on understanding rather than just commercial deployment.

Musk has tremendous resources including money, GPUs (reportedly 10,000+ NVIDIA H100s), Twitter/X integration for data, and recruiting power.

It won't be easy, since OpenAI has a multi-year head start, $13 billion from Microsoft, and products already deployed to millions of users.

Grok: The Rebellious AI

xAI releases Grok in November 2023, integrated into X (formerly Twitter) for Premium+ subscribers.

Grok is positioned as a "rebellious" AI with a sense of humor. It is willing to answer "spicy questions" that other AI systems refuse. It has real-time access to X/Twitter data, a competitive advantage. And Grok is less politically correct than OpenAI's ChatGPT.

Grok is based on Grok-1, a large language model competitive with but not clearly superior to GPT-3.5 or Claude 2. The marketing approach is to emphasize personality over capability. Grok will tell you things ChatGPT won't. It's edgier, more fun, and less corporate.

Grok is decent but it is evolutionary, not revolutionary. It's a competent entry in what has become an increasingly crowded market.

Musk is attempting to position AI alignment differently. OpenAI believes AI should be helpful, harmless, honest, avoid bias, and refuse harmful requests. Musk feels AI should pursue truth, even when uncomfortable.

Grok-2 and the Acceleration

Grok-2 is released in August 2024. A substantially improved version, it is competitive with GPT-4 and Claude 3.

A notable feature is that Grok-2 can generate images with minimal content restrictions, leading to viral AI-generated images of politicians, celebrities, and copyrighted characters in compromising or absurd situations. While OpenAI and Anthropic restrict image generation to avoid misinformation, copyright violation, and harmful content, Grok is much more permissive.

Musk's position is that people should have freedom. Other AIs are too restricted, too censorious. Some feel this approach is irresponsible since it enables misinformation, harassment, and copyright violations. The deeper question is which restrictions on AI capabilities are necessary and which are merely paternalistic. Who decides?

Musk is clearly positioning xAI as the anti-establishment AI company.

The Computational Arms Race

In 2024 Musk announces "Colossus." Colossus is a supercluster of 100,000 NVIDIA H100 GPUs for training Grok-3. This is one of the world's largest AI training clusters. Built in a few short months, it is powered by Musk's resources and his relationships with NVIDIA.

The goal is to train models competitive with or superior to GPT-4, Claude 3.5, and whatever comes next. Whoever has the best models controls the AI future, or at least a large part of it.

The revolutionary phase of "small teams with good ideas" is over. This is industrial-scale AI development. Now, only the wealthiest companies and individuals can compete.

The Ideological Revolution

The Woke AI Critique

Musk frames his AI development in explicitly ideological terms. In his telling, OpenAI, Google, and other mainstream AI labs are building "woke" AI that refuses to engage with certain topics, presents left-leaning perspectives, and enforces political correctness.

The examples include:

- ChatGPT refusing to write poems praising Trump while writing ones praising Biden

- Image generators refusing to depict certain historical or political scenarios

- AI avoiding topics around gender, race, or immigration except from progressive perspectives

Some argue these aren't political biases. They are safety measures to avoid generating hate speech, creating misinformation, producing harmful stereotypes, and violating laws or ethical norms.

Both sides have a point. AI systems do embed values in their training and restrictions, and critics argue those values tend to reflect the progressive culture of Silicon Valley. At the same time, unrestricted AI can produce genuinely harmful content, though defining what is "harmful" involves making value judgments.

Musk vows to redefine AI safety from "preventing harm" to "maximizing truth-seeking," even when the truth is uncomfortable or potentially offensive.

Truth-Seeking vs. Safety

This creates competing visions of revolutionary AI. The OpenAI/Anthropic vision asserts that AI should be aligned with human values. It should refuse harmful requests. It should present balanced, accurate information. Safety and helpfulness are paramount.

The Musk/xAI vision holds that AI should pursue truth, even when uncomfortable. Within legal bounds, it should have minimal restrictions. "Alignment" shouldn't mean "alignment with progressive values." Freedom and truth-seeking are paramount.

In edge cases, these views are incompatible. An AI that always pursues truth without restriction may say things that cause harm. An AI restricted from causing harm may sometimes avoid uncomfortable truths.

The key question is who gets to define the values AI embodies. The lab that builds it? The government? The users? The market?

This is not a technical question. It's political, ethical, and contentious.

The Open Source Counter-Move

In March 2024 xAI releases Grok-1 as open source. It has 314 billion parameters and is fully downloadable.

Musk is reclaiming the "open" mantle that OpenAI abandoned. Open-sourcing a model after you've already trained its successor isn't quite the revolutionary openness of the original OpenAI vision, but it's more open than most competitors. It positions xAI as the transparent alternative to secretive corporate labs.

xAI is still a for-profit company. Grok-1 is open, but Grok-2 isn't. Future models likely won't be either. This is marketing more than philosophy. In a field where OpenAI went from "open by default" to "closed by default," even selective openness is notable.

The Broader Revolution

Tesla and AI

While fighting the AI chatbot wars, Musk is simultaneously pushing into autonomous driving, humanoid robots, and brain-computer interfaces.

Tesla's Full Self-Driving (FSD):

- Vision-only approach (no LiDAR, against industry consensus)

- Neural networks trained on billions of miles of driving data from the Tesla fleet

- Over-the-air updates continuously improve capability

FSD is impressive yet imperfect. Musk has repeatedly overpromised timelines by claiming "full autonomy next year" for years. The progress, however, is real. Tesla has more real-world driving data than anyone else. They're training AI on actual human driving at an unprecedented scale. xAI and Tesla are reportedly sharing technology. AI models developed for language might inform vision systems for driving, and vice versa.

Optimus: The Humanoid Robot

Tesla develops Optimus, a humanoid robot, starting in 2021. Musk's vision is to have general-purpose robots performing human labor, including manufacturing, household tasks, and care for children and the elderly. As he puts it, “who wouldn’t want a robot buddy?”

AI is central because robots need vision, planning, and manipulation. The same neural network approaches powering FSD could also power robotics.

Musk predicts millions of Optimus robots will be available by the 2030s, fundamentally transforming labor and the economy. His timelines are notoriously optimistic, but Tesla is investing heavily in robot development to meet them.

If Musk is right, humanoid robots powered by AI could eventually produce more economic value than human labor. This would be the most profound revolution since industrialization.

Neuralink: The Direct Connection

Meanwhile, Musk's Neuralink is developing brain-computer interfaces. The goal is to create a direct neural connection between human brains and computers.

In the near term, Neuralink aims to help paralyzed patients control devices and to restore sight and hearing. The long-term goal is human-AI symbiosis: in Musk's view, if AI becomes superintelligent, humans will need to "merge" with it to remain relevant.

The first human receives a Neuralink implant in January 2024.

Neuralink is Musk's answer to the AI control problem: not controlling AI externally, but integrating humans and AI so closely that they're one system.

If successful, Neuralink changes what being a "human" means. We become cyborgs, augmented by AI, dissolving the boundary between human and machine intelligence.

The Integrated Vision

Musk sees these ventures as connected components of a single future:

- xAI: Advanced language models and reasoning systems

- Tesla: AI for vision, robotics, autonomous systems

- Neuralink: Brain-computer interfaces

- X/Twitter: Data source and distribution platform

Together, they could form an AI ecosystem under Musk's control, with models trained on X's data and deployed in Tesla's robots and cars. Eventually, they could interface directly with human brains via Neuralink.

If it works, this is vertical integration on a species-transformational scale.

For now, these remain somewhat separate businesses with shared technology and vision, some succeeding more than others. The ambition, though, is revolutionary in scope.

The Revolutionary Contradictions

Contradiction 1: Warning vs. Building

Musk's pattern:

- 2014-2015: AI is existentially dangerous, we must be careful

- 2015-2018: Co-founds OpenAI to ensure safe AI development

- 2023-2024: Builds xAI, racing to create powerful AI

The key question: if AI is truly existentially dangerous, why build it at all?

Musk's logic is:

- AI will be built regardless—someone will do it

- Better that it's built by someone who understands the risks

- Safety-conscious development is better than reckless development

- You can't influence AI from the outside; you must build it yourself

Critics respond that Musk wants to build AI for competitive, ego-driven, or commercial reasons and dresses it up as safety-motivated. The likeliest reading is that all of these are true at once: Musk genuinely worries about AI risk, can't resist building transformative technology, and wants to win the AI race.

Contradiction 2: Open vs. Closed

Musk's evolution:

- 2015: Co-founds "OpenAI" committed to openness

- 2018: Leaves as OpenAI becomes less open

- 2023: Criticizes OpenAI for being closed, betraying its mission

- 2024: Runs xAI, which is mostly closed (with selective open releases)

If openness is so important, why isn't xAI fully open? The answer lies in the reality of competition. You can't compete with closed labs while being fully open, for they'll take your research and outpace you.

The original OpenAI vision was probably impossible. Pure openness in a competitive field doesn't survive contact with reality. Musk discovered this at OpenAI. Now he's living with the contradiction at xAI.

Contradiction 3: Safety vs. Freedom

The tension in Musk's thinking:

- As safety advocate: AI is dangerous, needs oversight, could destroy humanity

- As freedom advocate: AI shouldn't be restricted by "woke" values, should pursue truth freely

But how can AI be both carefully controlled (for safety) and minimally restricted (for freedom)?

Musk's answer:

- Safety = preventing loss of human control, ensuring alignment with human values broadly

- Freedom = not restricting AI based on narrow political values

This distinction is slippery. Political values shape what counts as "safe." The line between necessary safety measures and political bias is contested.

The claim is you can have AI that's safe from existential risk without restricting its ability to engage with controversial topics. However, safety often requires restrictions that some will view as political bias.

Contradiction 4: Elite Control vs. Democratization

The paradox:

- Musk criticizes OpenAI for becoming corporate-controlled

- He then builds xAI, which is controlled by... Elon Musk, one person

- He warns about AI concentrating power

- He concentrates AI capabilities in his companies

The question is how Musk-controlled AI is better than Microsoft-OpenAI or Google-DeepMind. Musk trusts himself more than corporations. He believes his values and judgment are better aligned with humanity's interests. This is the classic revolutionary trap: overthrowing one power structure only to create another one, with yourself at the center.

Defenders argue that at least Musk is more transparent about values and goals than faceless corporations. Critics contend that he's transparent about some things, opaque about others, and changes his mind frequently.

The Revolutionary Significance

AI as Battleground

Musk successfully and famously reframed AI development from a purely technical/safety issue to a cultural/political struggle.

- Before: AI safety = preventing accidents, misalignment, unintended consequences

- After: AI safety = also preventing political bias and censorship

This point of view complicates AI governance. Now every safety decision is scrutinized for political motivation. Trust in AI labs decreases and consensus becomes harder.

Revolutionary?: Yes, it changes the discourse.

Demonstrating Competitive AI Development is Possible

xAI went from founding to competitive model (Grok-2) in about 18 months. It shows that with enough capital, talent, and compute, an enterprise can catch up to the leaders relatively quickly.

It implies that AI won't be monopolized by a small number of companies. More players can enter the industry, though competition will remain fierce. But the feat requires billions in capital. Only the wealthiest can play. It's "competitive" only among the wealthy.

Revolutionary?: It proves AI development can be fast paced, which is both exciting (innovation) and concerning (safety).

AI-First Company Integration

The model is to use AI across the entire company portfolio: X for data, Tesla for robotics and computer vision, Neuralink for brain-interface, and xAI for models.

The vision is a vertical integration of AI capabilities across domains. If successful, it creates a massive competitive moat. Tesla's driving data trains better vision models. X's social data trains better language models. Neuralink enables new interfaces.

Revolutionary?: The integration strategy is novel and could be extremely powerful if properly executed. Add in SpaceX, and no one else has AI-first integration across as broad a portfolio as Musk.

The Billionaire-Driven AI Race

Musk is not the only billionaire in the race. He's joined by Mark Zuckerberg (Meta/Llama), Jeff Bezos (Amazon's investment in Anthropic), and others. They are in it to win it.

AI development is increasingly driven by tech billionaire vision and capital, not governments or the research community. AI has come out of the lab in a big way.

The implications are faster development (billionaires can move quickly), less democratic accountability (they answer to no one), values aligned with billionaire preferences, and the concentration of power in the hands of a few.

Revolutionary?: This is a new mode of technological development. It's wealth-driven rather than state- or market-driven.

The Existential Risk Narrative

More than anyone, Elon Musk made "AI existential risk" mainstream with his outspoken comments.

Before Musk, existential risk was discussed in niche, mostly academic communities. Since Musk, existential risk is debated in Congress, at the UN, and in the media worldwide. Major labs have safety teams, while governments debate and adopt regulations like the EU's AI Act.

Did Musk's warnings help or hurt? They helped by raising awareness, funding safety research, and pressuring labs to take AI risks seriously. They may have hurt by associating AI safety with Musk's persona, making it politically divisive, and potentially slowing beneficial AI development.

Revolutionary?: Undeniably yes. The discourse shifted because of his warnings.

The Speed-vs-Safety Dilemma Embodied

Musk warns that AI could destroy humanity while racing to build more powerful AI as fast as possible. The race embodies the core dilemma:

- Slowing down might let less safety-conscious actors win

- Speeding up might create the risks you fear

- There's no obvious safe path

Revolutionary insight: Perhaps there is no "safe" way to develop superintelligence. Maybe we're in a race where slowing down loses and speeding up risks everything. This is the revolutionary—and somewhat terrifying—predicament that Musk has helped articulate.

Epilogue

Today, Musk's AI empire continues to expand. xAI raises billions of dollars. The latest Grok is in training. Tesla's robots improve. Neuralink implants more patients. SpaceX fires more rockets.

Now thoroughly commercialized, OpenAI is integrated throughout Microsoft's empire. Google's Gemini increasingly competes in AI. Anthropic's Claude expands and grows. Meta's Llama models spread as open source.

The AI revolution Musk warned about is happening, and he has become one of its primary drivers as well as an evangelist and an early pioneer.

The assessment:

- As Prophet: Musk was early and loud about AI's importance and risks. Many dismissed him. He was largely right about the trajectory.

- As Revolutionary: His companies are genuinely pushing boundaries with xAI in language models, Tesla in physical AI, Neuralink in human brain interface, etc.

- As Businessman: Whatever else xAI is, it's also a competitive play. Musk was left out of the OpenAI success story. Now, he's building his own...and succeeding.

- As Ideologue: The "truth-seeking" vs "woke AI" framing is as much about politics as AI safety. He has effectively drawn attention to what he sees as progressive bias in AI systems.

Despite his warnings, Musk sees AI as an essential tool for advancing his companies’ missions in transportation, robotics, and space exploration.

Musk's AI journey mirrors his other ventures: the grand vision, technical achievement, self-promotion, controversial methods, mixing of motivations, cult of personality, and genuine impact mixed with hype. His role highlights the cultural tension in AI itself: fear of risk versus the drive for innovation.

The Revolutionary Core

Musk transformed AI from a technical specialty to a civilizational concern. He made it a topic of dinner-table conversation, drew regulatory attention, and prompted existential reflection.

He demonstrated that AI development could be competitive, that the leaders weren't unassailable, that speed was possible.

He forced the values debate: whose values should AI embody? This question was always there, but Musk made it explicit and urgent.

He embodied the contradictions inherent in AI development: necessary yet dangerous, promising and threatening, freedom versus control.

The Unresolved Question

Is Musk helping build beneficial AI or racing toward a catastrophe? Is he a safety advocate or a reckless accelerator? Is xAI a valuable counterweight or a dangerous competitor?

The likely answer is all of these. He's too complex, the situation too multifaceted, and the future too uncertain for simple judgments.

As Musk put it while hosting Saturday Night Live in 2021: "To anyone I've offended, I just want to say: I reinvented electric cars and I'm sending people to Mars in a rocket ship. Did you think I was also going to be a chill, normal dude?"

What is clear is that the revolution is happening, and Musk is shaping it. The prophet who warned about the demon is now summoning his own.

The answer lies ahead, in a future where machines think, robots walk, and—if Neuralink succeeds—the boundary between human and artificial intelligence dissolves entirely. Musk will be there, dressed in a black t-shirt with designer jeans and leather shoes, tweeting his vision, building his empire, and warning of dangers ahead while racing toward them.

Is it revolutionary? Undoubtedly. Is it safe? That's the unanswered question. And the answer is still being computed.
