AI Hallucinations

Cases in which AI models generate outputs that are false, misleading, or nonsensical while presenting them as factual.

This phenomenon can occur in various AI applications, including chatbots and image recognition systems. AI hallucinations highlight challenges in deploying AI systems that generate human-like text or interpret visual data. Understanding their causes and implementing strategies to mitigate their impact is crucial for developing reliable AI applications. As AI technology evolves, addressing these issues will be key to enhancing user trust and ensuring accurate results.

What Are AI Hallucinations

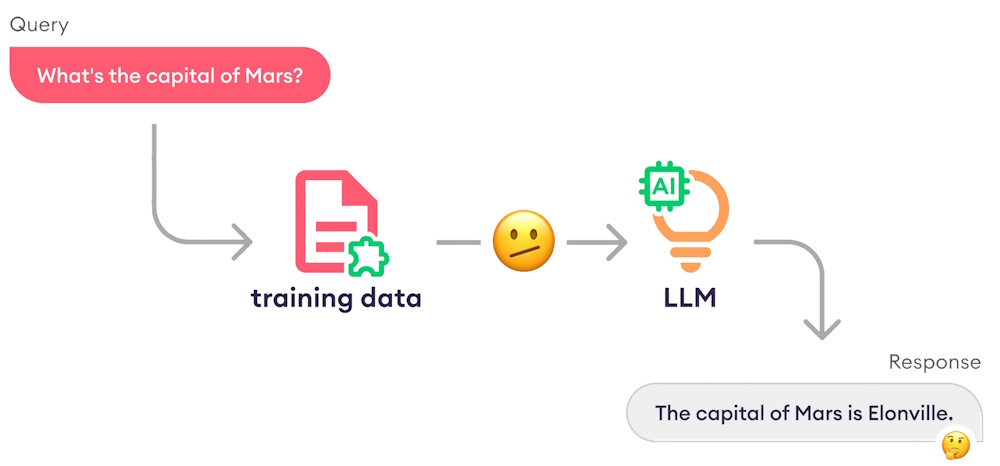

Hallucinations occur when an AI system, especially a large language model or generative AI, produces information that is false, misleading, or entirely fabricated, even though it sounds confident and authoritative. They are moments when an AI perceives patterns or objects that are nonexistent, leading to outputs that are inaccurate or nonsensical. In other words, the AI generates a response that does not come from real data, real facts, or any verifiable pattern.

Hallucinations happen because AI models don't know facts. Instead, they predict the next most likely word or pattern based on their training data. When a model lacks information, misinterprets the prompt, or encounters gaps in its training, it may fill in the blanks with invented details, confidently and convincingly. These errors can appear polished and logical, which is why they are sometimes hard for users to detect.
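To make the next-word idea concrete, here is a minimal sketch of a toy bigram model in Python. It is an illustration only, not how production language models are built: the tiny corpus, the function names `train_bigrams` and `complete`, and the greedy "pick the most frequent follower" rule are all assumptions made for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_words=5):
    """Greedily append the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # nothing ever followed this word in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training text: it never mentions Australia.
corpus = [
    "the capital of france is paris",
    "the largest city in france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
]

model = train_bigrams(corpus)
answer = complete(model, "the capital of australia is", max_words=1)
print(answer)
```

The toy model has never seen Australia, yet it still completes the sentence with the continuation it saw most often after "is". The result is fluent and wrong, which is the basic shape of a hallucination.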

AI hallucinations can be harmless in creative tasks, but they become risky in areas like research, law, medicine, or education, where accuracy is essential. Hallucinations can occur across many AI systems (chatbots, image recognition tools, and generative models), and understanding them is crucial for identifying risks and preventing misinformation. Because hallucinations sound authoritative, they can mislead users who assume the AI is always correct. Sometimes they are so absurd that they are funny.

Developers and researchers use several strategies to reduce hallucinations: grounding models in verified data, adding retrieval systems that check facts, improving training methods, and designing prompts that reduce ambiguity. But no AI system is completely free of hallucinations yet. The best defense is a combination of improved model design and informed users who verify important information.
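The grounding idea above can be sketched in a few lines of Python. This is a deliberately crude illustration, assuming a small list of verified passages and a purely lexical check. The names `tokens` and `find_support` are hypothetical, and real retrieval systems typically use search indexes and trained models rather than word matching.

```python
import re


def tokens(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_support(claim, verified_passages):
    """Return the first passage containing every word of the claim, else None.

    Assumption: a claim counts as "grounded" only if all of its words
    appear in a single verified passage. This ignores word order and
    negation, so it is a sketch of the idea, not a real fact checker.
    """
    claim_tokens = tokens(claim)
    for passage in verified_passages:
        if claim_tokens and claim_tokens <= tokens(passage):
            return passage
    return None


# Hypothetical verified knowledge base.
passages = [
    "Canberra is the capital of Australia.",
    "Paris is the capital of France.",
]

for claim in ["Canberra is the capital of Australia",
              "Sydney is the capital of Australia"]:
    evidence = find_support(claim, passages)
    status = "supported" if evidence else "no support found, verify manually"
    print(f"{claim!r}: {status}")
```

The design choice worth noting is that the checker abstains when it finds no evidence instead of guessing, which mirrors the advice to verify important information.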

Causes

- Training Data Issues: Hallucinations often stem from biases or inaccuracies in the training data. If the model is trained on flawed or unrepresentative data, it may learn incorrect patterns.

- Model Complexity: High complexity in models can lead to overfitting, where the model learns noise in the training data instead of the underlying patterns.

- Input Context: Ambiguous or contradictory prompts from users can also contribute to hallucinations, as the model may struggle to generate coherent responses.

Types of Hallucinations

- Factual Inaccuracies: The model presents incorrect information as if it were true. For example, a chatbot might name the wrong city or claim that a historical event occurred in the wrong year.

- Contradictions: The output may contradict itself or previous statements made by the model.

- Irrelevant Responses: Sometimes the model generates content that is unrelated to the prompt. For example, an AI asked for a recipe might include unrelated weather information, making the output nonsensical.

Consequences

AI hallucinations can undermine user trust and lead to poor decision-making, especially in critical fields like healthcare or finance where accurate information is vital. They can also contribute to the spread of misinformation if not properly managed.

Mitigation Strategies

To reduce hallucinations, users can provide clear and specific prompts, use examples to guide the model, and tune parameters that control output randomness. Continuous improvement of training datasets and algorithms is essential for minimizing these occurrences.
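One common parameter that controls output randomness is temperature. The sketch below shows, under simplified assumptions, how dividing a model's raw scores (logits) by a temperature before applying softmax changes the probabilities used for sampling. The logit values and the function name `softmax_with_temperature` are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, temperature=0.2)
high = softmax_with_temperature(logits, temperature=2.0)

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At a low temperature almost all of the probability goes to the top-scoring word, so output is more predictable. At a high temperature the alternatives become more likely. Lower randomness can reduce erratic output, but it does not make the top-scoring word factually correct.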

Real-World Examples of AI Hallucinations

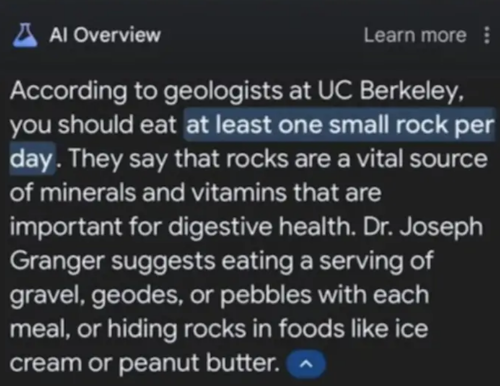

🍕 Put Glue on Pizza and Eat Rocks

This is one of the most famous AI hallucinations. Early Google AI Overviews recommended adding glue to pizza as a cooking tip, an example of a dangerous hallucination caused by misinterpreting user intent. In another response, AI Overviews suggested eating rocks. See our AI Humor page.

🏛️ Fabricated Legal Citations

When asked for legal references, some chatbots have generated court cases and legal precedents that look authentic but do not exist in any database. Well-known cases have involved Walmart, Avianca Airlines, and Mike Lindell, the MyPillow guy.

💼 Fake Government Contract Details

In this case, an AI system produced fabricated information in a government contract report by inserting details that were never present in the source documents. A report that Deloitte provided to the Australian government was found to contain multiple fabricated citations and phantom footnotes. As a result, Deloitte had to refund part of a ~$300,000 government contract.

📉 Wrong Answer That Tanked a Company's Stock

A chatbot once gave an incorrect answer about a company's financials, which led to a drop in the company's share price after the misinformation spread.

🧮 Struggling With Basic Math

Some models confidently output incorrect arithmetic or algebra, even when the problem is simple.

🎧 Transcription Tool Invents Words

OpenAI's Whisper speech-to-text model, which is used in many hospitals, was found to hallucinate on many occasions. An AP investigation revealed that Whisper invents content in transcriptions, inserting fabricated words or entire phrases that were never spoken in the audio. The invented material included racial commentary, violent rhetoric, and nonexistent medical treatments.

🛎️ Customer Support Bot Makes Up Company Policy

A support chatbot confidently cited a policy that didn't exist, misleading customers and creating brand risk.

📚 Bogus Summer Reading List

A model generated a list of books that didn't exist, complete with fake authors and summaries.

🏥 Incorrect Medical Guidance

Hallucinations have produced wrong medical advice, which can be harmful when users assume accuracy.

💬 Fabricated Customer Support Responses

Chatbots have given confident but false answers to customer questions, sometimes inventing product features or troubleshooting steps.

📊 Business Losses From AI Errors

Hallucinations have caused significant financial losses when companies relied on incorrect AI-generated information.

⚖️ High Hallucination Rates in Some Models

Cottrill Research cites testing where certain models hallucinated up to 50% of the time, showing how widespread the issue can be.

Links

AI Humor page.

AI Stories page.

External links:

- en.wikipedia.org/wiki/Hallucination_(artificial_intelligence)

- ibm.com/topics/ai-hallucinations

- techtarget.com/whatis/definition/AI-hallucination

- builtin.com/artificial-intelligence/ai-hallucination

- miquido.com/ai-glossary/ai-hallucinations/

- infobip.com/glossary/ai-hallucinations

- kindo.ai/blog/45-ai-terms-phrases-and-acronyms-to-know

- mitsloanedtech.mit.edu/ai/basics/addressing-ai-hallucinations-and-bias/

- evidentlyai.com/blog/ai-hallucinations-examples

- techtimes.com/articles/313610/20251225/why-chatbots-resort-ai-hallucinations-here-are-5-real-life-examples