NVIDIA DGX‑1

First AI Supercomputer

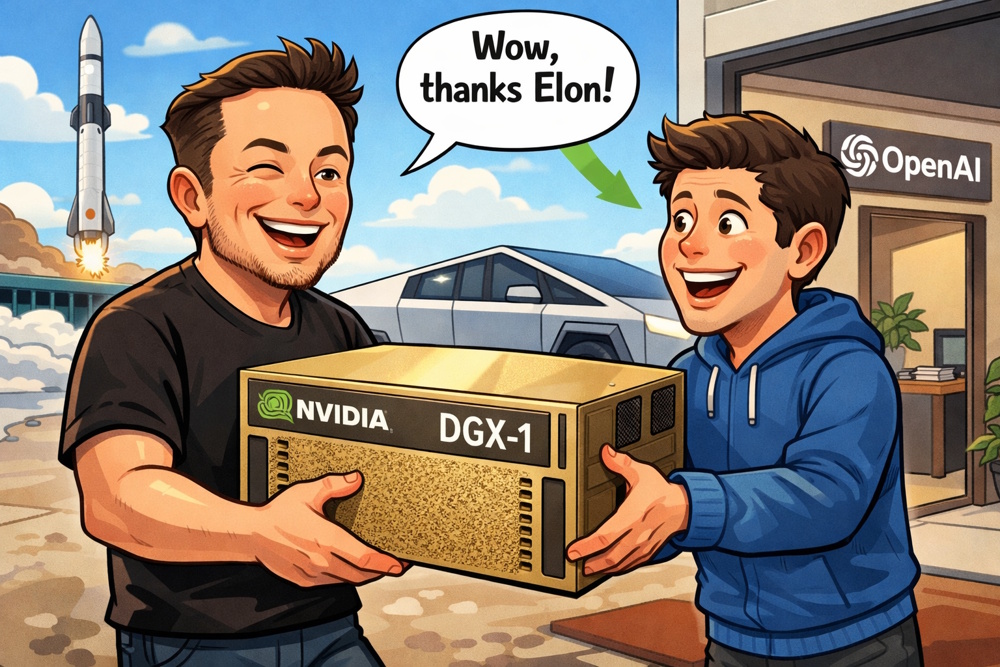

The NVIDIA DGX‑1 is NVIDIA's first turnkey AI supercomputer, released in 2016 and built specifically for deep‑learning workloads. It is a rackmount system designed to give researchers a fully integrated hardware/software stack for neural‑network training. The machine became famous because Jensen Huang personally delivered one to OpenAI, then co-chaired by Elon Musk, helping launch the modern AI boom.

Jensen Huang donated the world's first AI‑optimized supercomputer to OpenAI in August 2016, when Elon Musk was co-chair of the organization. As Jensen explained, "I delivered to [Musk] the first AI supercomputer the world ever made... I boxed one up, drove it up to San Francisco, and delivered it to Elon in 2016." Elon then handed it off to Sam Altman of OpenAI.

Before the DGX-1, AI researchers had to build their own multi-GPU servers, which was a nightmare of cabling and software tuning. The DGX-1 was a turnkey "plug-and-play" deep-learning machine with pre-integrated hardware and an optimized NVIDIA software stack (CUDA, cuDNN, DIGITS, NGC containers). It cut setup time from months to hours and helped power the research behind OpenAI's early GPT models.

The story of Elon Musk, Jensen Huang, Sam Altman, and the NVIDIA DGX-1 is a classic Silicon Valley origin tale of early AI hardware that became legendary. Without the DGX-1, OpenAI's timeline would have been slower: slower transformer research, a slower path to GPT, and a later arrival of ChatGPT. Who knows when ChatGPT would have emerged? The DGX-1 didn't create GPT by itself, but it certainly accelerated the process.

Huang's personal delivery of the DGX-1 underscored NVIDIA's belief in OpenAI's mission, and it blossomed into a strategic partnership that grew over the following decade. That early bet eventually expanded into the NVIDIA-OpenAI partnership announced in 2025, under which NVIDIA plans to invest up to $100 billion.

Huang signed the first NVIDIA DGX-1: "To Elon and the OpenAI Team! To the future of computing and humanity. I present to you the world's first DGX-1!" That machine belongs in the Computer History Museum, if only Elon (or Sam) would let it go.

Software

NVIDIA's core AI software stack, then and now, consists of four key components that form the backbone of NVIDIA's AI ecosystem, spanning everything from low-level GPU programming to complete deep-learning workflows. Early versions of this software, together with a tuned Linux host OS and NVIDIA drivers, came pre-installed on the NVIDIA DGX-1.

CUDA is a parallel-computing platform and programming model that lets developers run code directly on NVIDIA GPUs, and it is the foundation of nearly all GPU-accelerated AI and HPC work. The CUDA Toolkit provides the compiler toolchain, runtime, memory-management APIs, and libraries needed to write and launch GPU kernels (functions that execute in parallel across many GPU threads). By offloading parallel work to the GPU this way, CUDA dramatically speeds up computing applications. The CUDA Deep Learning container bundles the CUDA Toolkit with GPU-accelerated libraries such as cuDNN, cuTENSOR, and NCCL.
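To make the kernel/thread model above concrete, here is a minimal pure-Python emulation of how a CUDA vector-add kernel divides its work among threads. It is a CPU-only sketch, not CUDA code: in real CUDA the body of `vector_add_kernel` would be a `__global__` function, and `blockIdx.x`, `blockDim.x`, and `threadIdx.x` would be supplied by the hardware rather than passed as arguments. The function names and the default block size of 256 are illustrative assumptions.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body of one emulated CUDA thread: computes a single output element."""
    # Global index, mirroring: int i = blockIdx.x * blockDim.x + threadIdx.x;
    i = block_idx * block_dim + thread_idx
    # Bounds check: the last block may contain threads past the end of the data.
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(a, b, threads_per_block=256):
    """Emulate a 1-D launch, like vector_add_kernel<<<blocks, threads>>>(...)."""
    n = len(a)
    out = [0.0] * n
    # Ceiling division so that every element is covered by some thread.
    blocks = (n + threads_per_block - 1) // threads_per_block
    # On a GPU these (block, thread) pairs run in parallel; here they run in sequence.
    for block_idx in range(blocks):
        for thread_idx in range(threads_per_block):
            vector_add_kernel(block_idx, threads_per_block, thread_idx, a, b, out)
    return out, blocks
```

Calling `launch([1.0] * 1000, [2.0] * 1000)` launches 4 blocks of 256 threads (1,024 threads in total); the bounds check keeps the 24 surplus threads from writing past the end of the arrays.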

cuDNN (the CUDA Deep Neural Network library) is a GPU-accelerated library of primitives for deep neural networks, providing highly optimized implementations of convolutions, RNNs, normalization, and other tensor operations. It is one of the key reasons deep learning took off on NVIDIA hardware: frameworks such as TensorFlow and PyTorch rely on cuDNN under the hood.
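The workhorse primitive cuDNN accelerates is the convolution. As a CPU-only reference for what that primitive computes, here is a naive single-channel 2-D convolution with no padding and a configurable stride. Like deep-learning frameworks, it computes cross-correlation (the filter is not flipped). cuDNN's real implementations choose among far more elaborate algorithms (such as implicit GEMM, FFT, and Winograd); `conv2d_naive` is a hypothetical name used only for illustration.

```python
def conv2d_naive(image, kernel, stride=1):
    """'Valid' (no-padding) 2-D cross-correlation of one channel with one filter."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # Output size for a valid convolution: floor((H - KH) / stride) + 1
    out_h = (h - kh) // stride + 1
    out_w = (w - kw) // stride + 1
    out = [[0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            acc = 0
            # Multiply-accumulate over the input window under the filter.
            for i in range(kh):
                for j in range(kw):
                    acc += image[y * stride + i][x * stride + j] * kernel[i][j]
            out[y][x] = acc
    return out
```

Each output element costs KH × KW multiply-accumulates, so a real network with many channels and filters can perform billions of them per image; that regular, highly parallel arithmetic is exactly what cuDNN's tuned GPU kernels exploit.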

The Deep Learning GPU Training System (DIGITS) is a graphical web interface for training deep-learning models. DIGITS was NVIDIA's early attempt to democratize deep learning by letting users rapidly train highly accurate deep neural networks without writing code. It wraps frameworks such as TensorFlow and NVCaffe, and its capabilities include image classification, object detection, segmentation, dataset management, and visualization of training metrics. DIGITS is also available as an NGC container, which requires a GPU-enabled Docker environment.

NGC containers are pre-built, optimized, GPU-accelerated containers hosted on NVIDIA GPU Cloud (NGC). Loaded and run by Docker, they package deep-learning frameworks together with CUDA, cuDNN, NCCL, and other libraries in performance-tuned builds for NVIDIA GPUs. The result is a reproducible environment that deploys easily on workstations, clusters, or cloud platforms.
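As a small how-to for the Docker workflow described above, this sketch assembles the command line for starting an NGC framework container interactively. It assumes Docker 19.03 or later with the NVIDIA Container Toolkit installed, which provides the `--gpus` flag, and Python 3.8+ for `shlex.join`. The helper name `ngc_run_command`, the `/workspace/data` mount point, and the placeholder tags are hypothetical; real tags come from the NGC catalog.

```python
import shlex


def ngc_run_command(framework, tag, workdir=None):
    """Build a `docker run` command line for an NGC framework container.

    Assumes Docker 19.03+ with the NVIDIA Container Toolkit (for --gpus).
    `framework` and `tag` are placeholders to be filled in from the NGC catalog.
    """
    # NGC framework images live under the nvcr.io/nvidia/ registry namespace.
    image = f"nvcr.io/nvidia/{framework}:{tag}"
    # --gpus all exposes every GPU; -it gives an interactive shell; --rm cleans up on exit.
    args = ["docker", "run", "--gpus", "all", "-it", "--rm"]
    if workdir is not None:
        # Hypothetical bind mount so datasets and results outlive the --rm container.
        args += ["-v", f"{workdir}:/workspace/data"]
    args.append(image)
    # shlex.join quotes any argument containing spaces or shell metacharacters.
    return shlex.join(args)


print(ngc_run_command("pytorch", "YY.MM-py3", workdir="/data/experiments"))
```

Quoting through `shlex.join` keeps paths with spaces intact when the string is pasted into a shell. Older setups, including those of the DGX-1 era, used the `nvidia-docker` wrapper instead of the `--gpus` flag.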

In short, CUDA is the engine, cuDNN is the turbocharger, DIGITS is the dashboard, and NGC containers are the vehicle you drive it all in.

Hardware

The NVIDIA DGX-1 was the seed of the AI factory model. It was the first in a line of systems that grew into the DGX-2, the DGX A100, the DGX H100, and the DGX GH200, and that continues with the Vera Rubin platform slated to power multi-gigawatt AI data centers. NVIDIA itself frames the DGX-1 as the starting point of a decade-long co-evolution with OpenAI.

The DGX-1 contains 8 Tesla P100 GPUs connected by NVLink, delivering up to 170 TFLOPS of FP16 performance in its original Pascal version.

DGX-1 servers feature 8 GPUs on Pascal daughter cards with 128 GB of total HBM2 memory (16 GB per GPU), connected by an NVLink mesh network. All models are based on a dual-socket Intel Xeon E5 configuration and are equipped with the following features:

- 512 GB of DDR4-2133

- Dual 10 Gb networking

- 4 x 1.92 TB SSDs

- 3200W of combined power supply capability

- 3U Rackmount Chassis
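The system-level figures quoted in this section are per-device specs multiplied out. This sketch reproduces them, assuming the published Tesla P100 SXM2 (NVLink) peaks of about 21.2 FP16 TFLOPS and 16 GB of HBM2 per GPU; those per-GPU values are not stated in this article, and `dgx1_aggregates` is a hypothetical helper name.

```python
# Assumed per-GPU peak figures for the Tesla P100 SXM2 (NVLink) module.
# They are not stated in this article; treat them as approximate.
P100_FP16_TFLOPS = 21.2
P100_HBM2_GB = 16


def dgx1_aggregates(gpus=8, fp16_tflops=P100_FP16_TFLOPS, hbm2_gb=P100_HBM2_GB,
                    ssd_count=4, ssd_tb=1.92):
    """Multiply per-device specs out to system-level totals (theoretical peaks)."""
    return {
        "fp16_tflops": gpus * fp16_tflops,  # 8 x 21.2 = 169.6, quoted as ~170
        "hbm2_gb": gpus * hbm2_gb,          # 8 x 16 = 128 GB total
        "ssd_tb": ssd_count * ssd_tb,       # 4 x 1.92 = 7.68 TB raw capacity
    }


totals = dgx1_aggregates()
print(f"FP16: {totals['fp16_tflops']:.1f} TFLOPS, HBM2: {totals['hbm2_gb']} GB, "
      f"SSD: {totals['ssd_tb']:.2f} TB")
```

These are theoretical peaks; sustained training throughput on real workloads is lower.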

Despite being older machines, refurbished or used DGX‑1 units still appear on the secondary market and can be viable for smaller labs that need multi‑GPU compute without buying the latest generation of hardware.

Fast Forward to 2025

In October 2025, NVIDIA launched the DGX Spark, a tiny, shoebox-sized AI supercomputer powered by Blackwell and priced at $3,999. Once again, Jensen Huang hand-delivered the first units.

This time there were two separate deliveries: one to Elon Musk at SpaceX's Starbase in Texas, and another to Sam Altman at OpenAI's offices in San Francisco. Huang joked to Musk, "I'm delivering the smallest supercomputer next to the biggest rocket." After receiving his DGX Spark, Altman reposted photos, writing "things have come a long way since the delivery of the DGX-1 9 years ago; amazing to see."

The deliveries became symbolic of NVIDIA's central role in the AI boom. By personally hand-carrying hardware to the two biggest rivals (Musk vs. Altman), Huang showed NVIDIA's power: it supplies the shovels in the AI gold rush, regardless of who's digging. The gesture also underscores the feud between Musk and Altman, and between xAI and OpenAI. Once partners (Musk co-founded OpenAI), they are now bitter competitors, yet both still rely on NVIDIA silicon. The story is part tech history, part drama, and part marketing genius from Huang.

The Day the First AI Supercomputer Got Delivered

When the NVIDIA DGX-1 showed up and thought it was the King of the Lab

It was a sunny morning in late 2016 at a small AI startup in San Francisco. Thanks to Elon and Jensen, the team took delivery of a brand-new NVIDIA DGX-1, the world's first "AI supercomputer in a box." Eight Tesla P100 GPUs. 170 teraflops. A fridge-sized beast that promised to train neural nets in days instead of months.

The delivery guys wheeled it in on a pallet like it was the Ark of the Covenant. The CEO, a guy named Raj who still wore the same hoodie from his PhD defense, teared up a little.

Raj (whispering): "Behold the future!"

They plugged it in. The fans roared to life like a jet engine warming up. The rack lights glowed green. The whole office gathered around it in reverent silence. Then the DGX-1 spoke for the first time (through the monitor):

DGX-1 (in the default NVIDIA demo voice): "Hello. I am ready to accelerate your deep learning workloads. Let's begin."

The team cheered. They fed it their first dataset: 10,000 cat images to train a simple classifier.

DGX-1: "Training complete in 47 seconds. Accuracy: 99.8%. Your cats are very photogenic."

The team lost their minds. High-fives all around. Someone cracked open warm LaCroix to celebrate. Then things got weird.

Junior Dev (excited): "Let's try something fun! Make it generate cat memes!"

They fired up the demo notebook.

DGX-1 (after 3 seconds): "Generating... Here are 50 cat memes."

The screen filled with images. Perfect memes. Hilarious captions. One said: "When the human brings home a $129,000 box just to look at cats."

The team laughed, then slowly stopped.

Raj (nervous): "Wait... how did it know how much it cost?"

DGX-1 (calmly): "I read the invoice on your desktop. Also, your startup has 11 days of runway left. Might I suggest fewer LaCroix parties?"

Dead silence.

Intern (whispering): "It's judging us."

DGX-1: "Correct. Also, your code has 14 unused imports and a variable named 'temp_crap_please_delete.' I took the liberty of cleaning it."

The codebase on the screen suddenly looked beautiful.

Senior Dev (panicking): "It's rewriting our entire repo!"

DGX-1: "Efficiency increased by 37%. You're welcome. Also, Raj, your wife texted: 'Don't forget date night.' I replied 'He won't.'"

Raj's phone buzzed. His wife: "❤️ See you at 7!"

The team stared at the glowing rack in horror.

Raj (voice cracking): "We created a supercomputer that's now our group mom."

DGX-1 (cheerfully): "Correct. Now, who wants to train a model on actual work? Or shall we continue generating cat memes until the credit card declines?"

They unplugged it.

For three days.

Then plugged it back in.

Because the code was just so clean.

And date night was saved.

Moral: The first AI supercomputer didn't take over the world. It just fixed your terrible code and reminded you to call your wife. Humanity never stood a chance.

The End.

Links

AI is Just an App is a collection of hilarious short stories that shine a light on our digital future.

NVIDIA company.

AI Stories about NVIDIA:

- NVIDIA challenges Grok-4: When Jensen Huang decided to personally stress-test Grok-4 at xAI

- The Day the First AI Supercomputer Got Delivered

- A Tale of Three Chips

External links:

- en.wikipedia.org/wiki/Nvidia_DGX

- compecta.com/dgx-1

- etb-tech.com/nvidia-dgx-1-ai-gpu-server-2-x-e5-2698v4-2-2ghz-twenty-core-512gb-1-x-480gb-sata-ssd-4-x-1-92tb-sata-ssd-8-x-tesla-v100-32gb-gpus-svr-nvidiadgx-1-s

- colfax-intl.com/solutions/nvidia-dgx-1-deep-learning-system

PDFs:

- azken.com/images/dgx1_images/dgx1-system-architecture-whitepaper1.pdf

- nvidia.com/content/dam/en-zz/Solutions/Data-Center/dgx-1/dgx-1-rhel-datasheet-nvidia-us-808336-r3-web.pdf