Queen Elizabeth Prize

2025 QEPrize Winners: Modern Machine Learning

Meet Some of the All Stars of AI!

The 2025 Queen Elizabeth Prize for Engineering is awarded to seven engineers who have made seminal contributions to the development of Modern Machine Learning, a core component of artificial intelligence (AI) advancements.

Modern machine learning enables systems to learn from data, identify patterns, and make decisions or predictions without being explicitly programmed for every scenario. This capacity for self-improvement is crucial in advancing AI, as it allows models to adapt and improve over time as they encounter new data.

The recent advances in machine learning rely on innovations in algorithms, processing power and benchmark datasets. It is the combination of these interrelated breakthroughs that underpins the widespread adoption and application of AI systems.

Yoshua Bengio, Geoffrey Hinton, John Hopfield and Yann LeCun have long championed artificial neural networks as an effective model for machine learning and this is now the dominant paradigm. Together they are responsible for the conceptual foundations of this approach.

Jensen Huang and Bill Dally have led developments in the hardware platforms that underpin the operation of modern machine learning algorithms. Their vision of exploiting Graphics Processing Units, and the architectural advances that followed, enabled the scaling that has been central to the successful application of these algorithms.

Fei-Fei Li established the importance of providing high-quality datasets, both to benchmark progress and to underpin the training of machine learning algorithms. By creating ImageNet, a large-scale image database used for object recognition software research, she enabled access to millions of labelled images that have been instrumental in training and evaluating computer vision algorithms.

Together, the work of these engineers has laid the foundations for the machine learning that lies behind many of the most exciting innovations shaping the world today.

Jensen Huang

Jensen Huang founded NVIDIA in 1993 and has served since its inception as President, Chief Executive Officer, and a member of the board of directors. Since its founding, NVIDIA has pioneered accelerated computing. The company's invention of the GPU in 1999 sparked the growth of the PC gaming market, redefined computer graphics, and ignited the era of modern AI. NVIDIA is now driving the platform shift of accelerated computing and generative AI, transforming the world's largest industries and profoundly impacting society.

Huang has been elected to the National Academy of Engineering and is a recipient of the Semiconductor Industry Association's highest honour, the Robert N. Noyce Award; the IEEE Founder's Medal; the Dr Morris Chang Exemplary Leadership Award; and honorary doctorate degrees from Taiwan's National Chiao Tung University, National Taiwan University, and Oregon State University. He has been named the world's best CEO by Fortune, the Economist, and Brand Finance, as well as one of TIME magazine's 100 most influential people.

Prior to founding NVIDIA, Huang worked at LSI Logic and Advanced Micro Devices. He holds a BSEE degree from Oregon State University and an MSEE degree from Stanford University.

Yoshua Bengio

Yoshua Bengio is best known for his pioneering work in deep learning and is recognised worldwide as one of the leading experts in artificial intelligence. He is Full Professor at Université de Montréal, and the Founder and Scientific Director of Mila - Quebec AI Institute. He co-directs the CIFAR Learning in Machines & Brains programme and acts as Scientific Director of IVADO.

He has received numerous awards, including the prestigious Killam Prize and Herzberg Gold Medal in Canada, a CIFAR AI Chair, Spain's Princess of Asturias Award, and the VinFuture Prize. He is a Fellow of both the Royal Society of London and the Royal Society of Canada, a Knight of the Legion of Honor of France, an Officer of the Order of Canada, and a Member of the UN's Scientific Advisory Board for Independent Advice on Breakthroughs in Science and Technology.

Concerned about the social impact of AI, he actively contributed to the Montreal Declaration for the Responsible Development of Artificial Intelligence and currently chairs the International Scientific Report on the Safety of Advanced AI.

Geoffrey Hinton

Professor Geoffrey Hinton received his BA in Experimental Psychology from Cambridge in 1970 and his PhD in Artificial Intelligence from Edinburgh in 1978. He did postdoctoral work at Sussex University and the University of California San Diego and spent five years as a faculty member in the Computer Science department at Carnegie Mellon University. He then became a fellow of the Canadian Institute for Advanced Research and moved to the Department of Computer Science at the University of Toronto. He spent three years from 1998 until 2001 setting up the Gatsby Computational Neuroscience Unit at University College London and then returned to the University of Toronto, where he is now an emeritus distinguished professor. From 2013 to 2023 he worked half-time for Google, where he became a VP and Engineering Fellow.

He was one of the researchers who introduced the backpropagation algorithm and the first to use backpropagation for learning word embeddings. His other contributions to neural network research include Boltzmann machines, distributed representations, time-delay neural nets, mixtures of experts, variational learning and deep learning. His research group in Toronto made major breakthroughs in deep learning that revolutionized speech recognition and object classification.

Geoffrey is a fellow of the UK Royal Society and a foreign member of the US National Academy of Engineering, the US National Academy of Sciences and the American Academy of Arts and Sciences. His awards include the David E. Rumelhart Prize, the IJCAI Award for Research Excellence, the Killam Prize for Engineering, the IEEE Frank Rosenblatt Medal, the NSERC Herzberg Gold Medal, the IEEE James Clerk Maxwell Gold Medal, the NEC C&C Award, the BBVA Award, the Honda Prize, the Princess of Asturias Award and the ACM Turing Award.

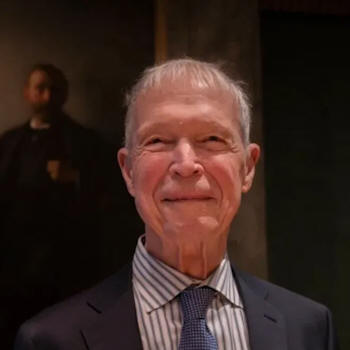

John Hopfield

After receiving a BA from Swarthmore College (1954), Hopfield studied theoretical condensed matter physics at Cornell University (PhD 1958). He next became a member of the technical staff at AT&T Bell Laboratories, an affiliation he kept up for almost 30 years while on the faculty at UC Berkeley, Princeton University, and Caltech. He received the 1968 Oliver Buckley Prize of the American Physical Society (jointly with experimental chemist D. G. Thomas).

Hopfield was appointed Roscoe Dickinson Professor of Chemistry and Biology at Caltech in 1980. His first paper on neural networks, "Neural networks and physical systems with emergent collective computational abilities" (1982), is the most often cited of his more than 200 scientific papers.

Recognitions include honorary degrees from Chicago and Swarthmore, a MacArthur Fellowship (1983-1988), California Scientist of the Year (1991), the Dirac Medal and Prize (2001), the Albert Einstein Award (2005), and the Swartz Prize of the Society for Neuroscience (2012). He was President of the American Physical Society (2006), and is a member of the American Philosophical Society, the National Academy of Sciences, and the American Academy of Arts and Sciences.

In 2019 John Hopfield was awarded the Benjamin Franklin Medal in Physics by the Franklin Institute for his work in applying theoretical physics to biological questions, including neuroscience and genetics. His work on neural networks has had a significant impact on machine learning.

In 2024 he was jointly awarded the Nobel Prize in Physics for "foundational discoveries and inventions that enable machine learning with artificial neural networks".

Yann LeCun

Yann LeCun is VP & Chief AI Scientist at Meta and the Jacob T. Schwartz Professor at NYU, affiliated with the Courant Institute of Mathematical Sciences & the Center for Data Science. He was the founding Director of FAIR and of the NYU Center for Data Science. He received an Engineering Diploma from ESIEE (Paris) and a PhD from Sorbonne Université. After a postdoc in Toronto he joined AT&T Bell Labs in 1988, and AT&T Labs in 1996 as Head of Image Processing Research. He joined NYU as a professor in 2003 and Meta/Facebook in 2013.

His interests include AI, machine learning, computer perception, robotics, and computational neuroscience. He is the recipient of the 2018 ACM Turing Award (with Geoffrey Hinton and Yoshua Bengio) for "conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing", and a member of the National Academy of Sciences, the National Academy of Engineering, and the French Académie des Sciences.

Bill Dally

Bill Dally joined NVIDIA in January 2009 as Chief Scientist, after spending 12 years at Stanford University, where he was chairman of the computer science department. Together with his Stanford team he developed the system architecture, network architecture, signalling, routing and synchronization technology that is found in most large parallel computers today.

Bill was previously at the Massachusetts Institute of Technology from 1986 to 1997, where he and his team built the J-Machine and the M-Machine, experimental parallel computer systems that pioneered the separation of mechanism from programming models and demonstrated very low overhead synchronization and communication mechanisms. From 1983 to 1986, he was at the California Institute of Technology (Caltech), where he designed the MOSSIM Simulation Engine and the Torus Routing chip, which pioneered "wormhole" routing and virtual-channel flow control.

He is a member of the National Academy of Engineering, a Fellow of the American Academy of Arts & Sciences, a Fellow of the IEEE and the ACM, and has received the ACM Eckert-Mauchly Award, the IEEE Seymour Cray Award, and the ACM Maurice Wilkes award. He has published over 250 papers, holds over 120 issued patents, and is an author of four textbooks.

Fei-Fei Li

Dr Fei-Fei Li is the inaugural Sequoia Professor in the Computer Science Department at Stanford University, and Co-Director of Stanford's Human-Centered AI Institute. She served as the Director of Stanford's AI Lab from 2013 to 2018. During a sabbatical from Stanford, she was Vice President at Google and served as Chief Scientist of AI/ML at Google Cloud.

Fei-Fei's current research interests include cognitively inspired AI, machine learning, deep learning, computer vision, robotic learning, and AI+healthcare, especially ambient intelligent systems for healthcare delivery. In the past she has also worked on cognitive and computational neuroscience.

She is the inventor of ImageNet and the ImageNet Challenge, a critical large-scale dataset and benchmarking effort that has contributed to the latest developments in deep learning and AI. In addition to her technical contributions, she is a leading national voice advocating for diversity in STEM and AI. She is co-founder and chairperson of the national non-profit AI4ALL, which aims to increase inclusion and diversity in AI education.

Links

AI Biographies page

Most Influential People in AI page