AI Tokenization

Tokens for AI

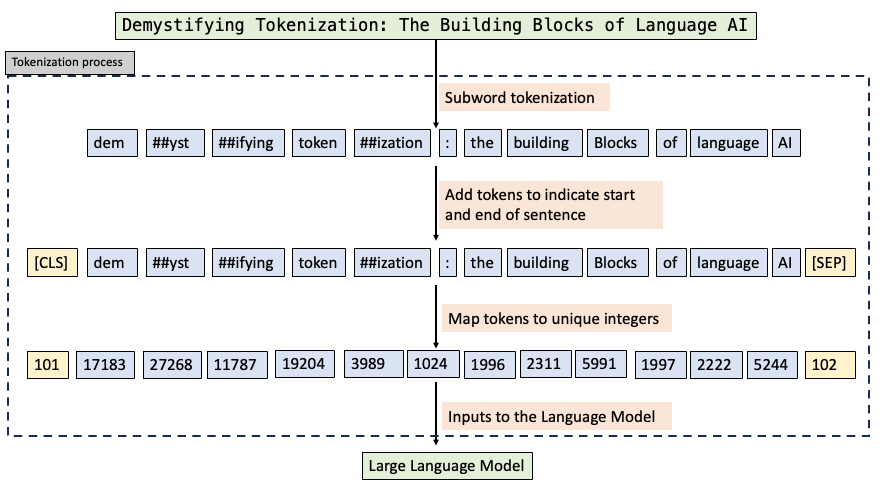

Tokenization, the process of breaking text into smaller units called tokens, is one of the building blocks of natural language processing.

Tokenization is a critical step in preparing text for AI processing: it lets machines analyze and generate human language across many applications. The term has a separate meaning in credit card processing, where tokenization is a fraud prevention and identity protection method that replaces a user's real information (such as their name and card number) with a random string of letters and numbers. That "token" can be traced back to the corresponding customer only by the merchant, which protects the user's information from exposure during a transaction.

The Process of Tokenization

Here's how it works:

- Text is first normalized or standardized into a consistent format.

- The normalized text is then divided into tokens, which can be words, subwords, or characters.

- Unique tokens are added to a vocabulary list with numerical indices.

- Each token's index is then mapped to a numerical vector representation called an embedding.
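
The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular model does it: the function names (`normalize`, `tokenize`, `build_vocab`, `make_embeddings`) are hypothetical, the tokenizer is a simple regular expression, and the embeddings are random placeholders, whereas real models learn their embeddings during training.

```python
import random
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization, lowercase, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> list[str]:
    """Split into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Give each unique token an index, in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def make_embeddings(vocab_size: int, dim: int = 4, seed: int = 0) -> list[list[float]]:
    """Random placeholder vectors (assumption: stands in for learned embeddings)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


text = "  The cat sat.   The cat slept!  "
tokens = tokenize(normalize(text))
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
embeddings = make_embeddings(len(vocab))
vectors = [embeddings[i] for i in ids]  # one vector per token

print(tokens)  # ['the', 'cat', 'sat', '.', 'the', 'cat', 'slept', '!']
print(ids)     # [0, 1, 2, 3, 0, 1, 4, 5]
```

Repeated tokens share an index, so both occurrences of "the" map to the same vector.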

Types of Tokenization

Here are the main types of tokenization:

- Space-Based Tokenization: Splits text into words based on spaces.

- Dictionary-Based Tokenization: Divides text according to a predefined dictionary.

- Byte-Pair Encoding (BPE): A subword tokenization method that starts from individual characters and iteratively merges the most frequent pair of adjacent symbols.

- WordPiece: Another subword tokenization algorithm, popularized by models like BERT.
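
To make the BPE idea concrete, here is a small sketch of learning merge rules from a toy word list. It is a simplification under stated assumptions: the helper names (`merge_pair`, `learn_bpe`, `apply_bpe`) are hypothetical, ties are broken by first appearance, and production tokenizers typically add details such as end-of-word markers or byte-level symbols that are omitted here.

```python
from collections import Counter


def merge_pair(symbols: tuple, pair: tuple) -> tuple:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(words: list[str], num_merges: int) -> list[tuple]:
    """Learn up to `num_merges` merge rules from a list of words."""
    # Each distinct word becomes a tuple of characters with its frequency.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pair_counts: Counter = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair; on a tie, the pair seen first wins.
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_corpus: Counter = Counter()
        for symbols, freq in corpus.items():
            new_corpus[merge_pair(symbols, best)] += freq
        corpus = new_corpus
    return merges


def apply_bpe(word: str, merges: list[tuple]) -> list[str]:
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = learn_bpe(words, num_merges=3)
print(merges)                       # [('e', 's'), ('es', 't'), ('l', 'o')]
print(apply_bpe("lowest", merges))  # ['lo', 'w', 'est']
```

Note that "lowest" never appears in the toy word list, yet it is still split into pieces learned from other words.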

Importance in AI

Tokenization is fundamental to large language models, which rely on tokens to generate text, translate languages, and answer questions. It lets AI models process and analyze language efficiently while capturing its nuances and complexities, and it underpins tasks such as machine translation, speech recognition, and search.

Splitting sentences into tokens gives a model discrete units whose context and meaning it can learn, which leads to better interpretation of language. Breaking text into smaller, more manageable units also lets models process large amounts of text much faster. Subword tokenization can break unfamiliar words into recognizable parts, so models can handle words not seen during training. Tokens can also serve as features in machine learning models, making it possible to identify important keywords and patterns in text.
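
The way subword tokenization handles unseen words can be illustrated with a greedy longest-match split, the general strategy used by WordPiece-style tokenizers. The sketch below is illustrative only: the function name `split_into_subwords` and the tiny vocabulary are hypothetical, and the `##` prefix for word-internal pieces follows the convention used by BERT.

```python
def split_into_subwords(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedily take the longest vocabulary piece at each position."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits: the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary; real vocabularies hold tens of thousands of pieces.
vocab = {"token", "##ization", "##ize", "un", "##believ", "##able"}

print(split_into_subwords("tokenization", vocab))  # ['token', '##ization']
print(split_into_subwords("unbelievable", vocab))  # ['un', '##believ', '##able']
print(split_into_subwords("xyz", vocab))           # ['[UNK]']
```

Neither "tokenization" nor "unbelievable" is in the vocabulary as a whole word, but both can be represented from known pieces.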

Challenges of Tokenization

- Handling ambiguities in language (e.g., contractions, punctuation).

- Dealing with languages that do not mark word boundaries in writing, such as Chinese, Japanese, and Thai, which are written without spaces between words.

- Balancing vocabulary size with semantic representation.
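
The first two challenges are easy to see with a naive tokenizer. The short sketch below uses only Python built-ins; the sample sentences are illustrative, and the regular expression is just one possible splitting rule, not a recommended one.

```python
import re

english = "Don't stop!"
chinese = "我喜欢猫"  # "I like cats", written without spaces

# Splitting on whitespace leaves punctuation glued to words.
whitespace_tokens = english.split()

# Splitting punctuation off breaks the contraction into three pieces.
regex_tokens = re.findall(r"\w+|[^\w\s]", english)

# With no spaces to split on, the whole sentence becomes one token.
chinese_tokens = chinese.split()

print(whitespace_tokens)  # ["Don't", 'stop!']
print(regex_tokens)       # ['Don', "'", 't', 'stop', '!']
print(chinese_tokens)     # ['我喜欢猫']
```

Neither rule gives an obviously correct result for the contraction, and neither can segment the Chinese sentence at all.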

Evolution of Tokenization

Tokenization has evolved from simple word splitting to more sophisticated subword methods. These methods allow AI models like GPT-4 to better understand and generate human language. Practical considerations include:

- Token limits: Each AI model has a maximum number of tokens it can process at once.

- Cost: Many AI services charge based on token usage.
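
Both considerations come down to simple arithmetic on token counts. The sketch below is a rough illustration: the function name `estimate_request`, the 4,096-token limit, and the price per 1,000 tokens are all hypothetical values chosen for the example, and actual limits and pricing vary by model and provider.

```python
def estimate_request(
    prompt_tokens: int,
    max_output_tokens: int,
    context_limit: int,
    price_per_1k_tokens: float,
) -> dict:
    """Check a request against a token limit and estimate its cost."""
    total = prompt_tokens + max_output_tokens
    return {
        "total_tokens": total,
        "fits": total <= context_limit,
        "estimated_cost": total / 1000 * price_per_1k_tokens,
    }


# Hypothetical numbers: a 1,500-token prompt with up to 500 output tokens.
result = estimate_request(
    prompt_tokens=1500,
    max_output_tokens=500,
    context_limit=4096,
    price_per_1k_tokens=0.002,
)
print(result["total_tokens"], result["fits"])
```

A single flat price is assumed here for simplicity; a service may price input and output tokens differently.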

Links

datavisor.com/wiki/tokenization/

mckinsey.com/featured-insights/mckinsey-explainers/what-is-tokenization

airbyte.com/data-engineering-resources/ai-tokenization

towardsdatascience.com/the-art-of-tokenization-breaking-down-text-for-ai-43c7bccaed25

datacamp.com/blog/what-is-tokenization

youtube.com/watch?v=VFp38yj8h3A

neptune.ai/blog/tokenization-in-nlp