Jensen Huang

From Pixels to Intelligence

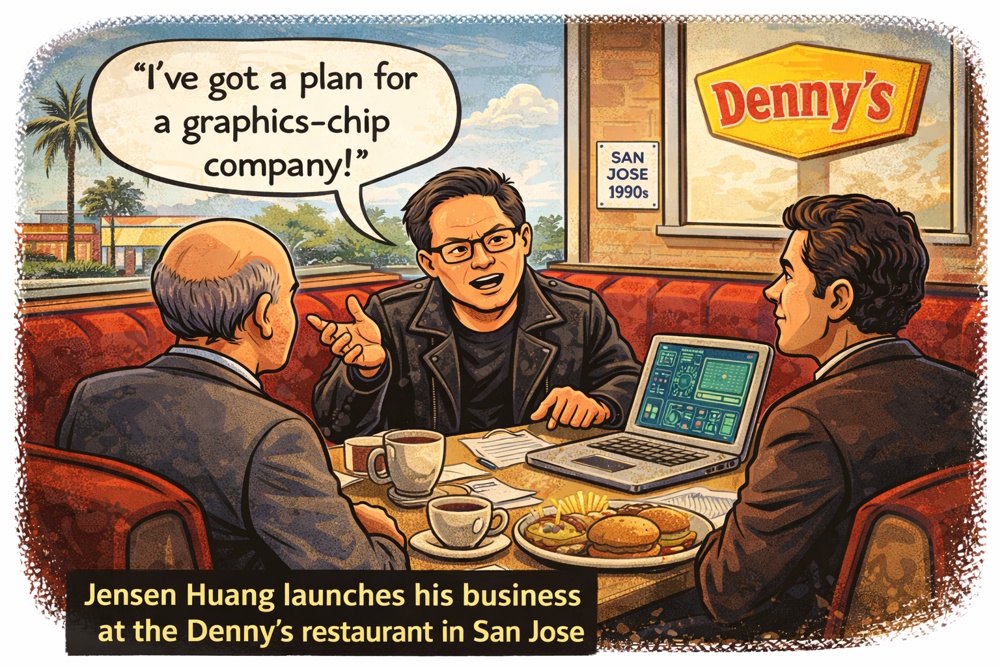

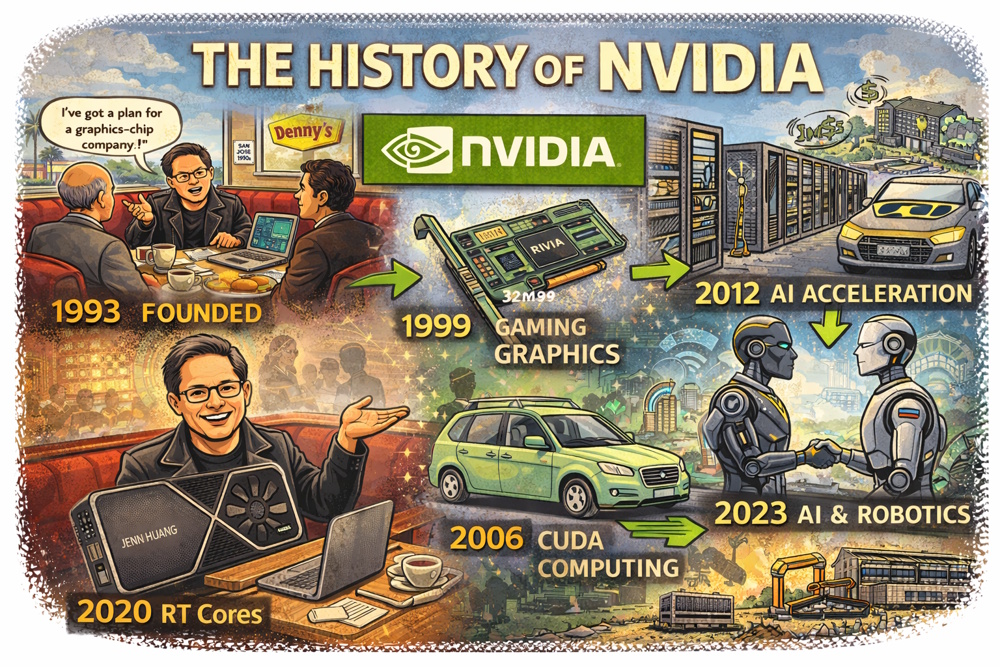

Jensen Huang's story reads like a modern-day fairy tale. It all begins with a Grand Slam breakfast. The setting is a nondescript (now famous) Denny's restaurant in San Jose, California. The date is April 1993. Three engineers (Jensen Huang, Chris Malachowsky, and Curtis Priem) meet over a meal to discuss an audacious business idea.

Jensen Huang is 30 years old. Born in Taiwan in 1963, he came to America as a child, sent by his parents to live with an uncle in Tacoma, Washington, a journey he later described as "basically being orphaned." He spent a year at a Kentucky boarding school that was essentially a reform school, though he wasn't a troublemaker. He learned to survive, to adapt, and to compete.

He studied electrical engineering at Oregon State, then Stanford. He worked at LSI Logic and AMD, learning the semiconductor business. He watched the computer graphics industry emerge and saw something others missed: 3D graphics would become essential, and no one was building the chips to make it happen.

At Denny's, the three men sketch their vision on napkins. They will build a company focused entirely on graphics acceleration. The personal computer is exploding, but its graphics are terrible: slow, blocky, and primitive. Every computer needs better graphics. They will build the chips and the boards to deliver them.

They name the company NVIDIA—"NV" for "next version," wrapped in the Latin word for "envy."

The Near-Death Experience

The early years nearly kill them. Their first chip, NV1, flops commercially. It uses quadratic texture mapping just as the industry standardizes on triangle-based rendering: technically elegant but incompatible. Its successor, NV2, is built for a Sega game console, but Sega drops it and the chip never ships. By 1996, NVIDIA is running out of money. The fledgling business is at a crossroads.

They have one more chance. Huang makes a desperate pitch to their investors: give us six months and enough money for one more chip. If it fails, we'll shut down the company and return whatever money is left. The investors agree to a deal.

RIVA 128: Survival

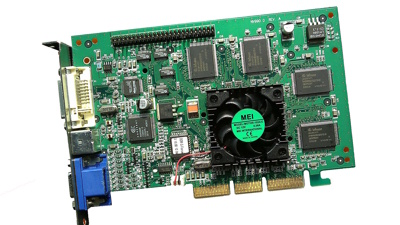

Codenamed NV3, the RIVA 128 represented a total reset. The name RIVA stood for Real-time Interactive Video and Animation, signaling NVIDIA's ambition to unify multimedia and 3D performance. The RIVA 128 embraced triangle-based rendering to support Direct3D and OpenGL. It integrated 2D, 3D, and video acceleration, a major differentiator at the time. It supported AGP, taking advantage of the new high-bandwidth graphics bus. And it paired a high clock speed (100 MHz core) with 4 MB of SGRAM on a 128-bit interface, delivering major performance improvements.

In 1997, the RIVA 128 ships. It's fast, affordable, and compatible with DirectX and OpenGL, the industry standards. It succeeds, and NVIDIA survives. But survival isn't enough. Huang wants more.

The GPU Revolution (1999-2006)

GeForce 256: Inventing the GPU

In August of 1999, NVIDIA announces the GeForce 256 and makes a bold claim—this is the world's first "GPU" (Graphics Processing Unit).

The term is popularized by NVIDIA's marketing department, but it represents a genuine conceptual leap. Previous graphics cards were accelerators: they helped the CPU with graphics tasks. The GeForce 256 is different. It's a processor in its own right, handling transformation, lighting, and rendering on its own.

The revolutionary insight: Graphics is parallel processing. A screen has millions of pixels, and each pixel's color can be calculated independently of the others. So instead of one fast processor doing calculations sequentially (like a CPU), a GPU uses many simpler processing units (thousands, in modern GPUs) performing calculations simultaneously.
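To make the per-pixel independence concrete, here is a minimal Python sketch. The `shade` function, the tiny 8x4 screen, and the thread pool are illustrative assumptions, not how a real GPU is programmed: threads stand in for GPU cores, and because CPython threads largely take turns, the point is that the pixels can be computed by any number of workers with the same result, not that this version runs faster.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # a tiny hypothetical "screen"


def shade(x: int, y: int) -> int:
    """Toy pixel shader: the brightness depends only on this pixel's coordinates."""
    return (x * 255 // (WIDTH - 1) + y * 255 // (HEIGHT - 1)) // 2


def render_sequential() -> list[list[int]]:
    # CPU-style: one worker visits every pixel in order.
    return [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]


def render_parallel(workers: int = 4) -> list[list[int]]:
    # GPU-style (simulated): each pixel is an independent task, so tasks can be
    # spread across workers with no coordination between them.
    coords = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda c: shade(*c), coords))  # map keeps input order
    # Regroup the flat results into rows.
    return [flat[row * WIDTH:(row + 1) * WIDTH] for row in range(HEIGHT)]


if __name__ == "__main__":
    assert render_parallel() == render_sequential()
    for row in render_sequential():
        print(row)
```

Because no pixel reads another pixel's result, the work divides cleanly however many workers there are; that property, scaled to millions of pixels and thousands of hardware cores, is what the GPU is built around.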

The GeForce 256 has 23 million transistors, more than double the 9.5 million in the original Pentium III CPU released earlier that year. It can process 10 million polygons per second. Video games suddenly look dramatically better.

NVIDIA, which went public in January 1999, sees its stock soar. The company is no longer merely surviving; it's the leader in its market segment.

The Xbox Partnership

The industry takes notice. A year later, giant Microsoft approaches tiny NVIDIA to build the graphics chip for the original Xbox. It's a prestige project but a punishing contract: the pricing is tight and the demands extreme.

The Xbox launches in 2001. The graphics chip (named NV2A) is impressive, but NVIDIA loses money on every unit. Huang later calls it "the worst business decision we ever made" financially, but he admits it established NVIDIA as a serious player.

The lesson learned: Control your own destiny. Never again be dependent on a single partner's demands.

CUDA: The Secret Weapon (2006)

The year 2006 is where the story pivots—where a gaming company plants the seeds of an AI revolution.

That year, NVIDIA introduces CUDA (Compute Unified Device Architecture), a programming platform that lets developers use NVIDIA GPUs for general-purpose computing, not just computer graphics.
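CUDA's core idea can be sketched without a GPU. In CUDA C++, a kernel is a function that many threads run at once, each locating its own data element from the built-in variables `blockIdx.x`, `blockDim.x`, and `threadIdx.x`. The Python below is only a simulation of that model: `vector_add_kernel` and `launch` are hypothetical names, and `launch` loops sequentially over the (block, thread) pairs that a GPU would run in parallel, standing in for CUDA's `kernel<<<grid, block>>>(...)` launch syntax.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body of a hypothetical CUDA-style kernel, simulated in Python.

    Each GPU thread computes its own global index, mirroring
    blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
    """
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may have spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for kernel<<<grid_dim, block_dim>>>(*args).

    A GPU runs these (block, thread) instances concurrently; looping over
    them gives the same answer because each instance writes only its own
    output element.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


if __name__ == "__main__":
    n = 10
    a = [float(i) for i in range(n)]
    b = [2.0 * i for i in range(n)]
    out = [0.0] * n
    block_dim = 4                                # threads per block
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    print(out)
```

The bounds check matters because work is launched in whole blocks: here 3 blocks of 4 threads cover 12 slots for 10 elements, and the two spare threads simply do nothing.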

The industry's reaction: confusion.

Why would a gaming GPU company care about scientific computing? The prevailing view is that GPUs are designed for rendering explosions in video games, not for serious computation. The market for general-purpose GPU computing seems tiny. Huang faces internal resistance, because CUDA requires massive R&D investment: hundreds of engineers building compilers, libraries, and tools. The payoff is unclear. Board members question the strategy. Has NVIDIA become a software company like Microsoft?

Huang sees something others don't. He understands that the GPU's parallel architecture, built for rendering millions of pixels, is perfect for other massively parallel problems like physics simulations, molecular modeling, weather forecasting, financial analysis, and much more. Among them, though few are thinking about it yet, are neural networks.

The bet: Invest heavily in CUDA, build the ecosystem, and make NVIDIA GPUs the standard for scientific computing. The applications will follow. It's an act of faith, backed by hundreds of millions of dollars in R&D with no clear ROI. It's a risk NVIDIA is willing to take.

The Wilderness Years (2006-2011)

CUDA adoption is slow. Some scientists use it. Some researchers, too. Yet it's niche. The gaming business remains NVIDIA's bread and butter.

And NVIDIA faces serious competition. AMD acquires ATI, NVIDIA's chief graphics rival, in 2006, creating a company that can pair its own CPUs with graphics. Intel looms as a perpetual threat: with its CPU dominance, it could crush NVIDIA if it chose to. NVIDIA pushes into other markets with mobile chips (Tegra), automotive computing, and professional visualization, searching for the next big platform to diversify beyond gaming.

Huang runs NVIDIA with intensity. He's famous for his leather jacket—always black, always the same style. "My wife and daughter are responsible for my style," he once said. His management style is demanding and detail-oriented. He can be relentless. He sends emails at all hours. He reviews technical decisions personally. NVIDIA employees joke that nothing happens without Jensen knowing about it.

At this stage, the company is successful, but not revolutionary. Revenue grows steadily, although unspectacularly. In 2011, NVIDIA's market cap is about $10 billion. Then everything changes.

The AI Awakening (2012-2020)

AlexNet: The Revelation

In September 2012, the ImageNet competition results are announced. A team from Professor Geoffrey Hinton's lab at the University of Toronto has built a deep neural network called AlexNet, and it wins by a stunning margin: a top-5 error rate of 15.3 percent, against 26.2 percent for the runner-up.

The critical detail: They trained AlexNet on NVIDIA GPUs.

Hinton and his students, Alex Krizhevsky and Ilya Sutskever, showed that GPUs were the perfect vehicle for training neural networks. The parallel architecture that rendered pixels could also perform the calculations for AlexNet's roughly 60 million weights in parallel. Training that would have taken weeks on CPUs took days on GPUs.

They used two NVIDIA GTX 580 GPUs—gaming cards that cost about $500 each.

When the AI research community views AlexNet's results, they scramble to replicate the approach. Suddenly, every AI researcher needs GPUs. NVIDIA GPUs.

Huang realizes instantly: This is the moment. This is what CUDA was for. This is the revolution.

The Pivot

NVIDIA doesn't abandon gaming—it remains hugely profitable—but the company's strategic focus shifts dramatically to AI.

From 2013 to 2015, NVIDIA begins redesigning their GPU architecture specifically for deep learning. They release the Tesla K40 and K80 GPUs, designed for data centers, not gaming.

Over the next few GPU generations, they build specialized hardware features:

Tensor cores (introduced with Volta, 2017): Hardware acceleration specifically for matrix multiplication (the core operation in neural networks)

NVLink (introduced with Pascal, 2016): High-speed interconnects between GPUs

Better memory bandwidth and capacity
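Why tensor cores matter comes down to one operation. A fully connected neural-network layer is a matrix multiplication followed by a bias and an activation, and every output entry is an independent dot product. The plain-Python sketch below illustrates this; `matmul` and `dense_layer` are hypothetical helpers, and real frameworks hand this work to NVIDIA libraries such as cuBLAS and cuDNN rather than computing it element by element.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix multiply: C[i][j] = sum over k of A[i][k] * B[k][j]."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    cols = len(b[0])
    # Each C[i][j] is an independent dot product, so all of them can be
    # computed at the same time; GPUs exploit exactly this.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def dense_layer(inputs, weights, bias):
    """One fully connected layer: ReLU(inputs @ weights + bias)."""
    z = matmul(inputs, weights)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in z]


if __name__ == "__main__":
    inputs = [[1.0, 2.0, 3.0], [4.0, -2.0, 0.0]]     # batch of 2 examples, 3 features
    weights = [[1.0, -1.0], [0.5, 0.0], [0.0, 2.0]]  # 3 inputs -> 2 outputs
    bias = [0.5, -1.0]
    print(dense_layer(inputs, weights, bias))  # [[2.5, 4.0], [3.5, 0.0]]
```

A deep network stacks many such layers, so nearly all of its arithmetic is matrix multiplication. Tensor cores speed this up by multiplying small tiles of matrices as a single hardware operation instead of one multiply-add at a time.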

They expand CUDA with libraries specifically for deep learning:

cuDNN: Deep neural network primitives

TensorRT: Inference optimization

NCCL: Multi-GPU communication

The strategy: Make NVIDIA GPUs the easiest, fastest way to do deep learning. Integrate with every framework (TensorFlow, PyTorch, Caffe). Provide free tools, extensive documentation, and educational resources to fast-track development.

Huang's Transformation

Jensen Huang suddenly becomes AI's chief evangelist. He speaks at AI conferences, not just gaming conventions. He hosts GTC (GPU Technology Conference), transforming it from a developer event into a major AI showcase.

His keynotes become legendary:

He gives live demos

He explains the technology personally, in depth

He makes audacious predictions about AI's impact

He wears the black leather jacket, by now a trademark

The persona crystallizes as part CEO, part engineer, and part prophet. He speaks about AI with genuine excitement, technical depth, and absolute conviction that NVIDIA is enabling a revolution.

2016 is another watershed year for AI. AlphaGo defeats Lee Sedol, reportedly using 1,920 CPUs and 280 NVIDIA GPUs. Huang presents Lee Sedol with an NVIDIA GPU as a gift, a symbolic gesture recognizing the human champion while highlighting NVIDIA's role in the event.

The Gold Rush

From 2016 to 2020, AI moves from research labs into industry. Every major tech company—Google, Facebook, Microsoft, Amazon, Baidu—races to build AI capabilities. They all need GPUs. Lots and lots of GPUs.

The economics shift: Gaming GPUs cost hundreds. Data center GPUs cost thousands. The Tesla V100, introduced in 2017, costs $10,000-15,000 per unit. Companies buy thousands of them.

During this period, NVIDIA's data center revenue explodes:

2015: $339 million

2016: $830 million

2017: $1.9 billion

2018: $2.9 billion

2020: $6.7 billion

The transformation is extraordinary. NVIDIA transitions from a gaming company that dabbles in computing to a computing company that also does gaming.

Huang's 2006 bet on CUDA, once criticized as too expensive and risky, pays off beyond anyone's imagination, including his own.

The Cryptocurrency Complication (2017-2018)

An unexpected development arrives during this period. Cryptocurrency mining also needs parallel processing, and miners discover that NVIDIA GPUs can mine Ethereum efficiently.

Demand explodes. Gaming GPU prices skyrocket. Gamers can't buy graphics cards because miners snap them all up. NVIDIA's revenue surges, but it's unstable. Huang warns investors that crypto demand is unpredictable.

In 2018, crypto crashes and takes GPU demand with it. Within months, NVIDIA's stock plunges 50%. Revenue misses expectations. It's a painful reminder of the risks of volatile markets.

But the AI demand remains solid. The crypto bubble pops, but the AI transformation continues. NVIDIA weathers the storm.

The Intelligence Age (2020-Present)

The ChatGPT Moment

On November 30, 2022, OpenAI releases a chatbot called ChatGPT. Within five days it has a million users, and it's clear that AI has fundamentally changed. This isn't a research demo. This is a product that anyone can use, and it's shockingly capable.

The question everyone asks: How was it trained? The answer: Tens of thousands of NVIDIA GPUs.

Suddenly, every company needs an AI strategy. And every AI strategy requires GPUs, specifically NVIDIA GPUs. NVIDIA holds an estimated 95% share of the AI training market.

The demand becomes frenzied:

Microsoft buys tens of thousands of GPUs for OpenAI and its own AI products

Google, already a major customer, orders more

Meta announces massive GPU purchases

Amazon, Oracle, and every other cloud provider expand their GPU offerings

Startups raise funding just to secure GPU allocations

The Supply Crisis

Almost overnight, NVIDIA can't make GPUs fast enough. Lead times stretch to months. The most advanced chips—the H100 (announced in 2022)—are virtually unobtainable. Each H100 costs $25,000-40,000, and they're sold out through 2024.

Companies get creative:

They buy lower-tier GPUs as placeholders

They beg for allocations

They pay premiums to cloud providers

They wait

Jensen Huang becomes perhaps the most powerful person in tech. He decides who gets GPUs and when. Every CEO wants to be his friend, leather jacket or not.

The Ascent

The numbers become absurd:

NVIDIA's market capitalization:

2019: $110 billion

2020: $322 billion

2022: $360 billion (pre-ChatGPT)

2023: $1.2 trillion (becomes a trillion-dollar company)

2024: Peaks above $3 trillion, becoming the world's most valuable company

2025: Passes the $5 trillion mark

Data center revenue (fiscal year):

2023: $15 billion

2024: $47.5 billion

2025: $115 billion

Jensen Huang's net worth exceeds $100 billion. A gaming GPU company is now worth more than entire industries, and its market value exceeds the annual GDP of countries like Germany, Japan, and India.

Beyond the Chip: The Full Stack

NVIDIA's strategy evolves beyond selling GPUs:

DGX Systems: Complete AI supercomputers; racks of GPUs with networking, optimized for AI training. Price: $200,000 to millions. Jensen, at the urging of Elon Musk, personally delivers a DGX-1 to Sam Altman at OpenAI.

NVIDIA AI Enterprise: Software stack and support for running AI in production.

Omniverse: Platform for 3D design and simulation.

Drive: Autonomous vehicle computing platform.

Grace CPU: NVIDIA designs its own CPUs to pair with GPUs, reducing dependency on Intel/AMD.

Networking: Acquisition of Mellanox (2020, $7 billion) for high-speed data center interconnects.

The vision: Don't just sell GPUs. Sell complete AI infrastructure.

The Revolutionary Significance

1. The Platform Power

NVIDIA achieved something extraordinarily rare: platform dominance in an emerging technology.

When deep learning emerged, NVIDIA had:

The hardware (GPUs with parallel architecture)

The software (CUDA, developed years earlier)

The ecosystem (libraries, tools, developer community)

The manufacturing partnerships (TSMC for chip production)

Competitors couldn't just build better chips. They had to replicate an entire ecosystem that NVIDIA had spent 15 years building. By the time they tried, NVIDIA was generations ahead.

Google built TPUs (Tensor Processing Units) for its own workloads. They're excellent, but outside Google they're available only through Google Cloud. Amazon built Trainium, available only through AWS. Same story. The open market remains NVIDIA's domain.

2. The Accidental Revolutionary

Here's the extraordinary part: NVIDIA didn't predict the AI revolution.

Huang bet on CUDA because he believed GPUs could be useful for scientific computing generally. Neural networks were just one possible application among many.

When AlexNet succeeded in 2012, NVIDIA was perfectly positioned, thanks to strategic foresight about parallel computing rather than any specific prediction about deep learning.

The lesson: Sometimes revolution comes from building general capabilities and platforms, then serendipitously being ready when unexpected applications emerge.

3. The Timing Miracle

If AlexNet had happened in 2002, CUDA wouldn't have existed. If it had happened in 2022, competitors might have caught up. But 2012 was perfect timing: CUDA was mature enough to be useful, and competitors hadn't yet seized the opportunity.

Huang's 2006 investment in CUDA created a six-year head start that compounded into an insurmountable advantage.

4. The Vertical Integration

NVIDIA controls:

Chip architecture design

Software stack (CUDA, cuDNN, etc.)

System design (DGX)

Networking (Mellanox)

Even some applications (Omniverse)

This vertical integration creates a moat. Competitors can copy one piece, but not the integrated whole. NVIDIA has built the kind of vertical integration in AI infrastructure that Apple built for consumer devices.

5. The Democratization Paradox

NVIDIA's GPUs both democratized and concentrated AI:

Democratized: Any researcher with a few thousand dollars could train state-of-the-art models. Before GPUs, you needed supercomputers. AlexNet was trained on about $1,000 worth of consumer graphics cards.

Concentrated: The largest models require thousands of GPUs and hundreds of millions of dollars. Only the wealthiest companies can train frontier models. OpenAI's GPT-4 reportedly cost over $100 million in computing.

NVIDIA enabled both the grad student experimenting in their dorm and the mega-corporation spending billions.

6. The Business Model Evolution

NVIDIA transformed from:

Consumer hardware (gaming GPUs, $300-1,500)

To enterprise hardware (data center GPUs, $10,000-40,000)

To complete systems (DGX, $200,000+)

To infrastructure + software (NVIDIA AI Enterprise, ongoing subscriptions)

Each transition increased margins and customer lock-in. Gaming remains profitable, but data center is the growth engine and profit center.

7. Huang as Leader

Jensen Huang's role was critical:

Technical credibility: He's an engineer who understands the technology deeply. His keynotes include technical details most CEOs would skip, and developers love them.

Long-term vision: The CUDA investment required patience and conviction. Many CEOs would have killed it after year three due to minimal revenue.

Execution obsession: NVIDIA ships on aggressive schedules. The industry cadence (a new GPU architecture every two years) is punishing, but NVIDIA maintains it.

Marketing genius: The leather jacket. The theatrical keynotes. The "iPhone moment of AI" messaging. The AI layer cake analogy. He has made GPUs exciting.

Ecosystem thinking: He understood that dominance requires more than good chips. It requires tools, libraries, partnerships, and developer advocacy. He has a vision of AI Factories and the American tech stack becoming the global standard.

8. The Competition

Why can't competitors catch up?

Hardware: NVIDIA partners with TSMC for bleeding-edge manufacturing. They design chips optimized for AI workloads over years of iteration.

Software: CUDA represents nearly 20 years of development. Thousands of engineers. Millions of lines of code. Vast libraries. Deep optimization. This software base can't be replicated quickly.

Ecosystem: Every AI framework, every tutorial, every course assumes NVIDIA GPUs. Developers know CUDA. Switching to another platform is costly.

Network effects: More users → better tools → more users. The flywheel is spinning fast.

Manufacturing: TSMC's most advanced nodes. Enormous capital investment. Long-term partnerships. Competitors can't just order equivalent capacity.

AMD tries with ROCm (its CUDA alternative). It's technically competent but lacks the ecosystem. Market share remains low. Intel tries with oneAPI. Same story. Startups build specialized AI chips. Some are impressive (Cerebras, Graphcore, SambaNova), but they're addressing niche use cases, not displacing NVIDIA. For now, no rival on the horizon seriously threatens its dominance.

9. The Existential Questions

Is NVIDIA vulnerable?

Possibly. The AI field might shift to specialized architectures where general-purpose GPUs aren't optimal. New computing paradigms (quantum, neuromorphic) might emerge. Geopolitical restrictions might fragment the market.

Are they too dominant?

Regulators are watching. An estimated 95% share of the AI training market invites antitrust scrutiny. Cloud providers are developing their own chips, partly to reduce their dependence on NVIDIA, and those chips are gaining ground.

What happens when GPU demand plateaus?

The current growth rate is unsustainable. Eventually, AI infrastructure buildout will slow. NVIDIA's revenue will stabilize or decline, and the stock already prices in years of growth. But Huang has navigated transitions before, from 3D gaming to CUDA to AI. He's proven adept at finding the next wave and riding it.

Epilogue

The arc of NVIDIA is extraordinary: A company founded to make video games look better becomes the supplier of the essential infrastructure of the AI revolution. A CEO whose company nearly went bankrupt in 1996 becomes one of the world's wealthiest individuals and leads it to become the world's most valuable company. Gaming GPUs designed to render explosions and race cars now train models that write poetry, diagnose diseases, drive cars, and recognize cats.

The revolutionary significance: NVIDIA demonstrates that platform companies (those that build general-purpose tools rather than specific applications) can capture enormous value when paradigm shifts occur. It also demonstrates the power of patient capital, technical excellence, and long-term vision. The CUDA investment of 2006 pays off in 2012 and explodes in 2022: sixteen years from a risky bet to windfall profits. Jensen Huang, at 62, stands at the center of the AI revolution because he built the infrastructure that made it possible and was ready when the moment arrived.

In 1993, at that Denny's in San Jose, three engineers wanted to make better graphics for personal computers. Thirty years later, they've helped enable a transformation in human-machine interaction that rivals the personal computer and mobile phone revolutions. The pixels have indeed become intelligence. The gaming company became the AI company. And the kid sent from Taiwan to America, who learned to survive and compete, built one of the most valuable and strategically important companies in history.

The revolution continues. NVIDIA's Blackwell GPUs, announced in 2024, and the Vera Rubin generation expected in 2026 promise another giant leap forward. The cycle accelerates, yet the core insight remains: parallel processing at scale unlocks capabilities we're only beginning to understand.

Huang once said: "The more you buy, the more you save"—a joke about GPU prices, but also a deeper truth about AI. The companies investing most heavily in NVIDIA's infrastructure are building the most capable AI systems, which generate more value, justifying more investment, in a virtuous cycle. Or in an alarming concentration of power, depending on your perspective. Either way, it's undeniably revolutionary. And Jensen Huang, in his leather jacket, standing on stage demonstrating the latest GPU while explaining tensor operations and transformer architectures, embodies the revolution with technical depth, business acumen, theatrical flair, and relentless execution. Black leather jacket and all.

Links

NVIDIA company.

AI Infrastructure page.

Data centers and the Environment.

AI in America book has a section dedicated to NVIDIA.

AI Stories about NVIDIA:

- NVIDIA challenges Grok-4: When Jensen Huang decided to personally stress-test Grok-4 at xAI

- The Day the First AI Supercomputer Got Delivered

- A Tale of Three Chips