Edge AI

Integrates AI with Edge Computing

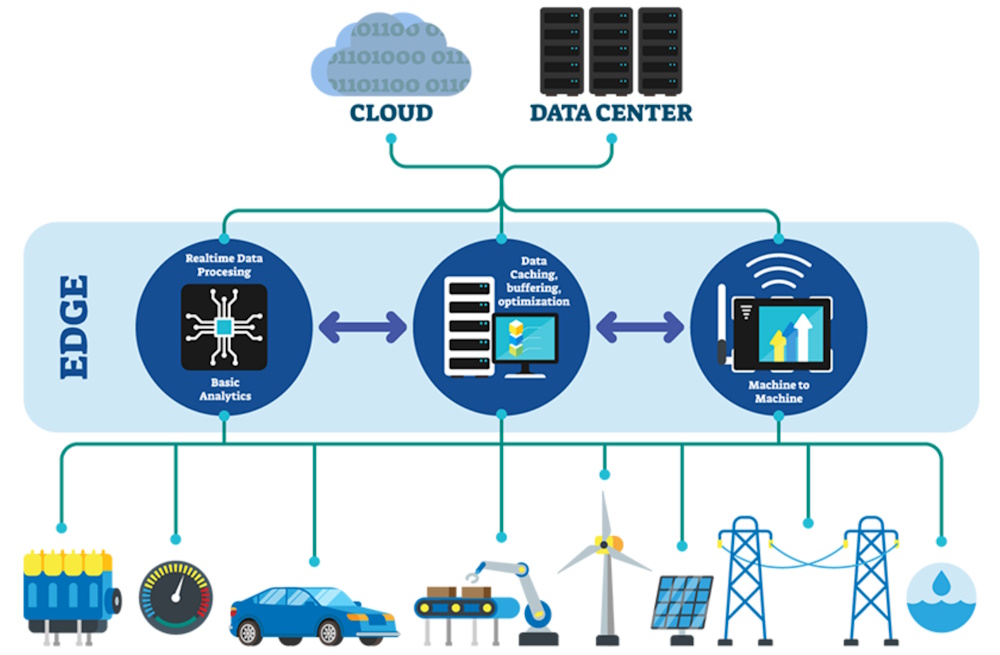

Edge AI, or on-device AI, refers to the deployment of AI algorithms and models on devices at the "edge" of a network, typically local devices such as smartphones and wearables, rather than in cloud-based or centralized data centers.

The AI models are trained with machine learning on large datasets, typically in the cloud or a data center, and then deployed to the devices' onboard memory. Once deployed, these models can analyze sensor data, images, audio, and other inputs locally, enabling devices to perform image and speech recognition, predictive maintenance, and more.

These edge devices process data locally and make decisions in real time, without requiring a continuous connection to the cloud. Examples of edge devices include smartphones, IoT (Internet of Things) devices, autonomous vehicles, drones, industrial robots, sensors, and small embedded systems and single-board computers such as the Raspberry Pi.

Benefits of Edge AI

Edge AI changes how artificial intelligence operates by moving computation closer to where data is generated instead of relying solely on distant cloud servers. This architectural change delivers one of its most important benefits: real-time responsiveness. Because data is processed locally, devices can analyze information and act within milliseconds, which is essential for applications like autonomous vehicles, industrial automation, and smart cameras. Local processing also lets AI-powered devices work without constant cloud connectivity, enabling faster and more reliable decision-making.
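To make "within milliseconds" concrete, the sketch below computes how far a vehicle moves while it waits for a decision. The 20 ms on-device and 150 ms cloud figures are assumptions chosen for illustration, not benchmarks of any real system.

```python
# Distance a vehicle covers while it waits for a decision.
# The latency figures are illustrative assumptions, not measurements.


def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled at speed_kmh during latency_ms milliseconds."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


ASSUMED_LATENCY_MS = {
    "on-device inference": 20,            # hypothetical local inference time
    "cloud round trip + inference": 150,  # hypothetical network + server time
}

for path, latency in ASSUMED_LATENCY_MS.items():
    metres = distance_during_latency_m(100, latency)
    print(f"{path}: {latency} ms -> {metres:.2f} m travelled at 100 km/h")
```

The distance grows linearly with both speed and latency. Every extra 100 ms of round-trip time at 100 km/h adds close to 3 m of travel before the vehicle can react.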

Another major benefit is enhanced privacy and security. When data stays on the device instead of being transmitted to the cloud, the risk of interception or exposure is significantly reduced. Edge AI minimizes the need to send sensitive information across networks, improving privacy and reducing the attack surface for cyber threats. This makes edge AI especially valuable in sectors like healthcare, finance, and smart homes, where data confidentiality is critical.

Edge AI also improves reliability and resilience. Because devices can operate independently of network conditions, they continue functioning even when connectivity is weak, unstable, or temporarily unavailable. This reduces dependency on cloud infrastructure and ensures consistent performance in environments where connectivity cannot be guaranteed, such as remote locations, moving vehicles, or industrial sites.

A further advantage is lower bandwidth use and operating cost. Because data is processed locally, often only results or summaries need to be sent to the cloud instead of raw sensor streams. This lowers network congestion and cloud-compute expenses, which makes edge AI especially cost-efficient for large-scale deployments of IoT sensors and embedded systems.
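Simple arithmetic gives a sense of the bandwidth savings. This sketch compares a camera that streams raw video around the clock with one that uploads only compact event records produced by an on-device model. The 4 Mbit/s bitrate, 500 events per day, and 1 KB per event are illustrative assumptions.

```python
# Compare daily upload volume: streaming raw video to the cloud vs. sending
# only detection events produced by an on-device model.
# Bitrate, event count, and event size are illustrative assumptions.

SECONDS_PER_DAY = 24 * 60 * 60


def raw_stream_bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes per day for a continuous stream at bitrate_mbps (megabits/s)."""
    return bitrate_mbps * 1_000_000 / 8 * SECONDS_PER_DAY


def event_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes per day when only compact event records are uploaded."""
    return events_per_day * bytes_per_event


raw = raw_stream_bytes_per_day(4.0)       # assumed 4 Mbit/s camera stream
events = event_bytes_per_day(500, 1_000)  # assumed 500 events of ~1 KB each
print(f"raw stream : {raw / 1e9:.1f} GB/day")
print(f"events only: {events / 1e6:.1f} MB/day")
print(f"reduction  : {raw / events:,.0f}x")
```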

Edge AI enables scalable, real-time automation across industries. The global edge AI market is expanding rapidly as businesses increasingly rely on instantaneous data analysis and automation at the source of data generation. This capability opens new possibilities in manufacturing, logistics, retail, and smart infrastructure, and it allows organizations to deploy intelligent systems that react immediately to changing conditions.

- Responsiveness: Devices can analyze information and respond within milliseconds because data is processed locally.

- Scalability: Reduces dependence on centralized infrastructure, enabling wide-scale deployment across devices.

- Energy Efficiency: Modern edge AI chips are designed for low power consumption, extending device battery life.

- Cost-Effectiveness: Reduces the costs associated with cloud storage, bandwidth, and data processing.

- Enhanced Security: Local processing minimizes data transmission risks and potential breaches.

Key Features of Edge AI

Edge AI enables real-time data analysis and decision-making

This is important for applications like autonomous driving, robotics, and video analytics that require immediate feedback.

In healthcare, for example, immediate processing allows changes in a person's health status to be detected promptly, which is needed for early intervention. Local processing is particularly beneficial where rapid response is essential, such as in patient monitoring systems and predictive maintenance in industrial settings. Because less data has to be transferred to central systems, edge computing also limits potential data exposure and enhances privacy. Here are some other key features:

- Reduced Latency: Data processing occurs locally on the device, significantly reducing the delay caused by sending data to a cloud server and waiting for a response.

- Enhanced Privacy: Since data does not need to leave the device, sensitive information is less likely to be exposed to breaches or misuse.

- Lower Bandwidth Usage: By processing data locally, edge AI reduces the need for constant communication with the cloud, conserving bandwidth and lowering costs.

- Offline Functionality: Edge AI systems can function even without an internet connection, which is especially beneficial in remote or unstable network environments.
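Offline functionality often relies on a store-and-forward pattern. Inference and decisions happen locally, and results are queued until a connection is available. The sketch below assumes a hypothetical `send` function that returns `True` on a successful upload. The bounded queue drops the oldest results when it is full, which is one possible policy among several.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Queue locally produced results and upload them when a link is available."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000) -> None:
        self.send = send  # hypothetical uploader: returns True on success
        # Bounded queue: when full, appending silently drops the oldest result.
        self.pending: Deque[dict] = deque(maxlen=capacity)

    def record(self, result: dict, online: bool) -> None:
        """Keep a result produced by on-device inference; flush if online."""
        self.pending.append(result)
        if online:
            self.flush()

    def flush(self) -> int:
        """Upload queued results oldest-first; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break  # keep the item and retry on the next flush
            self.pending.popleft()
            sent += 1
        return sent


# Usage with a stand-in uploader that always succeeds.
uploaded: List[dict] = []


def fake_send(item: dict) -> bool:
    uploaded.append(item)
    return True


buffer = StoreAndForward(fake_send, capacity=3)
buffer.record({"id": 1, "label": "person"}, online=False)   # no link: queued
buffer.record({"id": 2, "label": "vehicle"}, online=False)  # no link: queued
buffer.record({"id": 3, "label": "person"}, online=True)    # link back: all sent
```

The device keeps making decisions whether or not the link is up. The cloud only receives a delayed copy of the results.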

Applications of Edge AI

There are numerous Edge AI devices covering a wide range of applications

- Autonomous Vehicles: Vehicles equipped with Edge AI can process data from cameras, LiDAR, and sensors to make real-time driving decisions without depending on a cloud server.

- Smart Devices: Virtual assistants like Amazon Alexa or Google Assistant use edge processing for wake-word detection.

- Healthcare: Portable medical devices equipped with Edge AI can monitor patients, analyze data, and provide alerts in real time.

- Security: Cameras with Edge AI can perform facial recognition, motion detection, and object tracking directly on the device.

- Manufacturing: AI-powered robots in factories analyze sensor data and optimize processes locally to improve efficiency and reduce downtime.

- Retail: Smart point-of-sale (POS) systems and checkout-free stores (e.g., Amazon Go) use Edge AI for real-time inventory tracking and customer service.

- Drones: Edge AI-powered drones can navigate autonomously, detect objects, and gather environmental data without relying on cloud connectivity.
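The healthcare example above describes a portable device that analyzes data and raises alerts in real time. That behavior can be sketched with a rolling z-score detector running entirely on the device. This is a minimal illustration, not a clinical algorithm. The window length, threshold, minimum standard deviation, and synthetic heart-rate readings are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings far from the mean of a sliding window (rolling z-score).

    Window size, threshold, and min_sigma are illustrative assumptions and
    would need clinical validation before use in a real medical device.
    """

    def __init__(self, window: int = 30, threshold: float = 3.0,
                 min_sigma: float = 1.0) -> None:
        self.history = deque(maxlen=window)  # only the most recent readings
        self.threshold = threshold
        self.min_sigma = min_sigma  # floor so a flat signal does not divide by ~0

    def update(self, value: float) -> bool:
        """Return True if value deviates more than `threshold` std devs."""
        anomalous = False
        if len(self.history) >= 5:  # wait for a few samples before judging
            mu = mean(self.history)
            sigma = max(pstdev(self.history), self.min_sigma)
            anomalous = abs(value - mu) / sigma > self.threshold
        self.history.append(value)  # every reading, flagged or not, joins the window
        return anomalous


# Synthetic heart-rate stream (beats per minute) with one sudden spike.
detector = RollingAnomalyDetector(window=20, threshold=3.0)
readings = [72, 74, 71, 73, 75, 72, 74, 73, 140]
flags = [detector.update(r) for r in readings]
```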

Getting Started with Edge AI

For anyone who wants hands-on knowledge and experience with Edge AI applications

❶ Understand Edge AI Applications

Familiarize yourself with common applications for Edge AI, such as smart home devices (voice assistants, security cameras with facial recognition), Industrial IoT (predictive maintenance in manufacturing), healthcare (wearable health monitoring devices), and retail (customer tracking and personalized recommendations).

❷ Select the Right Hardware

Choose hardware devices that meet your AI requirements (see below). For example:

- Microcontrollers and small boards: Arduino and ESP32 (microcontrollers) or a Raspberry Pi (a low-cost single-board computer), for lightweight tasks.

- Single-Board Computers: NVIDIA Jetson Nano, Google Coral Dev Board (for computer vision).

- AI Accelerators and Processors: Intel Movidius Myriad vision processing units (VPUs), Qualcomm Snapdragon processors with integrated AI engines.

❸ Choose AI Frameworks for Edge Deployment

Many frameworks are optimized for edge environments. These include:

- TensorFlow Lite: For running TensorFlow models on mobile and IoT devices.

- PyTorch Mobile: A lightweight version of PyTorch for running models on mobile devices.

- OpenVINO: Intel's toolkit for optimized inference.

- ONNX Runtime: For cross-platform model deployment.

- Edge Impulse: A development platform for sensor and IoT AI applications.

❹ Optimize AI Models for Edge Devices

Apply optimization techniques such as quantization, pruning, and knowledge distillation to make models efficient enough for deployment.

- Quantization reduces the precision of the numerical values (weights and activations) used in AI models, typically moving from floating-point numbers (like 32-bit floating-point) to lower precision formats (like 8-bit integers).

- Pruning removes unnecessary parameters (weights or neurons) from a neural network to make it smaller and more efficient.

- Knowledge distillation transfers knowledge from a larger, more accurate model (called the teacher model) to a smaller, more efficient model (the student model).
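All three techniques can be illustrated on plain Python lists. This is a minimal sketch under simplifying assumptions, not the way TensorFlow Lite, PyTorch, or any other toolkit implements them. Real toolkits operate on tensors, often quantize weights per channel rather than per tensor, and may prune in structured patterns or gradually during training. They also usually combine the distillation term below with an ordinary cross-entropy loss on the true labels. The example weights and logits are made up.

```python
import math
from typing import List, Sequence, Tuple


# --- Quantization: float -> int8 (affine, one scale for the whole tensor) ---
def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 codes with q = round(x / scale) + zero_point."""
    lo = min(min(values), 0.0)  # the representable range must include 0.0
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # 256 levels; guard against an all-zero tensor
    zero_point = round(-lo / scale) - 128  # int8 code that represents 0.0
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats: x ~= (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in codes]


# --- Pruning: unstructured, by weight magnitude ------------------------------
def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero the `sparsity` fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


# --- Knowledge distillation: soft-target loss --------------------------------
def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.82, -0.05, 0.31, -0.77, 0.02, 0.45]
codes, scale, zp = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zp)
pruned = magnitude_prune(weights, sparsity=0.5)
loss = distillation_loss([1.0, 0.5, -0.2], [2.0, 0.3, -1.0])
```

The T² factor follows the common convention of keeping gradient magnitudes comparable when the temperature changes. Storing int8 codes instead of 32-bit floats cuts weight storage by a factor of four.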

❺ Deploy AI Models

Load and run the AI model directly on the edge device, for example with the TensorFlow Lite interpreter for real-time inference. Where available, use device-specific configurations, such as GPU acceleration on NVIDIA Jetson boards.

❻ Testing and Monitoring

Test the accuracy and inference speed on the device. Monitor power usage and latency. Debug issues related to performance or memory constraints.
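A simple way to measure inference speed is to time many repeated calls and report tail latency alongside the average, because real-time systems are constrained by their slowest responses. The sketch below assumes a hypothetical `infer` callable that runs one inference, and the workload used here is only a placeholder. Power measurement is not covered, since it usually requires hardware-specific tools.

```python
import math
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 10,
              runs: int = 100) -> Dict[str, float]:
    """Time repeated calls to `infer` and summarize latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # discard first calls (cold caches, lazy allocation)
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = math.ceil(0.95 * len(samples)) - 1  # nearest-rank percentile
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


# Stand-in workload; on a real device `infer` might wrap a single
# interpreter.invoke() call on a preloaded model.
stats = benchmark(lambda: sum(i * i for i in range(10_000)), warmup=2, runs=20)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```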

❼ Maintain and Update Models

Edge AI systems need regular updates to maintain and improve performance. You can create pipelines for over-the-air (OTA) updates and monitor device performance to detect drift in model accuracy.
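On-device models often receive no ground-truth labels. One proxy for drift is to compare the distribution of the model's outputs, such as its confidence scores, against a reference captured at deployment. The sketch below uses the Population Stability Index (PSI) for that comparison. The bin edges, sample scores, and 0.25 alert threshold (a commonly cited rule of thumb) are assumptions, not values from this article.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values per bin [edges[i], edges[i+1]); the last bin is closed.

    Values outside [edges[0], edges[-1]] are ignored in this sketch.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            in_last_bin = i == len(counts) - 1 and v == edges[-1]
            if edges[i] <= v < edges[i + 1] or in_last_bin:
                counts[i] += 1
                break
    total = sum(counts) or 1
    return [c / total for c in counts]


def population_stability_index(reference: Sequence[float], live: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-4) -> float:
    """PSI = sum((live_i - ref_i) * ln(live_i / ref_i)) over bins."""
    ref = [max(f, eps) for f in bin_fractions(reference, edges)]  # eps avoids log(0)
    cur = [max(f, eps) for f in bin_fractions(live, edges)]
    return sum((c - r) * math.log(c / r) for c, r in zip(cur, ref))


DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cut-off; tune per application
EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]  # bins over model confidence scores

reference_scores = [0.1, 0.3, 0.6, 0.9] * 25  # hypothetical: captured at release
live_scores = [0.9] * 70 + [0.6] * 30         # hypothetical: observed on device now
psi = population_stability_index(reference_scores, live_scores, EDGES)
drifted = psi > DRIFT_THRESHOLD
```

A drift flag like this can trigger closer inspection, data collection, or retraining, which then feeds the OTA update pipeline.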

Edge AI Hardware

Hardware capable of running AI models efficiently and economically

Some popular hardware platforms include:

- NVIDIA Jetson Series: Compact AI modules for autonomous machines and edge devices.

- Google Coral: Edge TPU-based hardware for efficient ML inference on edge devices.

- Intel Movidius Myriad: Chips designed for computer vision and AI tasks at the edge.

- Raspberry Pi with AI Accelerators: Affordable and customizable for lightweight AI applications.

- Qualcomm Snapdragon: Processors with integrated AI engines, widely used in smartphones and IoT devices.

Challenges of Edge AI

Edge AI introduces powerful capabilities, but it also brings a set of complex challenges that organizations must overcome to deploy it effectively. One of the most significant challenges is resource limitation. Unlike cloud servers, edge devices often have constrained processing power, memory, and energy availability. Moving machine learning training and inference to the edge requires models and hardware that can operate under tight computational budgets. This makes it difficult to run large or complex AI models without careful optimization, pruning, or the use of specialized accelerators.
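A quick calculation shows why optimization matters under tight memory budgets: weight storage scales with parameter count times bits per parameter. The 25-million-parameter model and the 30 MB budget below are hypothetical. The estimate also ignores activations, the runtime, and operating-system overhead.

```python
from typing import Optional, Sequence


def weight_storage_mb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in MB (1 MB = 10**6 bytes).

    Counts weights only; activations, the runtime, and the OS need memory too.
    """
    return num_params * bits_per_param / 8 / 1_000_000


def highest_precision_that_fits(num_params: int, budget_mb: float,
                                bit_widths: Sequence[int] = (32, 16, 8, 4)) -> Optional[int]:
    """Return the widest bit width whose weights fit the budget, or None."""
    for bits in sorted(bit_widths, reverse=True):
        if weight_storage_mb(num_params, bits) <= budget_mb:
            return bits
    return None


PARAMS = 25_000_000  # hypothetical 25M-parameter vision model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_storage_mb(PARAMS, bits):.1f} MB")
print("fits a 30 MB budget at:", highest_precision_that_fits(PARAMS, 30), "bits")
```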

Another major challenge is system complexity. Edge AI systems must operate across a wide variety of devices, sensors, and network conditions. Deploying AI at the edge involves managing diverse hardware architectures, inconsistent connectivity, and varying operating environments. This complicates development, testing, and maintenance, especially when AI models must be updated or retrained across thousands of distributed devices.

Security and privacy risks also become more difficult to manage. While edge AI reduces the need to send data to the cloud, it increases the number of physical and digital endpoints that must be secured. Moving AI to the edge expands the attack surface, requiring new approaches to protect data, models, and devices in real-time environments. Edge devices may be deployed in public or unmonitored locations, making them vulnerable to tampering, theft, or adversarial attacks.
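One basic defence against tampering is to refuse to load a model file whose cryptographic hash does not match a known-good value. This sketch uses SHA-256 from Python's standard library. It assumes the expected digest arrives through a trusted channel, such as a signed update manifest, which is outside the scope of the example. A hash check detects modification, but on its own it does not prove who produced the file.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def verify_model(model_bytes: bytes, expected_sha256: str) -> bool:
    """Return True only if model_bytes hash to the expected digest.

    The expected digest must itself come from a trusted source (for example a
    signed update manifest); a hash stored next to the model proves nothing.
    """
    return hmac.compare_digest(sha256_hex(model_bytes), expected_sha256.lower())


# Usage with placeholder bytes standing in for a model file.
model_blob = b"placeholder model weights"
trusted_digest = sha256_hex(model_blob)  # in practice published by the vendor
print(verify_model(model_blob, trusted_digest))            # unmodified file
print(verify_model(model_blob + b"\x00", trusted_digest))  # tampered file
```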

A further challenge is scalability and lifecycle management. Organizations deploying edge AI must manage updates, model drift, hardware failures, and performance monitoring across large fleets of distributed devices. Ensuring consistent behavior across thousands of edge nodes, each with different conditions and workloads, requires sophisticated orchestration tools and robust operational strategies.
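A common strategy for updating large fleets is a staged (canary) rollout. A new model goes to a small percentage of devices first, and the rollout expands only if monitoring looks healthy. The sketch below assigns each device to a stable bucket by hashing its ID together with a release name. The device IDs and the release name `detector-v2` are hypothetical.

```python
import hashlib


def rollout_bucket(device_id: str, release: str) -> int:
    """Map a device to a stable bucket in 0..99 for a given release."""
    digest = hashlib.sha256(f"{release}:{device_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def in_rollout(device_id: str, release: str, percent: int) -> bool:
    """True if the device belongs to the first `percent` buckets."""
    return rollout_bucket(device_id, release) < percent


fleet = [f"device-{n:05d}" for n in range(10_000)]  # hypothetical device IDs
RELEASE = "detector-v2"                             # hypothetical release name
for percent in (1, 10, 50, 100):
    updated = sum(in_rollout(d, RELEASE, percent) for d in fleet)
    print(f"{percent:>3}% stage -> {updated} devices")
```

The bucket depends only on the device ID and the release name. As a result, a device included in the 10% stage stays included when the rollout widens, and a new release name reshuffles which devices go first.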

Data quality and real-time constraints pose ongoing difficulties. Edge AI systems must make decisions based on noisy, incomplete, or rapidly changing sensor data. Real-time processing demands low latency and high reliability, which can be difficult to guarantee when devices face fluctuating network conditions or limited power availability. These constraints make it challenging to maintain accuracy and stability in mission-critical applications such as autonomous vehicles, healthcare monitoring, and industrial automation.
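A common way to stabilize decisions on noisy sensor data is to smooth the readings and add hysteresis. That way a single spike does not trigger an action, and the output does not flicker around one threshold. In the sketch below, the smoothing factor `alpha`, the on/off levels, and the temperature readings are illustrative assumptions.

```python
from typing import Optional


class SmoothedTrigger:
    """Exponential moving average plus hysteresis for a noisy sensor.

    alpha, on_level, and off_level are illustrative assumptions.
    """

    def __init__(self, alpha: float, on_level: float, off_level: float) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        if off_level >= on_level:
            raise ValueError("off_level must be below on_level")
        self.alpha = alpha
        self.on_level = on_level
        self.off_level = off_level
        self.ema: Optional[float] = None
        self.active = False

    def update(self, reading: float) -> bool:
        """Smooth the reading, then switch state only when a band edge is crossed."""
        if self.ema is None:
            self.ema = reading
        else:
            self.ema = self.alpha * reading + (1 - self.alpha) * self.ema
        if not self.active and self.ema >= self.on_level:
            self.active = True
        elif self.active and self.ema <= self.off_level:
            self.active = False
        return self.active


# Synthetic temperature readings (deg C): a one-sample spike, then a real rise.
trigger = SmoothedTrigger(alpha=0.5, on_level=80.0, off_level=70.0)
readings = [60, 95, 60, 85, 90, 90, 72, 60, 50]
states = [trigger.update(r) for r in readings]
```

In this stream, the moving average absorbs the one-sample spike to 95, while the sustained rise switches the trigger on. The trigger switches off only after the smoothed value falls below the lower level.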

- Edge devices typically have less computational capacity compared to cloud servers, requiring optimized models.

- AI models need to be compressed or pruned to fit the memory and processing constraints of edge devices.

- Adapting AI solutions to work across a wide range of edge hardware can be challenging.

- Keeping models up-to-date on numerous devices is logistically complex compared to centralized cloud systems.

Future of Edge AI

The proliferation of 5G networks will complement Edge AI, allowing faster communication between devices. Edge AI will be central to the growing IoT ecosystem, enabling smarter and more autonomous devices. The development of AI-specific chips (like neuromorphic processors) will further enhance the capabilities of edge AI devices. Advances in techniques like quantization, pruning, and knowledge distillation will make it easier to run complex models on edge devices.

Edge AI is entering an era in which intelligence moves out of the cloud and directly into devices, sensors, vehicles, robots, and infrastructure. By the end of this decade, edge AI is expected to become the default way machines think, act, and make decisions.

🌐 AI Will Live Everywhere, Not Just in the Cloud

By 2030, intelligence will no longer be confined to centralized data centers. Instead, AI will operate at the source of data, on devices, sensors, and autonomous systems, enabling real‑time decisions without waiting for cloud responses. This shift is driven by the need for ultra‑low latency, privacy-preserving computation, resilience to poor connectivity, and real‑time autonomy. Edge AI becomes the brain of everyday machines.

🏙️ Smart Cities Will Depend on Edge AI

Cities are reaching a point where vital services like transportation, healthcare, and energy systems require instant decision‑making. Cloud‑only systems struggle to keep up, so Edge AI is emerging as a key enabler for smart cities, supporting real‑time traffic control, emergency response, and medical monitoring. Edge AI will power adaptive traffic lights, autonomous public transit, real‑time pollution monitoring, predictive energy grids, and continuous patient monitoring.

🏭 Industry Will Run on Distributed, Real‑Time AI

AI inference workloads are pushing companies toward distributed data center architectures, especially at the edge and in regional hubs. Factories, warehouses, and logistics hubs will rely on edge AI for computer vision on production lines, predictive maintenance, robotics coordination, and safety monitoring. This shift requires new infrastructure, including higher power densities and better connectivity.

🔐 Security Becomes a Priority

As AI moves to the edge, network security must evolve. Edge sites need consistent bandwidth, secure real‑time data pathways, and local processing to reduce cloud exposure. The move to edge AI will change the entire security model, requiring new approaches to protect distributed intelligence.

🤖 Rise of Agentic Edge AI

Agentic AI - AI that can take autonomous actions - will define the next era of edge computing. This means:

- autonomous robots

- self‑managing IoT systems

- vehicles that coordinate with each other

- edge devices that plan, reason, and act

Thus, Edge AI becomes proactive, not reactive.

⚡ Hardware and Models Are Getting Smaller and Faster

The AI landscape is shifting because models are becoming leaner and more efficient, enabling AI to run everywhere, from phones to microcontrollers. This is where Small Language Models (SLMs) and efficient vision models become crucial. The result: AI becomes ubiquitous, not just powerful.

🧠 Edge AI Will Power Autonomous Everything

The future of Edge AI includes autonomous everything: drones, industrial systems, retail, energy management, etc. Edge AI becomes the backbone of real‑time autonomy in a number of key industries.

🧩 Edge Storage Becomes Critical

Smart cities and IoT systems will require local storage to support fast AI decisions. Edge storage becomes the backbone of real‑time analytics and resilience.

Links

- ibm.com/think/topics/edge-ai

- flexential.com/resources/blog/beginners-guide-ai-edge-computing

- redhat.com/en/topics/edge-computing/what-is-edge-ai

- scalecomputing.com/resources/what-is-edge-ai-how-does-it-work

- blogs.nvidia.com/blog/what-is-edge-ai

- cisco.com/site/us/en/learn/topics/artificial-intelligence/what-is-edge-ai

- docs.edgeimpulse.com/knowledge/courses/edge-ai-fundamentals/intro-to-edge-ai

- fastly.com/learning/serverless/what-is-edge-ai

- n-ix.com/on-device-ai/