Explainable AI (XAI)

Have you ever wanted an explanation from AI? That's the essence of XAI.

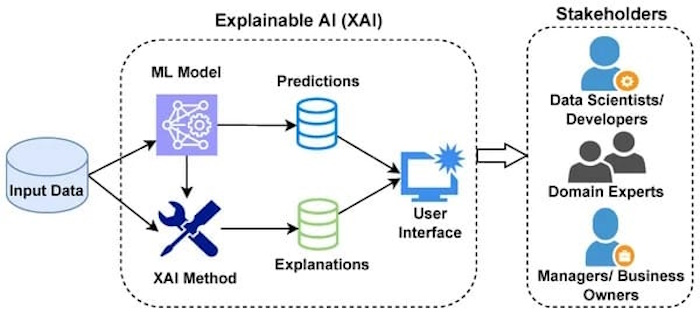

Explainable AI (XAI) is a set of techniques, principles, and processes that enable AI developers and users to comprehend AI models and their decisions. XAI refers to AI methods that make the decision-making processes of machine learning models transparent so that humans can understand them. As AI becomes more advanced, it's crucial to understand how algorithms arrive at a result.

XAI aims to address the "black box" problem in AI, where complex models, such as deep neural networks, provide little insight into how they operate. It aims to explain why an AI model behaves the way it does when it reaches certain decisions.

The main focus of XAI is to make the reasoning behind AI algorithms' decisions or predictions more understandable and transparent. XAI seeks to explain the data used to train the model, the predictions made, and the usage of the algorithms. It essentially requires drilling into the model to extract the reasons behind its behavior.

A closely related concept is Interpretable AI. Although the two terms are often used interchangeably, Explainable AI and Interpretable AI have distinct meanings. XAI explains the AI decision-making process in an understandable way, while interpretable AI refers to how predictably a model's outputs follow from its inputs, and is thus used to understand an AI model's inner workings.
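To make the idea of an interpretable model concrete, here is a minimal sketch of a self-interpretable model, assuming a hypothetical linear credit-scoring rule. The feature names, weights, and threshold are illustrative only. Because the score is a plain weighted sum, each feature's contribution can be read directly, so the output is predictable from the inputs without any extra explanation step.

```python
# Hypothetical weights for an illustrative linear scoring model (not a real system).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0
THRESHOLD = 0.0


def score(applicant: dict[str, float]) -> float:
    """Return the linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant: dict[str, float]) -> dict[str, float]:
    """Each feature's contribution to the score is simply weight * value."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


if __name__ == "__main__":
    applicant = {"income_k": 50.0, "debt_ratio": 0.4, "years_employed": 3.0}
    s = score(applicant)
    print(f"score = {s:.2f}, approved = {s >= THRESHOLD}")
    for name, contribution in explain(applicant).items():
        print(f"  {name}: {contribution:+.2f}")
```

The contributions plus the bias add up exactly to the score, which is what lets a reader trace the decision by hand. Deep networks lack this property, which is why they need the post-hoc techniques described later.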

Key Objectives of XAI

A primary objective of XAI is to build trust between humans and AI systems. When users understand why a model made a particular prediction, such as approving a loan or flagging a medical risk, they are more likely to rely on it. Trust is especially important in areas where decisions carry legal, ethical, or safety implications. XAI provides clarity about the factors influencing a model's output, helping users feel confident that the system is behaving fairly and consistently.

Another major goal is accountability. As AI becomes embedded in critical processes, organizations must be able to explain and justify decisions to regulators, auditors, and affected individuals. XAI supports this by revealing how data, features, and algorithms contribute to outcomes. This transparency helps identify errors, biases, or unintended consequences, making it easier to correct models and ensure they comply with emerging regulations around fairness, transparency, and responsible AI use.

XAI aims to improve usability and human-AI collaboration. When explanations are clear, developers can debug models more effectively, decision-makers can interpret outputs with greater confidence, and end-users can understand how to act on AI recommendations. This makes AI systems more practical and reliable in real-world settings. XAI enhances model evaluation by helping teams understand accuracy, fairness, and potential biases.

A further objective is supporting innovation and continuous improvement. By making model behavior visible, XAI helps researchers and engineers refine algorithms, detect weaknesses, and design better systems. Transparent models encourage experimentation because teams can see how changes affect outcomes. This accelerates progress while reducing the risk of deploying flawed or opaque systems that could cause harm or erode public trust.

XAI also seeks to ensure compliance with ethical and regulatory standards. As governments introduce rules requiring explainability, organizations need AI systems that can provide clear, auditable reasoning. XAI frameworks help meet these requirements by documenting how models work, what data they use, and how decisions are generated, supporting responsible and legally compliant AI adoption.

- Transparency: Enable users to understand how AI systems operate.

- Accountability: Provide reasoning for AI decisions to ensure ethical compliance.

- Trust: Build confidence among users by clarifying how decisions are made.

- Fairness: Identify and mitigate bias in AI models.

- Compliance: Support adherence to regulations by offering explanations.

Why XAI Matters

XAI is important because it makes AI systems understandable, trustworthy, and accountable, especially in high-stakes areas like healthcare, finance, and law. As AI becomes more complex, humans need clear insight into why a model made a decision, not just what the decision was. This transparency is essential for safety, fairness, and responsible deployment.

🌟 Builds Trust Between Humans and AI

Modern AI models like deep learning systems operate as "black boxes," making decisions that even their creators can't fully trace. This lack of transparency creates problems in fields where decisions have real-world consequences. XAI provides explanations that help users understand how and why a model reached a conclusion, increasing confidence in its outputs.

⚖️ Supports Accountability and Ethical Use

XAI helps organizations characterize model accuracy, fairness, transparency, and potential biases. This is crucial when AI decisions affect people's lives, as with loan approvals, medical diagnoses, hiring recommendations, or legal risk assessments. Without explainability, it's impossible to justify decisions to regulators, auditors, or the individuals affected.

🛠️ Helps Developers Debug and Improve Models

XAI reveals which features or patterns the model relies on. This visibility allows engineers to detect errors, identify bias, improve model performance, and understand model weaknesses. XAI extends interpretability to otherwise opaque models and helps humans understand the reasons behind the models' decisions.
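One common way to reveal which features a model relies on is permutation feature importance: shuffle one feature's column, re-score the model, and measure how much accuracy drops. The sketch below assumes a hypothetical black-box `model` function and a small synthetic dataset. It is a standard-library illustration of the idea, not a production implementation (scikit-learn, for example, ships `sklearn.inspection.permutation_importance`).

```python
import random


def model(row: list[float]) -> int:
    """Hypothetical black box: predicts 1 when feature 0 exceeds 0.5.
    Feature 1 is ignored, so its importance should come out as zero."""
    return 1 if row[0] > 0.5 else 0


def accuracy(rows: list[list[float]], labels: list[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(rows, labels, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild every row with column j replaced by its shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    rng = random.Random(42)
    rows = [[rng.random(), rng.random()] for _ in range(200)]
    labels = [model(r) for r in rows]  # labels match the model exactly
    print(permutation_importance(rows, labels))
```

A large drop for feature 0 and no drop for feature 1 tells an engineer what the model actually uses, which is the kind of visibility the paragraph above describes.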

🏥 Enables Safe Use in High-Stakes Domains

In healthcare, finance, transportation, and public services, opaque AI systems can cause harm if they behave unpredictably. XAI helps ensure that clinicians understand why an AI flagged a medical risk, that banks can justify credit decisions, and that autonomous systems can be audited for safety. This is why researchers describe XAI as an urgent need for bridging the gap between AI complexity and societal trust.

📜 Supports Regulatory Compliance

Governments and industry standards increasingly require explainability. XAI helps organizations meet requirements around fairness, transparency, non-discrimination, and auditability. As AI becomes more embedded in public life, explainability becomes a legal and ethical necessity.

XAI & AI Ethics

XAI and AI ethics are deeply interconnected because explainability is one of the core mechanisms that makes ethical AI possible. Ethical principles like fairness, accountability, transparency, and human oversight cannot be meaningfully enforced unless AI systems can be understood and evaluated. XAI provides the visibility needed to detect bias, justify decisions, and ensure responsible use.

🧠 XAI as the Foundation of Ethical AI

Ethical AI frameworks emphasize transparency and accountability as essential safeguards. XAI can be viewed as a tool for ethical governance of AI, because opaque, black-box systems make it impossible to evaluate whether decisions are fair or harmful. Without explanations, organizations cannot verify whether an AI system discriminates, violates rights, or behaves unpredictably.

XAI directly supports ethical principles by revealing how models use data, which features influence decisions, and whether the system behaves consistently across different groups. This transparency is essential for detecting bias, ensuring fairness, and preventing discriminatory outcomes.
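As a minimal illustration of checking whether a system behaves consistently across groups, the sketch below computes each group's approval rate and the ratio of the lowest rate to the highest. The decisions and group labels are hypothetical. The 0.8 cut-off is an assumption borrowed from the "four-fifths rule" heuristic in US employment guidance, not a universal standard.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, int]]) -> dict[str, float]:
    """Approval rate per group from (group, decision) pairs, with decision in {0, 1}."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        approved[group] += decision
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any approvals, so the rates are trivially equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical model outputs: group A approved 8 of 10, group B approved 4 of 10.
    decisions = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 4 + [("B", 0)] * 6
    rates = approval_rates(decisions)
    ratio = impact_ratio(rates)
    print(rates, f"ratio={ratio:.2f}", "flag for review" if ratio < 0.8 else "ok")
```

A low ratio does not prove discrimination by itself. It flags where feature-attribution methods should be used to see which inputs drive the gap.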

⚖️ XAI Enables Accountability and Oversight

Ethical AI requires that someone can be held responsible for an AI system's decisions. XAI makes this possible by providing traceable reasoning. Explainability is crucial for building trust and transparency in AI systems, especially as they become integrated into critical sectors like healthcare, finance, and law.

🔍 XAI Helps Identify and Mitigate Ethical Risks

Ethical risks often hide inside complex models. XAI exposes these risks by making model behavior interpretable. Ethical analyses emphasize that XAI is frequently invoked as a way to address fairness, safety, and human-centered design concerns in AI systems.

🧩 XAI Supports Human-Centered Design

AI ethics stresses that humans must remain in control of AI systems. XAI contributes to this by making AI decisions understandable to non-experts. When explanations are clear, users can question outputs, override decisions, or provide feedback. This aligns with ethical principles of autonomy, dignity, and informed consent. Human-centered XAI research designs explanations that are usable, meaningful, and aligned with human values.

📜 XAI Helps Meet Ethical and Legal Requirements

Many emerging AI regulations require explainability for high-risk systems. Ethical AI cannot be separated from compliance, and XAI provides the mechanisms needed to satisfy transparency, auditability, and fairness requirements.

XAI Techniques

- Feature Attribution: Identifies which features of the input most influenced the output. For example: SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-Agnostic Explanations).

- Model Simplification: Creates simpler models to approximate complex ones, for example a decision tree trained to mimic a neural network's predictions (often called a surrogate model).

- Visualization Tools: Heatmaps and attention maps show regions of importance in visual models.

- Counterfactual Explanations: Shows how changing inputs could lead to different outputs.

- Self-interpretable models: These models are themselves the explanations and can be directly read and interpreted by a human.

- Post-hoc explanations: These are techniques applied after the model has been trained to understand its behavior.
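Feature attribution methods such as SHAP build on Shapley values from cooperative game theory. Each feature's attribution is its marginal contribution to the output, averaged over every coalition S of the other features with weight |S|!(n-|S|-1)!/n!. The sketch below computes exact Shapley values by brute force for a hypothetical three-feature model. It assumes "absent" features are replaced by baseline values, which is one common convention. It does not reproduce the SHAP library's estimators; exact enumeration needs on the order of 2^n model calls, which is why practical tools approximate.

```python
from itertools import combinations
from math import factorial


def model(x: list[float]) -> float:
    """Hypothetical black box with an interaction between features 1 and 2."""
    return 2.0 * x[0] + x[1] * x[2]


def value(coalition: set[int], x: list[float], baseline: list[float]) -> float:
    """Model output when features outside the coalition are set to baseline values."""
    z = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(x: list[float], baseline: list[float]) -> list[float]:
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}, x, baseline) - value(s, x, baseline))
    return phi


if __name__ == "__main__":
    x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
    phi = shapley_values(x, baseline)
    print(phi)
    # Efficiency property: the attributions sum to f(x) - f(baseline).
    print(sum(phi), model(x) - model(baseline))
```

In this toy model, feature 0 receives its purely additive effect, while the interaction term x1 * x2 is split evenly between features 1 and 2. The even split is exactly the kind of design choice a reader of SHAP plots should be aware of.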

"By far, the greatest danger of Artificial Intelligence is that people conclude too early that they understand it."

Applications of XAI

XAI is used in many industries, including healthcare, finance, and law:

- Autonomous Driving: Understanding AI decisions is critical for user safety. If a driver can understand how and why the vehicle makes its decisions, they will better understand what scenarios it can or cannot handle.

- Defense: Ensuring accountability in AI-driven military systems. DARPA is developing XAI in its third wave of AI systems.

- Healthcare: Interpreting diagnostic models and explaining predictions of patient outcomes. Facilitates shared decision-making between medical professionals and patients.

- Finance: Clarifying credit approval processes and fraud detection systems. Helps meet regulatory requirements and equips analysts with the information needed to audit high-risk decisions.

- Legal: Auditing AI-based sentencing recommendations.
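For credit decisions, a counterfactual explanation answers the question "what would have to change for this applicant to be approved?" The sketch below searches one feature at a time in fixed steps against a hypothetical scoring model. The model, step sizes, and feature names are illustrative assumptions. Real counterfactual methods also optimize for plausibility and for minimal change across several features at once.

```python
def approve(applicant: dict[str, float]) -> bool:
    """Hypothetical credit model: approve when the linear score is positive."""
    score = 0.04 * applicant["income_k"] - 3.0 * applicant["debt_ratio"] - 1.0
    return score > 0


def single_feature_counterfactuals(applicant, steps, max_steps=100):
    """For each feature, find the smallest multiple of its step that flips a denial.

    `steps` maps feature name -> signed step size (the allowed direction of change).
    Returns {feature: new_value} for every feature that can flip the outcome alone.
    """
    results = {}
    for name, step in steps.items():
        candidate = dict(applicant)
        for k in range(1, max_steps + 1):
            candidate[name] = applicant[name] + k * step
            if approve(candidate):
                results[name] = round(candidate[name], 4)
                break
    return results


if __name__ == "__main__":
    applicant = {"income_k": 40.0, "debt_ratio": 0.55}
    print("approved:", approve(applicant))
    # Income may rise in steps of 1k; the debt ratio may fall in steps of 0.1.
    print(single_feature_counterfactuals(applicant, {"income_k": 1.0, "debt_ratio": -0.1}))
```

An output such as "approved if income were 67k, or if the debt ratio were 0.15" gives the applicant something actionable, which is why counterfactuals suit regulated settings like credit.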

Benefits of XAI

XAI provides more accountability and transparency in AI systems.

XAI can help developers ensure that systems work as expected, meet regulatory standards, and allow those affected by a decision to understand, challenge, or change the outcome. It also improves the user experience by helping end-users trust that the AI is making good decisions on their behalf. Here are some additional benefits:

- Builds trust: Individuals might be reluctant to trust an AI-based system if they can't tell how it reaches a conclusion. XAI is designed to give end users understandable explanations of the system's decisions.

- Improves the overall system: With added transparency, developers can more easily identify and fix issues.

- Identifies cyberattacks: Adversarial machine learning attacks attempt to fool or mislead a model into making incorrect decisions using maliciously designed data inputs. Because an XAI system exposes the reasoning behind its decisions, an adversarial attack against it may be revealed by unusual or implausible explanations.

- Safeguards against bias: XAI aims to explain attributes and decision processes in machine learning algorithms. This helps identify biases that can lead to poor outcomes, whether they stem from training data quality or from developers' own biases.

Challenges of XAI

XAI faces several deep challenges that limit how effective, reliable, and widely adopted it can be. These challenges come directly from the complexity of modern AI systems and the difficulty of translating mathematical reasoning into human-understandable explanations. Research highlights that while XAI is essential for trust and accountability, it still struggles with technical, practical, and ethical limitations.

Complexity of Modern Models

One of the biggest challenges is that today's AI systems are extraordinarily complex. MDPI notes that these models operate as "black boxes," making it difficult to trace how inputs lead to outputs. Even when explanations are generated, they often oversimplify the underlying reasoning. This creates a tension: the more powerful the model, the harder it is to explain without losing accuracy or nuance.

Lack of Standardization

XAI suffers from inconsistent methods and no universal standards. IEEE research emphasizes that the field lacks a unified framework for what counts as a "good" explanation. Different stakeholders need different types of explanations, but current tools rarely satisfy all groups. This makes it difficult to evaluate or compare XAI systems across industries.

Trade-off Between Accuracy and Interpretability

A recurring challenge is the performance-interpretability trade-off. Simpler models (like decision trees) are easier to explain but are often less accurate on complex tasks. More advanced models (like deep learning) are highly accurate but difficult to interpret. This is a core limitation in XAI research. Organizations often must choose between transparency and performance, which is especially problematic in high-stakes domains.

Risk of Misleading or Incomplete Explanations

Many XAI techniques generate post-hoc explanations, interpretations created after the model has already made a decision. These explanations can be approximate, incomplete, or even misleading. Post-hoc methods may fail to reflect the true internal logic of the model. This creates a false sense of transparency, which can be more dangerous than no explanation at all.
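Because a post-hoc explanation is only an approximation, it helps to measure how faithfully it mimics the model. The sketch below fits the simplest possible surrogate, a one-split decision stump, to a hypothetical black box, then reports fidelity: the fraction of held-out inputs on which the surrogate and the model agree. The black-box function and the random data are assumptions for illustration. A fidelity well below 1.0 is the warning sign that the simple explanation does not reflect the model's real logic.

```python
import random


def black_box(x: tuple[float, float]) -> int:
    """Hypothetical opaque model with a diagonal decision boundary."""
    return 1 if x[0] + x[1] > 1.0 else 0


def stump_predict(stump: tuple, p: tuple[float, float]) -> int:
    """Apply a stump (agreement, feature, threshold, label_above) to one point."""
    _, feature, threshold, above = stump
    return above if p[feature] > threshold else 1 - above


def fit_stump(points, labels):
    """Exhaustively search single-feature splits; return the best-agreeing stump."""
    best = (0.0, 0, 0.0, 1)
    for feature in (0, 1):
        for threshold in sorted({p[feature] for p in points}):
            for above in (0, 1):
                candidate = (0.0, feature, threshold, above)
                hits = sum(stump_predict(candidate, p) == y for p, y in zip(points, labels))
                agreement = hits / len(labels)
                if agreement > best[0]:
                    best = (agreement, feature, threshold, above)
    return best


def fidelity(stump, points) -> float:
    """Fraction of points where the surrogate reproduces the black box's output."""
    return sum(stump_predict(stump, p) == black_box(p) for p in points) / len(points)


if __name__ == "__main__":
    rng = random.Random(0)
    train = [(rng.random(), rng.random()) for _ in range(300)]
    held_out = [(rng.random(), rng.random()) for _ in range(300)]
    stump = fit_stump(train, [black_box(p) for p in train])
    _, feature, threshold, above = stump
    print(f"surrogate: predict {above} when x{feature} > {threshold:.2f}")
    print(f"held-out fidelity: {fidelity(stump, held_out):.2f}")
```

The stump produces a tidy, human-readable rule, yet it disagrees with the model on roughly a quarter of inputs here. Reporting fidelity alongside a surrogate explanation guards against the false sense of transparency the paragraph warns about.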

Difficulty Evaluating Explanations

Evaluating whether an explanation is correct, useful, or fair is itself a major challenge. ResearchGate's review notes that there is no consensus on how to measure explanation quality. Should explanations be judged by accuracy, simplicity, user satisfaction, or regulatory compliance? Different metrics lead to different conclusions, making evaluation inconsistent and subjective.
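One family of proposed metrics checks faithfulness: if an explanation says a feature matters, removing that feature should change the output noticeably. The sketch below resets the top-ranked feature to a baseline value and compares the resulting output drop with the drop from resetting the lowest-ranked feature. The model, the two attribution vectors, and the zero baseline are hypothetical. This is only one of the competing criteria mentioned above, not a consensus measure.

```python
def model(x: list[float]) -> float:
    """Hypothetical model: feature 0 dominates and feature 2 is ignored."""
    return 5.0 * x[0] + 1.0 * x[1] + 0.0 * x[2]


def deletion_drop(x: list[float], baseline: list[float], feature: int) -> float:
    """Change in output when a single feature is reset to its baseline value."""
    z = list(x)
    z[feature] = baseline[feature]
    return model(x) - model(z)


def faithfulness_gap(x, baseline, attributions) -> float:
    """Drop from deleting the top-ranked feature minus drop from the bottom-ranked one.
    A faithful explanation should produce a clearly positive gap."""
    ranked = sorted(range(len(x)), key=lambda i: attributions[i], reverse=True)
    return deletion_drop(x, baseline, ranked[0]) - deletion_drop(x, baseline, ranked[-1])


if __name__ == "__main__":
    x, baseline = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
    good = [5.0, 1.0, 0.0]  # matches what the model actually relies on
    bad = [0.0, 1.0, 5.0]   # claims the ignored feature matters most
    print(faithfulness_gap(x, baseline, good), faithfulness_gap(x, baseline, bad))
```

A deletion test like this says nothing about whether an explanation is understandable or useful to a person, which is why it can disagree with user-satisfaction or compliance-based evaluations.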

Human Factors and Cognitive Limits

Even when explanations are technically correct, humans may not understand them. Users vary widely in expertise, cognitive load, and expectations. Explanations that are too technical overwhelm users; explanations that are too simple fail to provide meaningful insight. This human-machine gap is a persistent challenge highlighted across XAI literature.

Ethical and Security Risks

XAI can unintentionally expose sensitive information about training data or model structure. This creates privacy and security risks. It can also reveal proprietary algorithms, making companies hesitant to adopt fully transparent systems. Ethical concerns arise when explanations expose biases but organizations lack the tools or incentives to fix them.

Links

AI Ethics page.

Bias page.

Data Privacy page.

External links:

- builtin.com/artificial-intelligence/explainable-ai

- techtarget.com/whatis/definition/explainable-AI-XAI

- ibm.com/think/topics/explainable-ai

- insights.sei.cmu.edu/blog/what-is-explainable-ai/

- en.wikipedia.org/wiki/Explainable_artificial_intelligence

- edps.europa.eu/system/files/2023-11/23-11-16_techdispatch_xai_en.pdf

- c3.ai/glossary/machine-learning/explainability/

- paloaltonetworks.com/cyberpedia/explainable-ai

- cloud.google.com/vertex-ai/docs/explainable-ai/overview