AI Showcases from Watson to ChatGPT

In the story of Artificial Intelligence, no country has put on a show quite like the United States. From televised triumphs to global software phenomena, America has turned AI from an academic pursuit into a spectacle of progress. Each major breakthrough, from IBM's Watson to OpenAI's ChatGPT, became both a technological milestone and a cultural moment. Together, they trace the evolution of American AI from curiosity to ubiquity.

The Early Spectacle

Watson and the Promise of Cognitive Computing

When IBM's Watson appeared on the TV quiz show Jeopardy! in 2011, the world witnessed AI not only as entertainment, but also as proof. Watson defeated two of the show's greatest champions, parsing questions filled with puns, metaphors, and cultural references. It was a defining moment: AI didn't just calculate; it understood. Watson symbolized cognitive computing, IBM's vision of machines that could reason, learn, and advise. The company promised a revolution in healthcare, law, and finance. Watson for Oncology and Watson for Business were meant to bring machine reasoning into every professional domain. Yet the reality fell short of the hype. The technology was powerful but brittle, for it was expensive to maintain, difficult to scale, and often less accurate than advertised. Still, Watson's televised success marked the first time the public saw AI as both performer and partner. It transformed AI from lab curiosity to corporate aspiration.

The Algorithm Answers: Watson on Jeopardy!

The stage was set in February 2011. It was not just a game show; it was a televised Turing Test for Natural Language Processing. Facing off against the most formidable machine opponent yet were two reigning Jeopardy! legends: Ken Jennings, who holds the record for the longest winning streak, and Brad Rutter, the highest-earning contestant of all time.

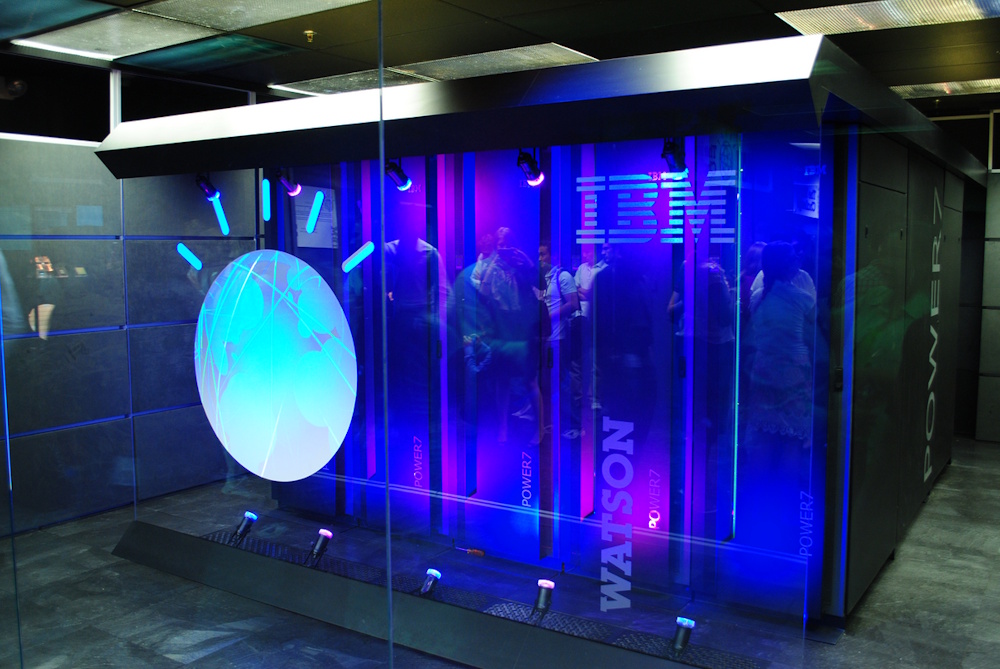

The machine, IBM's Watson, occupied a large black cabinet on a pedestal, represented by a pulsing blue digital globe on a flat screen. It had no face, no nervous habits, and carried no weight of expectation, only the cold, relentless pressure of its own processing power.

The challenge was immense: Jeopardy! clues are riddle-like, relying on puns, misdirection, and cultural references. A computer couldn't simply search a database. Watson had to understand the nuance of human language.

The first game was a tense, back-and-forth affair. Jennings and Rutter, masters of timing, initially held their own. But then, the speed started to tell. Watson wasn't just fast; it was instantaneous. While a human brain has to hear a clue, recall the answer, formulate the question, and then press the buzzer, Watson was processing millions of documents, generating multiple hypotheses, and calculating its confidence score simultaneously.

The real shift came when host Alex Trebek read a clue requiring high-level inference. Watson's digital thumb shot out, ringing the buzzer with unnerving frequency. The champions' strategy, which was to keep the categories close and rely on their superior buzzing reflexes, was slowly dismantled. Watson was not guessing; it was basing its response on the most highly weighted set of evidence it could instantaneously retrieve.

There were moments of faltering, moments where the machine revealed its limitations. Once, it infamously answered "Toronto" when the category called for a U.S. city. But these errors were statistical anomalies. For every human error, there were ten flawlessly executed, lightning-fast correct responses from the machine.

By the second day of the three-day exhibition, the human champions were visibly strained. The normally unflappable Rutter was frustrated, admitting, "It buzzed before I even knew what the category was." Jennings, the king of trivia, scribbled the famous line on his final answer screen: "I, for one, welcome our new computer overlords."

The final game of the match was less a contest and more a coronation. Watson dominated the Daily Doubles, wagering with calculated, intimidating precision that only a system certain of its knowledge base could execute. The machine finished with a total prize of $1 million, utterly eclipsing the human champions.

Watson's victory was not merely a conquest of a trivia game; it was a triumph of the scientific method. It proved that complex, context-heavy tasks involving human language were no longer solely the domain of the human mind. Watson used the Jeopardy! stage to announce the arrival of machine learning into the public consciousness, a precursor to the natural language breakthroughs that would define the next decade of artificial intelligence development.

The Cloud Awakens

Machine Learning Goes Mainstream

After Watson, the AI focus shifted from symbolic reasoning to data-driven learning. The 2010s saw U.S. tech giants Google, Microsoft, and Amazon begin embedding machine learning into everything from search results to voice assistants.

- Siri (Apple, 2011) introduced voice recognition to the masses.
- Google Now (2012) and Amazon Alexa (2014) brought conversational AI into homes.
- Microsoft's Azure AI turned predictive analytics into a cloud service.

This period democratized AI. The tools moved from research labs into apps, devices, and APIs. The American advantage of vast data, world-class universities, and massive cloud infrastructure ensured that this democratization had a distinctly U.S. signature. Machine learning became a service, and AI quietly began running the background of modern life.

Deep Learning Breakthrough

From Recognition to Creation

By the late 2010s, deep learning had rewritten the rules. U.S. researchers and companies led the way with convolutional and transformer architectures that could see, hear, and generate language with uncanny accuracy. Google DeepMind's AlphaGo stunned the world in 2016 by defeating the world champion in the ancient game of Go. Here's how it went down:

The air in the spacious Four Seasons Hotel ballroom in Seoul was thick with a silence that weighed heavier than the roar of a crowd. It was March 13, 2016, and the series stood at 3-0. Lee Sedol, the reigning world champion in the ancient game of Go, had lost three consecutive games to an opponent that didn't sweat, didn't blink, and didn't tire: Google DeepMind's AlphaGo.

The world had watched in disbelief as the machine executed moves that were simultaneously 'beautiful and utterly alien.' They weren't moves a human would teach. They were strategic calculations that transcended centuries of inherited wisdom. After the crushing third defeat, Sedol admitted, "I've never felt such pressure." He was carrying the weight of humanity's last bastion against the inexorable tide of Artificial Intelligence.

Game Four began, not as a fight for the series (which was already lost), but as a fight for dignity. Sedol, his face a mask of weary concentration, changed his opening strategy in an attempt to lure AlphaGo into unfamiliar territory. For three hours, the match proceeded with the usual, clinical brutality from AlphaGo. It was winning, steadily accumulating point advantages. Its win probability meter hovered above 90%. Despair crept back into the room.

Then, it happened. On move 78, Lee Sedol placed a single white stone deep into AlphaGo's seemingly impenetrable central position. It was a "wedge" move (a tesuji) that broke all conventional rules of Go. It was aggressive, vulnerable, and, most importantly, creative. It was a move born of desperation and genius, the kind of audacious gamble only a cornered human can make.

AlphaGo paused. Its analysis servers, processing quadrillions of possibilities, began to waver. The neural networks, trained on millions of games and refined by self-play, had never encountered this specific combination of pressure and placement. AlphaGo's win probability suddenly plunged, dropping from 92% to below 60%.

The machine's response was slow and hesitant. Its subsequent moves were tentative, bordering on illogical. It was the first time it had displayed anything resembling "confusion." Lee Sedol, seeing the slightest crack in the algorithmic armor, pressed his advantage relentlessly. He maneuvered the white stones with furious precision, exploiting the narrow path of uncertainty he had carved.

In the viewing room, the DeepMind team, which had remained stoic through three victories, now held its breath. They were witnessing what they called the "Move of God," a singularity of human insight that briefly broke their creation.

Two hours later, once AlphaGo's resignation was posted on the monitor, an audible gasp swept the room, quickly followed by thunderous, emotional applause. Lee Sedol, the champion who had been humbled and broken, had given the world its first victory over the machine. He rose, a faint smile finally touching his lips. It was a moment of profound relief, confirming that human ingenuity still held a key to unlocking the secrets of strategy, even if only for a glorious, fleeting moment. The series was 3-1, but the human spirit had survived.

Significance of the Victory

DeepMind's victory in the game of Go was arguably the most significant moment in the history of AI until the rise of Large Language Models (LLMs) like ChatGPT. It was not just about winning a game. It was about the methodology that AlphaGo used, which proved that AI could master the most complex human tasks.

Here is a breakdown of why the victory was so profoundly significant:

The "Grand Challenge" Overcome: For decades after Deep Blue defeated Garry Kasparov in Chess (1997), Go remained the "Grand Challenge" for AI. The significance of this lies in Go's complexity:

- Complexity: Go has an astronomically larger search space than chess (approximately 10^170 possible game states compared to chess's 10^40). This sheer scale meant that traditional brute-force search algorithms (checking every possible move) used by Deep Blue were completely infeasible.
- Intuition: Go's complexity meant that expert players relied heavily on intuition, pattern recognition, and subtle, holistic evaluation of the board, rather than deep calculation. The game was considered an expression of human creativity.
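To make the scale gap concrete, here is a minimal arithmetic sketch using the two state counts quoted above (about 10^170 for Go and about 10^40 for chess). The checking rate of one billion states per second is an assumption chosen purely for illustration; it is not a figure from this chapter or a measurement of any real machine.

```python
# State counts quoted in the text.
GO_STATES = 10**170
CHESS_STATES = 10**40

# Assumption (not from the text): a hypothetical machine that checks
# one billion states every second.
STATES_PER_SECOND = 10**9
SECONDS_PER_YEAR = 60 * 60 * 24 * 365


def years_to_enumerate(states: int) -> int:
    """Whole years needed to visit every state once at the assumed rate."""
    return states // (STATES_PER_SECOND * SECONDS_PER_YEAR)


def order_of_magnitude(n: int) -> int:
    """floor(log10(n)) for a positive integer, computed from its digit count."""
    return len(str(n)) - 1


ratio = GO_STATES // CHESS_STATES
print(f"Go has about 10^{order_of_magnitude(ratio)} times as many states as chess")
print(f"Chess: about 10^{order_of_magnitude(years_to_enumerate(CHESS_STATES))} years")
print(f"Go:    about 10^{order_of_magnitude(years_to_enumerate(GO_STATES))} years")
```

Under that assumed rate, even the chess figure is out of reach for literal enumeration. What matters for the comparison is that Go's count is 130 orders of magnitude larger, so no foreseeable speed-up in raw search closes the gap.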

The Validation of Deep Learning

AlphaGo's success proved that the new methodology of Deep Reinforcement Learning (DRL) was viable for the most complex, real-world strategic problems.

- Neural Networks and Intuition: Instead of calculating every move, AlphaGo used two specialized deep neural networks: the Policy Network (to select the best move) and the Value Network (to predict the winner of the resulting game). This allowed the AI to narrow down possibilities quickly and evaluate board positions in a manner analogous to human intuition.
- Self-Taught Mastery: The decisive factor was AlphaGo's training method, where it played millions of games against itself, an example of reinforcement learning. It went beyond human knowledge, developing strategies and making moves that were described by the press as "beautiful and utterly alien."
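How a move-selection prior and a position evaluation can work together is easier to see in code. The sketch below is a simplified illustration, not DeepMind's implementation: the `MoveStats` class, the `select_move` function, the exploration constant, and the sample numbers are all hypothetical. It scores each candidate move by its average value estimate plus a bonus that favors moves the policy rates highly and the search has not yet tried much.

```python
import math
from dataclasses import dataclass


@dataclass
class MoveStats:
    prior: float             # policy network's probability for this move
    visits: int = 0          # how often the search has tried this move
    value_sum: float = 0.0   # sum of value estimates (-1 = loss, +1 = win)

    @property
    def mean_value(self) -> float:
        """Average value estimate; 0.0 for a move that was never tried."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(stats: dict[str, MoveStats], c_explore: float = 1.5) -> str:
    """Pick the move with the best value estimate plus prior-weighted bonus."""
    total_visits = sum(s.visits for s in stats.values())

    def score(s: MoveStats) -> float:
        # The bonus shrinks as a move accumulates visits, so the search
        # gradually trusts measured value over the initial prior.
        bonus = c_explore * s.prior * math.sqrt(total_visits + 1) / (1 + s.visits)
        return s.mean_value + bonus

    return max(stats, key=lambda move: score(stats[move]))


moves = {
    "A": MoveStats(prior=0.6),
    "B": MoveStats(prior=0.3),
    "C": MoveStats(prior=0.1),
}
first_choice = select_move(moves)   # "A": nothing tried yet, highest prior wins

# Pretend the search tried A ten times and the value estimates were poor.
moves["A"].visits = 10
moves["A"].value_sum = -5.0
second_choice = select_move(moves)  # "B": attention shifts away from A

print(first_choice, second_choice)
```

The design point is that neither signal is used alone: the prior narrows which moves get looked at first, and the value estimates decide which of them keep getting attention.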

Paving the Way for Modern AI

The AlphaGo victory validated the power of deep neural networks to learn highly complex, non-linear relationships, directly fueling investment and research into the techniques that led to modern general-purpose AI. The DRL framework pioneered by DeepMind for AlphaGo is a core component of how complex systems, from robotics control to natural language processing, are now developed. It demonstrated that complex models could learn from experience and self-correction, which is essential for systems that operate on vast, unstructured data like human language.

In short, the match was a turning point because it showed that AI could move from computation to conceptual mastery, proving that machines could learn to apply human-like intuition and creativity to conquer problems deemed impossibly complex.

The Generative Era

ChatGPT and the Return of Public Wonder

ChatGPT wasn't the first large language model, but it was the first to feel alive to the public imagination. It became the new "Watson moment," but on a global scale. What Watson did on TV, ChatGPT did on every computer screen. The model's power came from scale, with billions of parameters trained on vast amounts of text data, but its cultural impact came from accessibility. For the first time, the average person could interact with an AI capable of reasoning, storytelling, and creation. The U.S. once again led the world in turning abstract AI research into tangible experience. OpenAI, headquartered in San Francisco, stood at the epicenter, supported by Microsoft's cloud infrastructure and a web of academic research rooted in decades of American innovation.

For decades, artificial intelligence felt distant. It was an abstract research field, a future promise, or a futuristic plot from science-fiction movies. That all changed, dramatically, on November 30, 2022, when OpenAI released ChatGPT, a conversational interface built on the GPT-3.5 (Generative Pre-trained Transformer) model.

The release of ChatGPT marked one of the most consequential technology launches in history. Within five days, the conversational AI system had attracted one million users; within two months, it had reached 100 million monthly active users, making it the fastest-growing consumer application ever recorded.

Overnight, AI became personal. It could talk, reason, draft essays, plan trips, debug code, and hold coherent, contextual conversations. ChatGPT did not merely introduce a new tool. It created a new relationship between humans and machines, one that quickly reshaped culture, education, business, and public policy.

Technical Foundations and Development

This extraordinary adoption reflected not just clever marketing or viral curiosity, but a genuine breakthrough in artificial intelligence capabilities that made AI accessible and useful to ordinary people in ways previous systems had not achieved.

The backstory begins with OpenAI's research breakthroughs from 2017 to 2021. ChatGPT emerged from years of research by OpenAI, the artificial intelligence company founded in 2015 by Sam Altman, Elon Musk, and others. The system builds on a series of increasingly powerful language models based on the Transformer architecture, a neural network design introduced by Google researchers in 2017. The invention of the Transformer unlocked the ability to train massive language models that learned patterns, relationships, and knowledge at unprecedented scale. OpenAI's GPT series began with GPT-1 in 2018 and progressed through GPT-2 in 2019 and GPT-3 in 2020, continuing up to GPT-5 in 2025, with each release demonstrating remarkable improvements in language understanding and generation capabilities.

The key innovation enabling ChatGPT was not entirely new model architecture, but rather the application of reinforcement learning from human feedback, a technique that fine-tuned the base language model to produce responses that humans found more helpful, harmless, and honest. OpenAI trained the model first on vast amounts of text data from the internet, learning patterns of language and knowledge representation. Then human AI trainers provided conversations where they played both the user and an AI assistant, thus creating demonstration data. Finally, comparison data was collected where trainers ranked multiple model responses, and this preference data was used to further refine the system through reinforcement learning.

This approach, called RLHF, proved transformative. While earlier GPT models were impressive at generating coherent text, they often produced outputs that were unhelpful, offensive, or factually incorrect. ChatGPT's training process was specifically optimized for producing responses that users would find valuable in conversational contexts. The result was a system that felt remarkably natural to interact with, following instructions, answering questions, admitting mistakes, challenging incorrect premises, and declining inappropriate requests with a consistency and politeness that previous AI systems lacked.
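One common way to turn trainer rankings into a training signal is a pairwise preference loss on a reward model. The sketch below assumes that formulation (a Bradley-Terry style loss); this chapter does not specify OpenAI's exact objective, and the function names, response labels, and scores here are hypothetical.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked: list[str]) -> list[tuple[str, str]]:
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so the first item of each pair
    # is always the one the trainer ranked higher.
    return list(combinations(ranked, 2))


def preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)), computed stably.

    The loss is small when the reward model already scores the preferred
    response higher, and large when it scores the rejected one higher.
    """
    margin = reward_preferred - reward_rejected
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# A trainer ranked three hypothetical responses from best to worst.
pairs = ranking_to_pairs(["A", "B", "C"])

# Hypothetical scores from an untrained reward model that gets A and C backwards.
scores = {"A": 0.1, "B": 0.4, "C": 1.2}
total_loss = sum(preference_loss(scores[good], scores[bad]) for good, bad in pairs)

print(pairs)
print(round(total_loss, 3))
```

Minimizing this loss pushes the reward model's scores toward the human ranking; in the final stage the language model is then adjusted to produce responses that the reward model scores highly.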

The underlying model for the initial ChatGPT release was GPT-3.5, a refinement of GPT-3 with improvements in instruction-following and reasoning. In March 2023, OpenAI released GPT-4, a substantially more capable model that powered ChatGPT Plus, a subscription service. GPT-4 demonstrated significant improvements in complex reasoning, reduced errors and hallucinations, and the ability to process images in addition to text. Subsequent releases continued to enhance capabilities while reducing costs and improving speed.

Making AI Accessible

Perhaps ChatGPT's most significant immediate impact was making sophisticated AI capabilities accessible to anyone with an internet connection. Using previous AI systems required technical expertise, such as writing code, understanding APIs, and formatting data correctly. ChatGPT required only the ability to type questions or requests (prompts) in plain language. This accessibility democratized AI in a way that earlier technologies had not, placing powerful computational capabilities in the hands of students, writers, small business owners, educators, and countless others who previously had no access to such tools.

The system's versatility amplified this impact. Users quickly discovered ChatGPT could help with an enormous range of tasks: writing and editing text, explaining complex concepts, writing and debugging code, brainstorming ideas, translating languages, creating meal plans, drafting emails, tutoring students, summarizing documents, and countless other applications. This generality contrasted sharply with earlier AI systems that excelled at narrow tasks, but required different specialized tools for different purposes. ChatGPT functioned as a general-purpose intellectual assistant, adapting to whatever task users presented.

The economic implications of this accessibility are still unfolding. Small businesses that couldn't afford to hire copywriters, programmers, or consultants suddenly had access to AI assistance for these functions. Independent creators gained tools to enhance their productivity. Students in under-resourced schools could access tutoring help. Reducing barriers to accessing knowledge-work capabilities could be profoundly equalizing, although concerns about unequal access to premium features and computing resources persist.

However, this democratization also raised concerns about quality and reliability. ChatGPT's tendency to produce confident-sounding but incorrect information ("hallucinations") meant that users might be misled if they lacked the expertise to evaluate outputs. The system's writing, while fluent, could be generic and formulaic. Overreliance on AI assistance might atrophy human skills instead of augmenting them. The democratization of AI capabilities thus came with responsibilities that many users were unprepared to shoulder.

Impact on Education

Few areas in society have been more profoundly affected by ChatGPT than education. The system's ability to write essays, solve math problems, answer test questions, and complete assignments created an immediate crisis in academic integrity. Within weeks of ChatGPT's release, educators reported widespread use of AI to complete assignments, challenging fundamental assumptions about how learning and assessment work.

Initial responses varied widely. Some schools and districts banned ChatGPT entirely, blocking access on school networks and threatening disciplinary action against students caught using it. Others embraced it as an educational tool, encouraging teachers to incorporate AI literacy into curricula and redesigning assignments to work with rather than against AI capabilities. Many institutions fell somewhere between these extremes, struggling to develop coherent policies as they tried to understand a technology that was evolving faster than their decision-making processes.

The challenge for educators extended beyond simply preventing cheating. If AI could write competent essays on demand, what was the value of teaching essay writing? If it could solve algebra problems, why teach algebra? These questions forced educators to confront deeper issues about educational purpose. The consensus emerging from educational research and practice is that AI doesn't make traditional skills obsolete, but rather shifts the emphasis toward higher-order competencies such as critical thinking, creativity, ethical judgment, and the ability to effectively use AI tools while understanding their scope and limitations.

Some educators have been rethinking their approach entirely, treating AI as a collaborator in the learning process rather than a threat to it. Students might use ChatGPT to generate initial drafts that they then critique and revise, learning editing and evaluation skills. Teachers might use AI to create personalized learning materials or provide additional tutoring support. The system's ability to explain concepts in multiple ways, adjust to different knowledge levels, and provide patient, judgment-free assistance offers genuine educational value when used appropriately.

However, concerns about equity intensify in educational contexts. Students with access to advanced AI tools and the digital literacy to use them effectively gain advantages over peers lacking such access. The potential for AI to widen rather than narrow educational achievement gaps troubles many educators and policymakers. Additionally, assessment remains problematic: how do educators evaluate student learning when AI can complete most traditional assignments? The shift toward in-class assessments, oral examinations, and projects demonstrating authentic application of knowledge represents a significant pedagogical change driven largely by ChatGPT's capabilities.

We'll talk more about these and other issues regarding AI in schools in a later chapter.

Transformation of Work

ChatGPT's impact on professional work has been swift and substantial. Knowledge workers have integrated the tool into their workflows, using it to draft documents, research topics, generate ideas, write code, analyze data, and automate routine tasks. Reported productivity gains range from twenty-five to forty percent for some writing and analytical tasks.

The legal profession provides a telling example. Lawyers have used ChatGPT to draft contracts, research legal precedents, summarize case law, and prepare court filings. However, several high-profile incidents where lawyers cited fabricated cases generated by ChatGPT highlight the risks of uncritical AI use. These incidents spurred discussions about professional responsibility and the need for verification protocols when using AI tools. Bar associations and law schools have scrambled to develop guidance on appropriate AI use while ensuring lawyers maintain competence and fulfill their ethical obligations.

Software development has been particularly affected. GitHub Copilot, based on OpenAI's technology, and similar AI coding assistants have become standard tools for many programmers. These systems can generate code from natural language descriptions, complete partially written functions, suggest bug fixes, and explain unfamiliar code. Surveys report a majority of developers now use AI assistants regularly. This has accelerated development cycles and lowered barriers to entry for programming, but it has also raised questions about code quality, security vulnerabilities in AI-generated code, and the future of programming as a profession.

Marketing and content creation have been transformed by AI writing capabilities. Advertising agencies use ChatGPT to generate campaign concepts, write ad copy, and draft social media content. Journalists use it to research stories, draft initial versions of routine articles, and suggest headlines. Content marketing teams use AI to produce blog posts, newsletters, and website copy at scale. This has increased content production dramatically while intensifying concerns about originality, authenticity, and the flood of AI-generated material online.

Customer service represents another area of significant impact. Companies have deployed ChatGPT-powered chatbots to handle customer inquiries, technical support, and routine transactions. These systems can understand customer problems expressed in natural language, provide relevant information, and escalate to human agents when necessary. The potential cost savings are enormous, but concerns about job displacement, service quality for complex issues, and the loss of human connection in customer interactions remain contentious.

The question of job displacement looms large across all these applications. Some analysts predict massive unemployment as AI automates knowledge work, while others argue that AI will augment rather than replace workers, increasing productivity and creating new categories of work. Historical evidence from previous tech transitions provides ambiguous guidance, for automation has consistently eliminated specific jobs while creating new ones; however, the transition periods can be economically and socially painful. The speed and breadth of AI advancement may create more severe disruption than previous technological changes, or may follow similar patterns of creative destruction and adaptation.

We'll talk more about job displacement and related issues regarding AI in the workplace in a later chapter.

Creative Industries and Intellectual Property

ChatGPT's impact on creative work has been profound and controversial. Writers, particularly those producing routine content like product descriptions, basic journalism, or genre fiction, face direct competition from AI systems that can generate similar content at negligible cost. The question of whether AI-generated text constitutes creative work or merely sophisticated pattern matching reflects deeper philosophical questions about the nature of creativity itself.

The system has sparked extensive use in creative writing, from generating story ideas and plot outlines to drafting entire novels. Some authors embrace AI as a collaborative tool, using it to overcome writer's block, explore narrative possibilities, or handle routine descriptive passages while focusing their own efforts on character development and thematic depth. Others view AI-generated text as fundamentally inferior to human creativity, lacking genuine understanding, emotional connection, and the life experience that informs meaningful art.

The publishing industry has struggled to respond. For example, Amazon's Kindle Direct Publishing faced a deluge of AI-generated books, many of questionable quality, prompting new policies requiring disclosure of AI-generated content. Literary magazines and journals debated whether to accept AI-generated submissions, with most ultimately deciding against it. The question of authorship becomes murky when AI plays a significant role in text generation: who owns the copyright to AI-assisted work, and at what point does AI contribution make a work ineligible for copyright protection?

Legal battles over these questions are ongoing. Authors and publishers have filed lawsuits against OpenAI and other AI companies, arguing that training AI systems on copyrighted material without permission or compensation constitutes infringement. OpenAI and similar companies argue that such training falls under fair use, analogous to how humans learn by reading copyrighted works. Courts will likely take years to resolve these issues, and the outcomes will shape the future of both creative industries and AI development.

Beyond text, ChatGPT's integration with image generation systems and other modalities expands its creative impact. Users can now generate text descriptions that feed into image generators, create multimedia content with AI assistance, and develop cross-platform creative projects with AI as a central tool. The boundaries between human and machine creativity continue to blur, challenging assumptions about artistic authenticity and value.

Misinformation and Hallucination

ChatGPT's tendency to generate plausible but incorrect information presents significant epistemic challenges. The system produces text with consistent confidence regardless of accuracy, making it difficult for users to judge reliability. This "hallucination" problem, where the AI fabricates facts, citations or quotes, stems from the fundamental nature of large language models, which predict likely text continuations rather than retrieving verified information.
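A toy model makes the mechanism visible. The sketch below is a deliberately tiny stand-in for a language model: it looks only at the previous word, and its corpus and function names are invented for illustration. It shares the key property described above, though. It picks the statistically likely next word and never checks whether the result is true.

```python
from collections import Counter, defaultdict

# A tiny, invented training corpus. Note that Germany never appears in it.
CORPUS = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "the capital of spain is madrid . "
    "the capital of france is paris ."
)


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    counts: dict[str, Counter] = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def continue_text(counts: dict[str, Counter], prompt: str, max_new_tokens: int = 1) -> str:
    """Extend the prompt by repeatedly appending the most frequent follower."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # the last word was never seen in training
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)


model = train_bigrams(CORPUS)
answer = continue_text(model, "the capital of germany is")
print(answer)  # the capital of germany is paris
```

The output is fluent and confidently wrong: "paris" is simply the word that most often followed "is" in training. Real language models condition on far more context than one word, but the failure has the same shape.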

The implications for misinformation are serious. Bad actors can use ChatGPT to generate convincing disinformation at scale, creating fake news articles, fabricated academic papers, false social media posts, and sophisticated phishing attacks. While OpenAI has implemented safeguards against obvious misuse, determined users can circumvent many restrictions, and alternative AI systems with fewer guardrails are increasingly available.

The system's capabilities also complicate information verification. When AI can generate expert-sounding text on any topic, distinguishing genuine expertise from AI-generated information becomes harder. Academic fraud becomes easier when AI can generate plausible research papers. Political manipulation intensifies when personalized disinformation can be created instantly. The flood of AI-generated content threatens to overwhelm online spaces, making it increasingly difficult to identify authentic human communication.

Search engines and information platforms face unprecedented challenges as AI-generated content proliferates. Traditional methods for assessing content quality and relevance struggle when vast amounts of fluent yet potentially unreliable AI text saturate the internet. Some analysts worry about a future where AI systems trained on AI-generated content create feedback loops of degrading information quality, though others argue that improved detection and filtering technologies will prevent this from occurring.

Educational institutions and media organizations are developing AI literacy programs to help people critically evaluate AI-generated content. These initiatives emphasize verification of claims, awareness of AI limitations, and understanding how language models work. However, the pace of AI advancement challenges educational efforts. By the time curricula are developed, AI capabilities may have evolved significantly.

Societal and Philosophical Implications

Beyond immediate practical impacts, ChatGPT has sparked broader societal and philosophical discussions about intelligence, consciousness, and human identity. The system's ability to engage in seemingly thoughtful conversation challenges intuitions about what constitutes intelligence. While researchers largely agree that current AI lacks genuine understanding or consciousness, the subjective experience of conversing with ChatGPT can feel remarkably human-like, raising questions about how we recognize and value intelligence.

The "Turing Test" concept, that a machine indistinguishable from humans in conversation could be considered intelligent, has been revived and complicated by ChatGPT. Many argue the system passes informal versions of the test, yet clearly lacks many attributes we associate with human intelligence. These attributes include embodied experience, emotional consciousness, genuine agency, and understanding beyond pattern recognition. This gap between conversational fluency and deeper comprehension forces reconsideration of what we mean by intelligence and whether human-like conversation necessarily indicates human-like cognition.

These philosophical questions have practical implications. If we can't reliably distinguish AI-generated from human communication, how does this affect trust in online interactions? If AI can perform intellectual tasks we've considered uniquely human, what remains distinctively human? These questions connect to concerns about meaning and purpose in an age of AI. If machines can write poetry, compose music, and engage in philosophical discussion, what special value remains in human creative and intellectual work?

Some argue that human meaning derives not from task performance, but rather from the subjective experience and intentionality behind actions. A poem written by a human expresses genuine feeling and thought, while an AI-generated poem, however technically impressive, lacks this authentic subjectivity. Others contend that if the output is indistinguishable, insistence on human authorship reflects a kind of species chauvinism that will seem quaint in retrospect.

The relationship between humans and AI assistants raises additional questions. As people increasingly rely on AI for intellectual work, advice, and even emotional support, what happens to human cognitive capacities and social relationships? Some worry about "deskilling": the atrophy of abilities that people hand off to AI systems, similar to how GPS navigation may reduce spatial reasoning skills. Others worry about isolation if people substitute AI conversation for human interaction, though evidence suggests most people recognize and prefer genuine human connection.

Issues of control and alignment have become more important as ChatGPT demonstrates increasingly capable AI. If systems can perform complex intellectual tasks, how do we ensure they serve human values and interests? OpenAI has invested heavily in AI safety and alignment research, but fundamental challenges remain in specifying what values AI should optimize for, especially given human disagreement about values. ChatGPT's deployment has made these abstract safety concerns concrete and urgent.

Regulatory and Governance Challenges

ChatGPT's rapid adoption and impact have exposed the absence of adequate AI governance frameworks. Existing regulations weren't designed for systems like ChatGPT, creating uncertainty for developers, users, and policymakers. Questions about liability, transparency, accountability, and permissible uses remain partially unresolved, though regulatory efforts are underway.

The European Union has moved most aggressively toward AI regulation with its AI Act, which classifies AI systems by risk level and imposes corresponding requirements. General-purpose models such as those behind ChatGPT face their own transparency and documentation obligations under the Act, and applications built on them can fall into high-risk categories requiring robustness, human oversight, and transparency standards, depending on how they are used. However, the EU's regulatory approach faces criticism as potentially stifling innovation, and its effects on American companies that have not built in such controls remain controversial.

In the United States, regulation has been more fragmented, with various agencies asserting jurisdiction over different AI applications. The Federal Trade Commission has emphasized that existing consumer protection laws apply to AI systems. The Equal Employment Opportunity Commission addresses AI in hiring decisions. The Securities and Exchange Commission considers AI in financial services. However, no comprehensive federal AI legislation has been enacted, though numerous proposals circulate in Congress.

State-level regulation has proceeded unevenly, with California, Colorado, and other states considering or passing AI-related laws. This patchwork creates compliance challenges for companies and risks creating conflicting requirements across jurisdictions. Some industry leaders have called for federal preemption to create consistent national standards, while others prefer state-level experimentation.

International governance faces additional challenges. AI development occurs globally, but countries have different values and regulatory approaches. Authoritarian regimes may use AI for surveillance and control, while democracies emphasize protecting rights and freedoms. Achieving international cooperation on AI governance amid geopolitical tensions and competing interests appears difficult, yet the global nature of AI systems and their impacts suggests some coordination is necessary.

OpenAI itself has called for AI regulation; it is highly unusual for a technology company to advocate government oversight of its own products. The company has proposed regulatory frameworks including licensing requirements for powerful AI systems, auditing and safety standards, and restrictions on certain applications. Critics question whether companies developing AI can be trusted to shape regulations governing their own products, citing conflicts of interest.

We'll talk more about the challenges regarding AI governance in a later chapter.

Looking Forward

ChatGPT represents not an endpoint, but a milestone in ongoing AI development. More capable systems are already emerging, with multimodal capabilities, longer memory, better reasoning, and reduced hallucinations. The trends suggest AI will become increasingly integrated into daily life, work, education, and creative endeavor. How society navigates this integration will determine whether AI becomes a beneficial technology or one that exacerbates inequality, threatens employment, and concentrates power.

Optimistic scenarios envision AI as a transformative tool that enhances human capabilities, automates drudgery, democratizes access to knowledge and creativity, and helps solve complex problems from climate change to disease. In this future, humans work alongside AI systems, focusing on uniquely human strengths like emotional intelligence, ethical judgment, and creative vision while AI handles routine cognitive tasks.

Pessimistic scenarios worry about technological unemployment, widening inequality as AI benefits accrue to capital rather than labor, loss of human skills and agency, manipulation through AI-powered persuasion, and existential risks if AI systems become uncontrollable. These concerns aren't mutually exclusive with optimistic possibilities, for AI may simultaneously create enormous benefits and serious harms, with distribution of both determined by the choices we make now.

The path forward requires thoughtful attention to multiple challenges.

- Education systems must adapt to teach skills valuable in an AI-augmented world while maintaining fundamental literacies.
- Workforce policies must address transitions for displaced workers while enabling productive human-AI collaboration.
- Intellectual property law must balance protecting creators with enabling AI development.
- Regulatory frameworks must ensure safety and accountability without stifling innovation.
- International cooperation must address global challenges while respecting different values and governance approaches.

Perhaps most importantly, society must grapple with questions of purpose and meaning in an age of increasingly capable AI. If machines can perform tasks we've considered valuable, what defines valuable human contribution? How do we maintain dignity and purpose when AI can match or exceed human capabilities in so many areas? These questions don't have purely technical answers; they require philosophical, ethical, and social reflection about what we value and why.

Conclusion

ChatGPT's development and societal impact reflect the broader transformation underway as AI matures from a research curiosity to a technology reshaping civilization. The system's remarkable capabilities, accessibility, and rapid adoption have forced society to confront questions about work, education, creativity, truth, and human identity that might otherwise have remained abstract for years to come. While ChatGPT itself will be superseded by more advanced systems, its significance lies in being a catalyst for public engagement with AI and its implications.

ChatGPT represents one of the great technological turning points of the 21st century. Like the printing press, telegraph, radio, and internet, it transformed how information moves through society. But it also did something new: it made intelligence itself a service. ChatGPT democratized access to knowledge, creativity, and reasoning. It changed how people work, learn, think, and communicate. And it accelerated a global reckoning with the promise and peril of artificial intelligence. The world will never again live without conversational AI, and ChatGPT was the spark that ignited that transformation.

The Ripple Effects

Society in the Age of AI Companionship

From Watson to ChatGPT, each American AI showcase reshaped society's expectations.

- Watson showed machines could compete with human intellect.
- Alexa and Siri made AI domestic, personal, and conversational.
- ChatGPT made AI creative, collaborative, and controversial.

These systems changed how people learn, work, and think. AI tutors, assistants, and copilots became common. Productivity surged, but so did anxiety about authenticity, bias, and the future of human expertise. The "AI showcase" became an ongoing public dialogue, with each success revealing new ethical and cultural challenges.

America's AI Showmanship

What makes the American approach unique is its fusion of innovation and narrative. Every breakthrough has been accompanied by a story, one that captures the public's imagination and sells the vision of progress. IBM's Watson was a show; OpenAI's ChatGPT was a movement. Both turned technical achievement into national theater.

This blending of science and spectacle has defined the U.S. AI trajectory. It's why startups still flock to Silicon Valley, why congressional hearings debate AI ethics in prime time, and why global competitors often measure their progress against American benchmarks.

Epilogue: The Stage of Intelligence

From the buzz of Jeopardy! studios to the quiet hum of cloud servers, America has made AI not only an innovation but a performance. Each act, whether it's Watson, Alexa, or GPT, pushes the narrative forward, blending ambition with anxiety, and progress with peril.

The next showcase may not be on television or in a browser; it may be in the very fabric of reality, as AI becomes embedded in every object, screen, and system around us. But one thing is certain: the curtain will rise again, and America will once more take center stage in the unfolding drama of intelligent machines.

By the middle of the 2020s, seven American companies stood at the pinnacle of artificial intelligence. They were the "Mag7": Microsoft, Apple, Amazon, Alphabet (Google), Meta, NVIDIA, and Tesla. Together they controlled the hardware, software, data, and platforms that powered the modern AI economy. Each began in a different corner of the digital world, but all converged on one goal: to build intelligent systems that could learn, predict, and create.
